Comparing WebRTC with HTTP-based streaming

by Pieter-Jan Speelmans on March 25, 2021

Reducing streaming latency has been a hot topic for a while. Historically, with HTTP based streaming, latencies of tens of seconds and even in the order of one minute are not unheard of. With traditional broadcast in the realm of single digit seconds and newer techniques such as WebRTC showing that latency can drop below one second, the question is of course which approach to pick. In this article we’ll look at how WebRTC works and compare it with HTTP based streaming from the viewpoint of a streaming service. Let’s dive in!

A quick recap on the 'why' of HTTP based streaming

We used to have RTMP, the Real Time Messaging Protocol. It was used for media delivery and gave you sub second latencies. But when HTTP based streaming protocols (such as Adobe HDS, Microsoft Smooth Streaming, Apple’s HTTP Live Streaming and MPEG-DASH) became more widely available, they replaced nearly all RTMP delivery to end viewers. The cost? An extremely high latency, often 40 seconds or more, and that’s just the latency added by the protocol.

So as an industry, why did we switch to these protocols? There are actually a number of reasons. (Some of you will without doubt think of the iOS App Store restrictions, which heavily promote the usage of HLS, but that’s not the main one.) The main reason is scalability. HTTP based streaming is in essence a very simple approach: you chop a video up into separate files and serve them to everyone as if they were static assets. In this sense, it turns live streaming into a sequence of files being loaded in exactly the same way as images or scripts on a website. (For more insights, check our article on how HLS works here.) In contrast, streaming protocols such as RTMP require active servers, keeping a connection open between the server and client for the duration of the session.

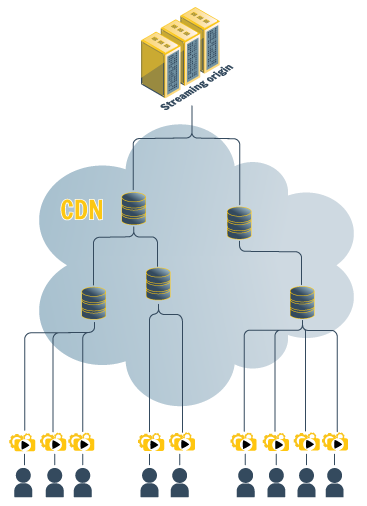
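To make the "sequence of static files" idea concrete, here is a minimal sketch of how a live HLS media playlist could be generated. The function name, the segment file names and the 6 second segment duration are assumptions made for illustration; a real packager writes many more tags and keeps the playlist updated as new segments appear.

```python
import math


def build_media_playlist(segment_names, segment_duration, media_sequence=0):
    """Return the text of a minimal HLS live media playlist.

    Every entry points at a plain file that any web server or CDN can cache.
    The helper and its parameters are hypothetical, for illustration only.
    """
    lines = [
        "#EXTM3U",
        "#EXT-X-VERSION:3",
        # The target duration is an integer no smaller than the segment duration.
        f"#EXT-X-TARGETDURATION:{math.ceil(segment_duration)}",
        # Sequence number of the first segment listed below.
        f"#EXT-X-MEDIA-SEQUENCE:{media_sequence}",
    ]
    for name in segment_names:
        lines.append(f"#EXTINF:{segment_duration:.3f},")
        lines.append(name)
    return "\n".join(lines) + "\n"


playlist = build_media_playlist(
    ["segment100.ts", "segment101.ts", "segment102.ts"],
    segment_duration=6.0,
    media_sequence=100,
)
print(playlist)
```

The player simply re-downloads this small text file and fetches whichever segments are new, which is why ordinary caching infrastructure is enough to serve it.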

When the web was growing, it had to scale. In order to scale, easy to operate caching servers were installed, which could store commonly requested files and serve them without the need for hefty calculations or for keeping connections to clients open for long times. It sparked the creation of large CDN providers, allowing anyone with a basic web server to start serving large audiences. By leveraging static files for streaming, scaling suddenly became easy. There is no need to install and coordinate large numbers of relatively expensive servers or run complex software; instead, “off the shelf” CDN services can be used, scaling to millions of viewers (of course it’s not THAT easy, but you get the gist of it).

For streaming we have seen something similar. As audiences grow, scale becomes more important. (Our State of the Industry guide will inform you on what to look out for in 2021.) Add to that the new capabilities HTTP based protocols bring compared to RTMP, such as Adaptive BitRate switching (ABR), which lets each client tune to the vastly different network conditions of all of those viewers, and we can mark some significant pros in the HTTP based streaming column. Today the number of viewers keeps growing, and most streaming delivery is done through HTTP based protocols as a result of these advantages.
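As a rough illustration of what ABR does on the client, the sketch below picks the highest rendition that fits within the measured throughput. The bitrate ladder, the function name and the 0.8 safety factor are assumptions chosen for the example; real players use considerably more elaborate heuristics (buffer level, throughput history, and so on).

```python
def pick_rendition(bitrates_bps, measured_throughput_bps, safety_factor=0.8):
    """Pick the highest bitrate that fits within a fraction of the throughput.

    Falls back to the lowest rendition when nothing fits.
    All names and the default safety factor are hypothetical.
    """
    budget = measured_throughput_bps * safety_factor
    candidates = [b for b in sorted(bitrates_bps) if b <= budget]
    return candidates[-1] if candidates else min(bitrates_bps)


# Hypothetical bitrate ladder, in bits per second.
ladder = [400_000, 1_200_000, 2_500_000, 5_000_000]

good_network = pick_rendition(ladder, 4_000_000)  # budget is 3.2 Mbps
poor_network = pick_rendition(ladder, 300_000)    # budget is 0.24 Mbps

print(good_network, poor_network)
```

Because every rendition is just another set of static files, this decision can be made entirely by the player without any per-viewer state on the server.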

If HTTP based streaming is so great, why have WebRTC?

WebRTC is an extremely powerful standard with great tools behind it, and a lot of industry support. It was developed to bring real-time communication capabilities (RTC, get it?) to applications, allowing video, audio or any other type of data to be sent between peers. This is a significant difference compared with HTTP based streaming, which is client-server oriented.

With WebRTC, it suddenly became possible to easily build voice- and video-communication solutions between clients, even working from within your browser. Think about how “easy” it has become to use video chat when working from home. Odds are extremely high you will be using WebRTC when on a call. If I needed to build something similar, WebRTC would without a doubt be the first idea on the table.

In essence, WebRTC is a lot more advanced and a lot more complex than standard server to client HTTP based streaming. This makes sense. With WebRTC, connections are set up between clients, and in normal setups servers are only used to coordinate peers connecting to each other. All kinds of challenges pop up when trying to connect users who are potentially sitting behind a massive firewall, on all kinds of different devices and networks, and who need to both send and receive data. Receiving data from a managed server is easy, but sending data to another client is a lot more complicated. WebRTC answers these challenges with a combination of different techniques. This makes it an ideal approach when connecting multiple clients in your network directly is a must-have.

The capability to send data between peers also opens up new options, for example having one viewer send media data to another, relieving stress from the server. There are plenty of examples of media focussed “peer-CDNs” which make it even easier to scale media distribution (and reduce normal CDN costs by leveraging your viewers’ bandwidth instead of the CDN’s). In this article however, we’re not going to focus on that.

How can WebRTC be used for streaming?

Let’s focus on actually delivering video with WebRTC in a server to client kind of approach. When the server acts as one of the peers, everything becomes easier. The server side of the equation can be controlled easily, which removes the need for WebRTC TURN servers (relay servers that proxy traffic when peers are behind a restrictive firewall, something you can of course control on your own servers) and allows STUN servers (the servers that help peers find out how they can be reached, so a port/connection can be opened) and messaging servers (which share connection data about other peers, or in this case about the servers acting as peers) to be collapsed into the actual streaming servers. On the other hand, WebRTC does still require active stateful connections, which has an impact on scalability.

By deploying streaming servers as peers within a WebRTC connection, one can leverage WebRTC to do traditional server to client streaming. Because of the cost of active connections, this does mean streaming services using WebRTC will need a dedicated kind of CDN, one which doesn’t come cheap. You will either be paying a lot for large server instances, or you will require a lot of servers. As an example, if you assume a server instance of a specific size can serve “X” viewers, you could set up a hierarchy: placing X servers behind a first origin server allows X*X viewers to connect in total. This approach can be repeated, allowing for any arbitrary number of viewers in the end. The number of servers will however grow, depending on the size of X (which is tied to the size of the instance, and thus to the operating cost).
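The hierarchy arithmetic above can be sketched as follows. This is a simplified model under stated assumptions: every server handles exactly `fanout` (the “X” from the text) downstream connections, and every tier is fully populated, so the server count is an upper bound rather than a sizing recommendation. The function name is hypothetical.

```python
def plan_hierarchy(target_viewers, fanout):
    """Return (tiers, total_servers, capacity) for a tree of relay servers.

    Each server feeds at most `fanout` downstream connections, which are
    either further servers or, on the last tier, viewers.
    """
    if fanout < 2:
        raise ValueError("fanout must be at least 2 for the tree to grow")
    tiers = 1
    servers_in_tier = 1   # the first tier is the single origin server
    total_servers = 1
    capacity = fanout     # the origin alone can serve `fanout` viewers
    while capacity < target_viewers:
        # Add one more tier: every server in the last tier feeds `fanout` new ones.
        servers_in_tier *= fanout
        total_servers += servers_in_tier
        capacity *= fanout
        tiers += 1
    return tiers, total_servers, capacity


large_instances = plan_hierarchy(1_000_000, fanout=1000)
small_instances = plan_hierarchy(1_000_000, fanout=100)

print(large_instances)  # (2, 1001, 1000000)
print(small_instances)  # (3, 10101, 1000000)
```

For the same one million viewers, the smaller instances need an extra tier and roughly ten times as many servers, which is the trade-off between instance size and server count described above.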

With WebRTC being focussed on low latency video delivery, it does come with some (dis)advantages. For one, the protocol comes with built-in handling of lost packets (WebRTC usually defaults to UDP). When the network connection to a client deteriorates, packets are dropped automatically, and because playback simply continues with the next frame that can be rendered, the latency remains low. While good for some use cases (for example a conference call where a lost frame matters less), this can be undesirable for other use cases such as premium sports delivery. Thanks to the stateful connection, a server-peer could however pick up on data being lost, and scale down the video quality in a server-side ABR kind of way. Similarly, the stateful connection could allow for heavy personalisation of the feed, not just for network efficiency, but also for cases like SSAI. While WebRTC does not provide this out of the box, it is an interesting option. All of those things do require even more compute on the edge, which in turn will reduce the number of clients a single server can handle.
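A server-side ABR decision of the kind hinted at above could look like the sketch below. It is not something WebRTC provides out of the box: the function name and both thresholds are assumptions for illustration, and the loss fraction is assumed to come from the feedback the server-peer receives about the connection (for example the loss reported in RTCP receiver reports).

```python
def adjust_quality_level(current_level, loss_fraction, max_level,
                         step_down_above=0.05, step_up_below=0.01):
    """Return the new quality ladder index after the latest loss report.

    Level 0 is the lowest quality. Thresholds are hypothetical defaults.
    """
    if loss_fraction > step_down_above:
        # Too much loss: move one step down, but never below the lowest level.
        return max(current_level - 1, 0)
    if loss_fraction < step_up_below:
        # Connection looks clean: carefully move one step up.
        return min(current_level + 1, max_level)
    # In between: keep the current quality.
    return current_level


level = 2
for reported_loss in [0.10, 0.08, 0.03, 0.0]:
    level = adjust_quality_level(level, reported_loss, max_level=3)
    print(reported_loss, level)
```

Running a decision like this per viewer is exactly the kind of extra edge compute that reduces how many clients one server can handle.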

How do we really compare WebRTC against HTTP based streaming?

When really comparing the two options, it often boils down to a handful of questions:

- What kind of latency do you need?

- How large is the audience going to be?

- How much are you willing to spend?

The answer to the latency question depends on which latency range you are targeting.

- "Latency is not important"

In this case, HTTP based streaming is likely the best choice. It will be the easiest and cheapest to set up.

- "Latency should be below 8 seconds"

HTTP based streaming is still the best choice. LL-HLS and LL-DASH are probably the best protocols for the job. Even with relatively standard configurations, the 4s mark is well within reach.

- "Latency should be around 1 second"

Here the choices are more limited. While LL-HLS and LL-DASH can be tuned to handle this, they are not very well suited. Dipping below the 1s mark will also become practically impossible for them at scale. Among the HTTP based protocols, HESP is an option, as it can provide sub second latency at scale with standard CDNs, including strongly reduced time to first frame for stream startup and channel change. The current downside is that the number of vendors providing support is still relatively limited (although growing consistently). WebRTC remains a valid option here as well, especially when the audiences are small. It can even become the must-have if you need a deployment where every millisecond counts (but in that case, the audience likely is small anyway).

- "Latency in the 200-300ms range"

In this case, you will likely need WebRTC. Protocols like HESP can get close, but it’s really pushing the limit here. While WebRTC will also have issues reaching this if the network is not optimal, that impact will be there for other protocols too. Scaling WebRTC for these kinds of ranges does come with some disadvantages: a higher cost compared to WebRTC at higher latencies, a negative impact on some QoE metrics (such as time to first frame and perceptual quality) and a lack of ABR. If hitting this latency target is a must however (although it isn’t for common cases), there is little alternative available.
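The latency ranges above can be summarised as a small lookup. The exact cut-off values (8 s, 2 s and 0.5 s) and the function name are assumptions made to turn the prose into code; the text itself only gives approximate ranges, and audience size and budget still need to be weighed separately.

```python
def suggest_protocols(target_latency_s=None):
    """Map a latency target in seconds to the protocol families discussed above.

    `None` means latency is not important. Cut-offs are illustrative only.
    """
    if target_latency_s is None or target_latency_s > 8:
        return ["HLS", "MPEG-DASH"]
    if target_latency_s >= 2:
        return ["LL-HLS", "LL-DASH"]
    if target_latency_s >= 0.5:
        # Around one second: HESP at scale, WebRTC mainly for small audiences.
        return ["HESP", "WebRTC"]
    # A few hundred milliseconds: little alternative to WebRTC.
    return ["WebRTC"]


for target in [None, 4, 1, 0.25]:
    print(target, suggest_protocols(target))
```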

Looking at the audience size, there is a clear victory for HTTP based protocols when reaching large audiences. While WebRTC “CDNs” do exist, the cost is often extremely high. Looking at popular events and streaming services, HTTP based delivery is still the way to go. (I would be VERY interested if someone ever runs a Super Bowl style event on WebRTC, but personally I don’t see how the economics would work.) A new trend I do see is the hybrid model: WebRTC is used for a small audience, and for larger distribution there is a switch to standard HTTP based protocols at a slightly higher latency. This is for example the case when a handful of people who are actively placing bets or bidding in an auction are served at a low latency, while those who are simply watching don’t suffer from being one or two seconds behind.

Cost-wise, there is relatively little information to compare: most services which allow you to stream over WebRTC don’t share public numbers, and when these are shared, there is quite a big difference based on the committed volumes. One thing which is very clear however, is that the cost is often a multiple of the delivery cost of a traditional CDN. As delivery is one of the highest running costs for most large streaming services, this is not something which should be dismissed lightly. As with audience size (audience size often means cost anyway), the hybrid approach can make sense from a cost perspective. If you are however looking to keep costs in check, HTTP based streaming will be the way to go here.

What we recommend.

My personal recommendation is probably somewhat coloured. What I do believe in, is that things should be used for what they are built: abusing a specific technology for something it hasn’t been built for often causes issues down the road.

For most cases I would recommend the usage of HTTP based protocols. The reasons are simple: they scale at a reasonable cost and the most common latency targets can be reached. The latency target most streaming services have is not in the hundreds of milliseconds range (and even WebRTC can have difficulty here if the network isn’t ideal). The result is that HTTP streaming will cover the largest part of the market with ease. I am a firm believer that HTTP based protocols such as HESP can provide the answer to go even below the latencies of LL-HLS and LL-DASH, and solve a lot of issues not even solved by WebRTC such as channel change speed and network independence. (Do note I work for THEO which is a strong supporter of HESP, hence the colour of my recommendation here.)

This doesn’t mean WebRTC is without cases where it shines. If a really low latency is needed, it is ideally suited to solve the issue, especially when the audience is small, or when a hybrid approach is used where a small number of users is served using WebRTC and the larger audience is served with HTTP based streaming.

Do you have any questions left unanswered? Things you agree/disagree with? Feel free to reach out to us, we’re happy to discuss your use case and see which approach would fit best!
