ROUNDTABLE DISCUSSION WITH THEO, WOWZA AND FASTLY

IS THE INDUSTRY READY FOR LL-HLS?

Watch the recording of the roundtable discussion between industry experts on LL-HLS

Dive into the discussion between THEO’s CTO Pieter-Jan Speelmans, Chris Buckley, Senior Sales Engineer at Fastly, and Jamie Sherry, Senior Product Manager at Wowza. Hosted by Alison Kolodny, Senior Product Manager of Media Services at Frame.io, the panel looked at the real-world implementation of Apple's newly released LL-HLS specification, as well as the benefits and potential pitfalls.

- Implementing LL-HLS in the real world: impacts, opportunities and challenges.

- LL-HLS & Packaging, Digital Rights Management, Subtitling, and Server Side Ad Insertion.

- Improvements in scalability.

- Existing gaps in LL-HLS, LL-DASH or other streaming protocols that still need to be resolved.

- Status of LL-HLS: Today and What's to Come.

Webinar transcript

Alison: Alright, welcome everyone. Thank you for coming. I am Alison Kolodny. I'm a product manager of our media services at Frame.io, a cloud-based video collaboration platform, and also a member of Women in Streaming Media. Previously I worked on multi-platform media playback solutions at JW Player and launched a cloud video platform at Frankly Media. In today's roundtable discussion, we're going to talk about the real-world implementations of Apple's LL-HLS specification, as well as the benefits and potential pitfalls. And then we're going to end with some Q&A. You can use the chat window to send questions throughout the discussion. We'll get back to them at the end. And then I will pass it over to Pieter-Jan.

Pieter-Jan: Yes, thank you. So indeed, we have an interesting panel with the people from Wowza, of course, working on the packager side of this, people from Fastly helping on the CDN side to make sure that everything gets delivered at low latency. And of course, we from THEO, or at least myself from THEO, where we have, of course, implemented a video player to work with low latency HLS. Of course, we've gone through a lot of those things on how low latency HLS works in the past in our blog, in our webinars. So very excited to talk with you all and discuss the real-world problems on low latency HLS.

Chris: Hi, my name is Chris Buckley. I'm a Senior Sales Engineer here at Fastly. I focus on media and entertainment. So, I work with some of our larger publishing and more importantly, video customers. I've been working in the industry for about 20 years on internet infrastructure stuff related to web and video. I'm just happy to be here today.

Jamie: Hi, my name is Jamie Sherry. I'm a Senior Product Manager here at Wowza. I've been here just about eight years and have focused on our media server products and our cloud service product for live streaming. I'm currently looking at some new initiatives around monitoring and analytics, and I've worked on client technologies as well. At previous companies, I worked at Level 3 on the CDN there and at Akamai on the CDN there, and at startups before that.

Alison: Great, thank you. So, let's get started. This first question can go to anyone here: so, we've seen this call for reducing latency in the industry, but what is the real gain for companies distributing content? Why should they adopt low latency HLS?

Jamie: I will go. I think the real gain for distributing content is the enablement of new business cases that require low latency. We see a lot of the benefits of having HLS in the industry with broad adoption on devices. But some of the things we hear are instantly, how can I get that latency reduced? So, I can do this, I can do that. Specific to LL-HLS, the benefits are around again those features, the reach, as I said, and the scale that HLS can provide, CDNs like Fastly and others. And that's an advantage over proprietary options.

But the key component is still that latency being sufficient. We have a lot of alternatives that are cropping up in the industry, some proprietary, again, some around standards that look at lower latency as an offering. And it's really about that whole kind of package I call it. If you get the scale, the quality, the latency, which are all dials that can affect each other and then you get the functionality, that's really what matters, I think.

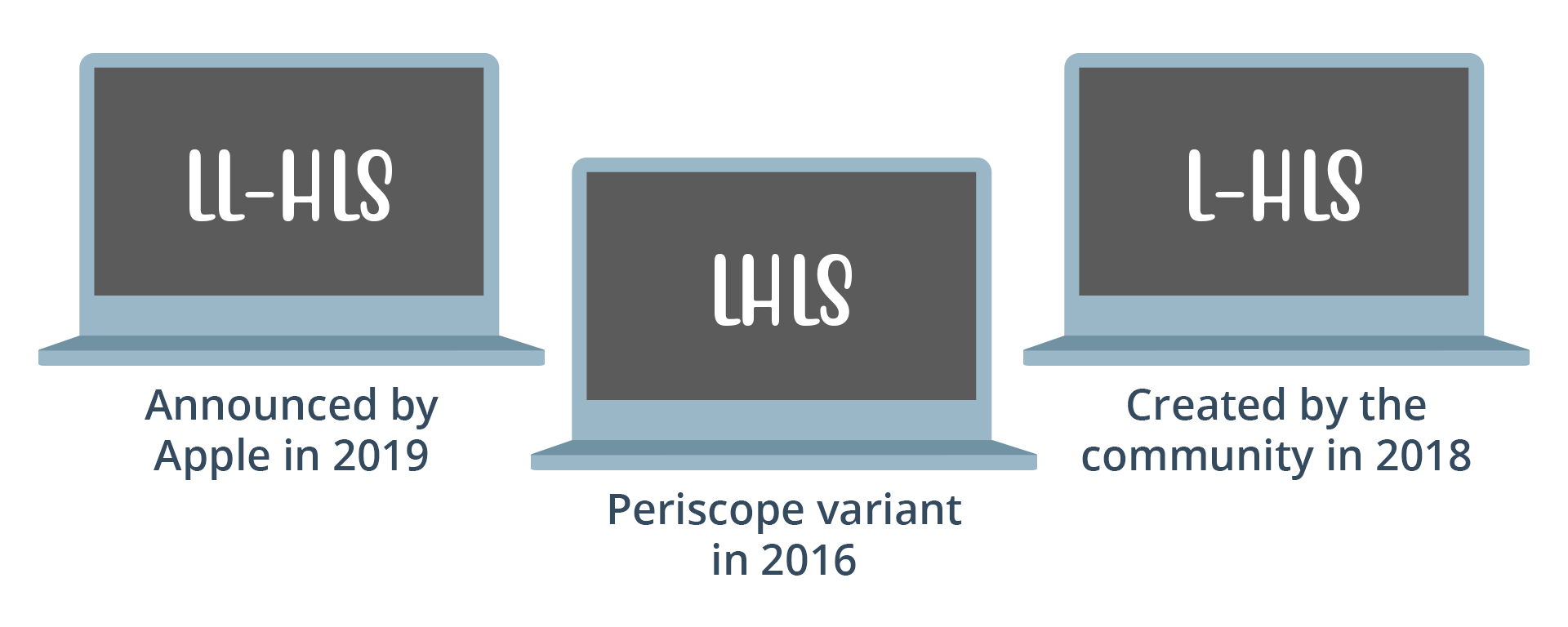

Pieter-Jan: Yeah, I definitely agree on it. There's a lot of low latency protocols out there. Low latency DASH has been out there a while as well. I think that the real power of low latency HLS initially will definitely be the platform reach, as you mentioned. There's a lot of iPhones out there. Apple has a massive ecosystem. And it does make sense for quite a lot of people, if you want to do low latency, I mean, if you want to do streaming in general, you'll have to go through that ecosystem. So, for low latency, having that large of a footprint from day one, basically, that's something that really matters. Reducing latency is one thing, but if you can only do it on certain platforms, it's not that interesting. But with low latency HLS, you actually have a pretty good shot at getting low latency down. With a big footprint initially and in the future, my expectation is with a pretty solid footprint across all devices.

Chris: Yeah, I have to agree both with Pieter-Jan and Jamie here. I think the widespread adoption, when Apple says that they're going to do something, everybody gets on board. Initially, it's going to be all of the Apple devices. But I can see further down the track where this might become the new de facto standard of HTTP-based low latency, as HLS has sort of been for a lot of companies for a long time. I think also in the current atmosphere that we live in, the landscape, the global pandemic, we've got more and more and more things which seem to be semi-permanent to permanently being in a virtualized or distanced frame. So being able to bring some sort of standardized low latency delivery to events, particularly stuff that requires some sort of interactivity, I think is going to be very important. And I think it's going to set the standard for at least the next couple of years.

Alison: Yeah, that makes a lot of sense. And then so with that, it obviously has to be implemented, low latency HLS isn't just going to be implemented overnight. What is the impact of this on your side of your business? I guess I'll start with Chris this time.

Chris: You know, so for us, it's just been a lot of testing, right? So, there were some parts which we weren't so excited about, you know, H2 push, which I think we might get into a little bit later. But, you know, for us it was really about how can we, looking at the spec and looking at things like the increased amount of requests with things like segment parts and all of these sort of blocking technologies that they've added into it - how does a CDN fit into that? How do we best help to reduce requests going back to origin? Because you don't want to have to build like four times the encoding farm just to support something like low latency HLS, right? That just doesn't make financial sense to a lot of people. And also, with emerging sort of more edge compute technologies, for us, we've been taking a big look at sort of where some parts of the stack might fit, and also finding good partners that we can do testing with and provide a solution. Because there are a lot of people coming to us saying, look, we want to do low latency, we don't really have much of an idea where to get started, who to trust, where people are on the spec. So, working with people like Wowza, working with people like THEO, just to sort of all get in the game together and work on this thing together has been super important for us.

Pieter-Jan: Yeah, and I agree that the blocking playlists and blocking other requests, definitely from a player side also brought some new opportunities actually. Doing parts, getting them downloaded, that's not really the big challenge from a player side at least to implement low latency HLS. Bigger challenges were around things like getting the preload hints right, doing the blocking playlists requests right. But also keeping in mind that for backwards compatibility purposes, low latency HLS doesn't always require those features to be available. That's definitely something from a player perspective, which can cause some headaches, as well as, of course, all the obvious other low latency challenges that you face from a player side, such as getting adaptive bitrate switching right, for which Apple did add some things to the specs, such as the rendition reports, which greatly help. I mean, it's significantly easier compared to low latency DASH to do ABR well in low latency HLS. But if you look at it, it's still a different pipeline. There's still going to be quite a lot of different behaviour when you compare the low latency versus the not low latency part. So, it was a pretty big change that we had to do on the player side. But I think it's definitely an improvement for the better as well.
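To make the blocking playlist requests and preload hints a bit more concrete, here is a minimal sketch of how a client could work out its next two requests from a live media playlist. It assumes the `_HLS_msn` and `_HLS_part` query parameters and the `EXT-X-PART`, `EXT-X-PRELOAD-HINT` and `EXT-X-SERVER-CONTROL` tags from Apple's LL-HLS specification; the function names, the sample playlist and the `example.com` URL are made up for illustration, and the playlist URL is assumed to have no query string of its own. It is not how any particular player implements this.

```python
import re
from urllib.parse import urlencode

# Matches KEY=VALUE pairs in an HLS attribute list; values may be quoted.
ATTR_RE = re.compile(r'([A-Z0-9-]+)=("[^"]*"|[^,]*)')

# Hypothetical playlist: segment 10 is complete, segment 11 has one part so far.
SAMPLE_PLAYLIST = "\n".join([
    "#EXTM3U",
    "#EXT-X-TARGETDURATION:2",
    "#EXT-X-SERVER-CONTROL:CAN-BLOCK-RELOAD=YES,PART-HOLD-BACK=3.0",
    "#EXT-X-PART-INF:PART-TARGET=1.0",
    "#EXT-X-MEDIA-SEQUENCE:10",
    '#EXT-X-PART:DURATION=1.0,URI="seg10.part0.mp4"',
    '#EXT-X-PART:DURATION=1.0,URI="seg10.part1.mp4"',
    "#EXTINF:2.0,",
    "seg10.mp4",
    '#EXT-X-PART:DURATION=1.0,URI="seg11.part0.mp4"',
    '#EXT-X-PRELOAD-HINT:TYPE=PART,URI="seg11.part1.mp4"',
])


def parse_attributes(line: str) -> dict:
    """Parse the attribute list that follows the first ':' of a tag line."""
    _, _, attr_text = line.partition(":")
    return {key: value.strip('"') for key, value in ATTR_RE.findall(attr_text)}


def next_request_plan(playlist_text: str, playlist_url: str) -> dict:
    """Return the blocking playlist URL and the hinted part to request next."""
    media_sequence = 0
    complete_segments = 0  # segments that already have an #EXTINF entry
    trailing_parts = 0     # parts listed after the last complete segment
    can_block = False
    hint = None
    for line in playlist_text.splitlines():
        if line.startswith("#EXT-X-MEDIA-SEQUENCE:"):
            media_sequence = int(line.split(":", 1)[1])
        elif line.startswith("#EXT-X-SERVER-CONTROL:"):
            can_block = parse_attributes(line).get("CAN-BLOCK-RELOAD") == "YES"
        elif line.startswith("#EXTINF:"):
            complete_segments += 1
            trailing_parts = 0  # earlier parts belonged to this finished segment
        elif line.startswith("#EXT-X-PART:"):
            trailing_parts += 1
        elif line.startswith("#EXT-X-PRELOAD-HINT:"):
            hint = parse_attributes(line)

    url = playlist_url
    if can_block:
        # Ask the server to hold the response until this part is in the playlist.
        query = urlencode({
            "_HLS_msn": media_sequence + complete_segments,
            "_HLS_part": trailing_parts,
        })
        url = f"{playlist_url}?{query}"
    hint_uri = hint["URI"] if hint and hint.get("TYPE") == "PART" else None
    return {"playlist_url": url, "hint_uri": hint_uri}
```

If the server does not advertise `CAN-BLOCK-RELOAD=YES`, the sketch falls back to a plain playlist URL, which reflects the backwards-compatibility point above: a player cannot assume these features are always available.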

Jamie: Yeah, just to add on to both what Pieter-Jan and Chris said about the working together, I think that's been a key thing here, right? The streaming pipeline or workflow, whatever you want to call it, right? Requires work to be done on the player. And at some levels, the CDN, and then at other levels, the Packager and Origin space where we play a role at Wowza. And really, you know, we've been doing this for a while. In other words, working on the Apple spec. You know, we're all waiting for the Apple spec to get out there now and for a while it was a bit fluid and kind of changed a bit here and there. Keeping up with that is challenging, but working together we've had a lot of good feedback back and forth I think to help each other out with getting this right. So that by the time we get out with our customers and Apple is ready, when everything lines up, that we're all ready to go. So, I think that's been a good part of this. We've seen it again; latency is a key thing in our business and especially with live streaming being a key part of our business. And so, this was important from day one and being ready when everything lines up is key. And again, working with everyone has been really helpful.

Alison: Yeah. And you've mentioned how the specifications have evolved over time. The first draft of the low latency HLS spec had HTTP/2 push, which caused a bit of a small uproar in the industry due to scalability, but at a later point the draft was updated to leverage preload hints instead. What was the impact of this change? I would say, Chris, maybe, as you also mentioned the HTTP/2 push.

Chris: Yeah, I'll take a first crack at that. So, I think one of the big things with HTTP/2 was the fact that a lot of different CDNs, Fastly included, had different levels of support for H2 in general. Some didn't have any support at all. Some had H2 offload, maybe some have H2 origin. But more importantly, their implementations of H2 Push varied a bit too. So, some like us, we supported H2 Push using headers coming from the origin, or in this case, the packager. Some other CDNs, they relied on meta tags in HTML, which doesn't really work for a video-based workflow. And again, some of them didn't support H2 Push at all. So just the splintering of support in the large-scale delivery side of things caused a massive problem just for our industry, but also for things like ad insertion, those kinds of things, which I think packagers and players might be able to speak a little bit more to.

For us, moving to the preload hints is great because, with the preload hints and the blocking updates, you can have a lot of TCP connections running to the packager at any one time, waiting for the next part to come with the preload hint. Being able to coalesce or collapse all those requests into a single request to origin is something that we can do. It really takes a lot of burden and a lot of load off the origin. So, for us, and also for being able to help customers, I think it was a really great move.
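As a rough illustration of the request collapsing Chris describes, the sketch below lets many concurrent requests for the same not-yet-available part share one origin fetch. It is a toy model in Python's `asyncio`, not Fastly's implementation; `CoalescingCache`, `slow_origin` and the part path are hypothetical names.

```python
import asyncio


class CoalescingCache:
    """Collapse concurrent requests for the same URL into one origin fetch."""

    def __init__(self, fetch_from_origin):
        self._fetch = fetch_from_origin
        self._in_flight: dict = {}  # url -> asyncio.Task for the pending fetch

    async def get(self, url: str) -> bytes:
        task = self._in_flight.get(url)
        if task is None:
            # First request for this URL: start the one and only origin fetch.
            task = asyncio.create_task(self._fetch(url))
            self._in_flight[url] = task
            # Forget the task once it finishes so later requests fetch again.
            task.add_done_callback(lambda _: self._in_flight.pop(url, None))
        # Every caller, first or not, waits on the same task.
        return await task


async def demo():
    origin_hits = 0

    async def slow_origin(url: str) -> bytes:
        # Stand-in for a packager that blocks until the part is ready.
        nonlocal origin_hits
        origin_hits += 1
        await asyncio.sleep(0.05)
        return b"part-bytes"

    cache = CoalescingCache(slow_origin)
    responses = await asyncio.gather(
        *(cache.get("/live/seg11.part1.mp4") for _ in range(100))
    )
    return origin_hits, len(responses)
```

Running `asyncio.run(demo())` serves one hundred waiting viewers with a single origin request, which is the load reduction on the packager that the blocking model depends on.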

Alison: And then, from the playback perspective, Pieter, if you could speak to that.

Pieter-Jan: Yeah, well, for us, it was actually initially a little bit of a painful change. We had started implementation of low latency HLS way before the final version of the specification. So, we actually started implementation while H2 push was still in the draft. Once that was changed, of course, we had to throw things around. We had to start from scratch again or at least back to the drawing board.

But it's indeed, it's as Chris mentioned, preload hints are actually a nice change. It made measuring bandwidth, those kinds of things a lot easier from a client perspective. But also, it's, as Chris mentioned, the server-side ad insertion part, those kinds of things just all become a lot easier with preload hints. There are still some challenges there. It's not all happy thoughts and sunshine, unfortunately, there are still some pretty complex scenarios that you can build with preload hints, such as, well, the approach that I would actually take if I would be a publisher, taking preload hints and supporting them through byte ranges, delivering them through byte ranges. Definitely a very interesting approach because it gives a lot of compatibility with DASH and potentially other protocols as well.

From a player's side, it did provide some challenges, but in the end, it was quite easy to support if you look at the bigger picture of LL-HLS in general.
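The byte-range approach mentioned above can be sketched as follows: a preload hint may carry `BYTERANGE-START` and `BYTERANGE-LENGTH` attributes, and the client turns them into an HTTP `Range` header. The attribute names come from the LL-HLS specification, where a missing length means the hinted part runs to the end of the resource; the helper name and the example values are assumptions for illustration.

```python
from typing import Optional


def range_header_for_hint(attrs: dict) -> Optional[str]:
    """Build an HTTP Range header value from preload-hint attributes.

    `attrs` is the parsed attribute list of an EXT-X-PRELOAD-HINT tag,
    for example {"TYPE": "PART", "URI": "seg11.mp4", "BYTERANGE-START": "20000"}.
    """
    if "BYTERANGE-START" not in attrs and "BYTERANGE-LENGTH" not in attrs:
        return None  # the hint covers the whole resource, no Range header needed
    start = int(attrs.get("BYTERANGE-START", 0))
    length = attrs.get("BYTERANGE-LENGTH")
    if length is None:
        # Open-ended request: the server keeps sending bytes as the part is produced.
        return f"bytes={start}-"
    return f"bytes={start}-{start + int(length) - 1}"
```

With an open-ended range on the full segment URL, the same segment file can serve both LL-HLS parts and chunked delivery for other protocols, which is where the compatibility benefit comes from.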

Alison: Okay. And then, so kind of going to that big picture. So, this technology is fairly recent. Devices capable of playing LL-HLS are still a little scarce. Are there any other notable limitations companies implementing LL-HLS should take into account? Jamie?

Jamie: Sure. I think in some of the early testing, we've seen this with mutual customers, or testing with THEO even, directly. I believe customers are just going to have a notion that LL-HLS is just going to be another, as it's part of the spec, just another thing that just kind of works, right? And they're going to expect that to work with all the current functionality they use that goes along with the delivery. And that lends itself again to what I said earlier, where they may not realize that there's actually development efforts in a significant way across the streaming pipeline or workflow with the player and the CDN and everyone else that's involved.

And so, when they want features on top of the delivery like timed metadata or security options, the list goes on and on, subtitles, captions, as we started to talk about, and ads, all those pieces. They're going to come probably in a bit of a staggered way, because LL-HLS is slightly different, it's going to perhaps not all be there right day one and probably will require obviously some changes to how they do stuff with current HLS implementations they have.

So, I think the other part of it to think about is the platform support we talked about. Chris, I think you said it, right? Platform support will hopefully take off if this takes off, right? That includes, you start obviously immediately with HTML5 in the browser and iOS, of course, and then it grows from there to things like Android, which I know there are various entities, I think, you've got some stuff lined up for SDKs for OTT and Android and so forth, but you have the Rokus and the other folks that we're talking to already who are customers, like I need this to work on all my platforms, like I have HLS, right? Those challenges don't necessarily come day one. We hear a lot of variety actually from conversations in the ecosystem that some are urgent to have this out, they want when Apple's ready and everyone else is ready and some are like, we want to kind of gauge our interest with the market and when we hit critical mass, then we'll be ready and that kind of thing. So different approaches with timing, I think are going to be there and that are happening.

I'm curious too, and Pieter-Jan, you guys are right there, I don't know if there are thoughts around device performance, especially on mobile, and if there are any implications there. Maybe not, but you know, things like this crop up.

Pieter-Jan: Devices are indeed an interesting one. On mobile devices, I don't foresee too big of an issue, to be honest. It's indeed the case, Apple has a pretty good player out of the box. Apple gives limited flexibility in some cases but of course, it's sufficient for most use cases. Androids, to be honest, I don't expect too many other platforms to get native support for low latency HLS. If you look at the native support, usually it stopped around version three or four. So that's something, of course we're betting on that. We're delivering that to our customers. I don't see big issues on the mobile devices. The biggest issue that I see at this point in time is the devices where native support is basically the way to go. What am I thinking about? Very old smart TVs, some locked devices like Roku devices, although their HLS support usually is pretty good. It's usually those devices that give an issue when it comes to new technology, similar to how it was for low latency DASH, where native implementations are almost non-existent.

A bigger issue that I still see, and you've touched on that as well is things like subtitles, DRM, even server-side ad insertion. I mean, there are topics that have been touched upon even by Apple, but if you look, for example, all Apple reference streams have subtitles in them except for that low latency HLS reference stream, which I found quite interesting. And even when you ask them about it, they don't have a good answer why it's not there. So that's definitely a set of capabilities that customers will need. I mean, let's be realistic. If you look out to the landscape of streaming providers, most of them, either need subtitles or multiple audio tracks or, as you mentioned, DRM or server-side ad insertion. I mean, there's barely any cases out there that don't have one of those features enabled.

Getting those implemented on the packager side, but also on the player side, that's usually the tricky thing. We've seen it with other low latency protocols. It's all possible, it's all doable, but I'm afraid that it will take some time still in the ecosystem to get everything up and running and to get everything compatible with each other. Because those are things that are often touched on in the spec, but they're not fully in the spec. There are standards around them, but it's not like every fine detail is defined. There's a lot of different configurations out there. And those are cases to keep in mind. Those are cases to talk to your vendors about, see what they recommend, see what they've tested together. I mean, we've done a lot of testing already between the three of us, but there's always more work to be done. There will always be cases from customers that we haven't checked yet. Because every case is unique in the end. So, it will take a while before all of those unique cases have been covered. That's at least, well, on one side, the fear and on the other side, the challenge that I see. It's always fun to do, but it will take a while, I'm afraid, to really get it as an out-of-the-box solution.

Alison: So yeah, you've touched upon a lot of those sensitive areas that need to be fleshed out more. One of them is DRM. Especially now, a lot more streaming is going on. We want to have low latency, but a lot of that high quality content also requires some sort of protection or content security. I don't know, Jamie, if you have any additional input on how you think LL-HLS is impacting security.

Jamie: Sure, yeah. I mean for DRM specifically; the supported DRM technologies will be the same. That's not a big deal, but I'm going to play off something I think Pieter-Jan, you're quoted as saying, you know, talking about key rotation, right, for content decryption and that means getting a license. A lot of these features will come down to timing. I know, I think we'll talk more about some of these other features in this conversation and timing with the notion of what LL-HLS means as far as more requests and smaller timeframes for the partial segments and so forth, right? The timing crunch becomes even more critical in terms of alignment of making sure these features work at the delivery. And so key rotation is one of those examples.

Real quickly, I'd say, just to touch on other security, I know, Chris, you'll probably have some thoughts on this too, but in talking about token authentication, geo policies would probably be okay, but other security rules that affect not so much the content encryption or real delivery of the content, if you're protecting renditions at lower levels, that gets more complicated to make sure that tokens are appropriately applied, things like that. Encryption is probably less of a concern, but I'm curious. Chris, what do you have to say about that from your side?

Chris: Yeah, so on the delivery side, we don't really have much skin in the game in regard to specific things like DRM. That is more a content manipulation, content validation kind of side. So, players and packagers really are more involved in that. I'm really excited that TLS 1.3 is recommended as part of the spec. You've got more secure ciphers for delivering the content over an insecure internet and also potentially reducing some round trips to further reduce the latency of the delivered product.

Token authentication is something that most CDNs, most Edge delivery can implement on the Edge. I don't see that really changing with low latency HLS. I don't see the way that token authentication will be done really needs to change. I guess you could potentially have shorter token times or something like that.

But another thing I've been thinking about recently is stuff like forensic watermarking. A lot of the forensic watermarking companies are sort of doing stuff either on the origin side or in a combination of origin and Edge. And seeing how that might play with low latency, just seeing as you have such small, different parts all of the time, how best to kind of tackle that problem. That's going to be an interesting one for the security side moving forward, I think.
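For readers unfamiliar with the edge token authentication Chris mentions, here is a minimal sketch of a signed, expiring token checked with Python's standard `hmac` module. The token format (`<expiry>_<hex digest>`), the function names and the secret are invented for this example; real CDNs each define their own schemes. The "shorter token times" idea simply corresponds to a smaller `expires_at` window.

```python
import hashlib
import hmac
import time
from typing import Optional


def sign_path(path: str, expires_at: int, secret: bytes) -> str:
    """Issue a token binding a URL path to an expiry time (Unix seconds)."""
    message = f"{path}:{expires_at}".encode()
    digest = hmac.new(secret, message, hashlib.sha256).hexdigest()
    return f"{expires_at}_{digest}"


def verify_token(path: str, token: str, secret: bytes,
                 now: Optional[float] = None) -> bool:
    """Check at the edge that the token matches the path and has not expired."""
    current = time.time() if now is None else now
    expires_text, _, digest = token.partition("_")
    if not expires_text.isdigit() or int(expires_text) < current:
        return False
    expected = sign_path(path, int(expires_text), secret).partition("_")[2]
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(digest.encode(), expected.encode())
```

Because LL-HLS multiplies the number of playlist and part requests, a check like this runs far more often per viewer, so keeping it a cheap, stateless computation at the edge matters more than with regular HLS.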

Alison: Yeah, I would agree with that. And then so I guess going, one of the main reasons we have a lot of this content security is also to help with monetizing the content. And everyone here has kind of briefly touched upon server-side ad insertion has become a very big, very prevalent method of monetization. Is it becoming easier in any way because of LL-HLS or is it harder? If you want to elaborate on that a bit, Chris?

Chris: So, for monetization and server-side ad insertion, it's an interesting one from a delivery perspective because, in traditional CDN delivery, we're picking up a playlist and we're just sending it down to the end user. So, we're not really doing anything with the content.

However, in the last couple of years, we've had an explosion of Edge compute capabilities where people are taking some of the functionality that they'd run in their AWS, that they'd run in their data center or GCP, whichever cloud they're using. And they're starting to move more and more of that to the Edge. So, I think that there is a capability there for delivering ads in a more low latency fashion by being able to push server-side ad insertion into Edge-side ad insertion, basically, where those decisions are being made much closer to the end user. And that could potentially either do some more hyper localization, or just improve performance or personalization in some way. So, that's not necessarily specific to low latency, I think that's more just where Edge compute is going in general.

Jamie: Yeah, I think I'm happy to have the Edge play a greater role. I think there's advantages too, as you mentioned, with personalization and individualization. I think it's too early to tell whether server-side ads get easier, probably doesn't, with low latency HLS.

What's interesting, just to reflect on it, is that Roger (from Apple) has got a video out there talking about it, and I love the visualizations, but certainly I think it's an oversimplification of how you would go about doing it. What's the appetite? It certainly gets people going and thinking they can do it. With ads, even on the client side, as we know historically, there are just too many different ways people want to do things and can do things, even with a lot of the standardization that's happened with bodies like the IAB and VAST and all these standard technology approaches over time. It's still just a big mess. Big fan of server-side, but I think time will tell on this one, and it's going to require a bunch of people diving in, really trying to do it, and teasing out where the trouble lies.

I think the one side thing I'd note here is that we've been talking about advertising as monetization piece. I think the latency in itself, and I think we'll talk about that later, but you know, or can talk about it later, lends itself to other monetization schemes too, more so perhaps than advertising. Certainly, there's a lot of content out there that they want to advertise, do low latency on and monetize with ads, but you have pay models that I think are equally applicable here, and in some cases, more applicable as interactivity grows. So just throwing that out there.

Alison: Yeah, building off of what you just mentioned. So, with SSAI, it often requires a lot of metadata to be delivered to the client. And I can imagine a lot of companies would want to leverage similar mechanisms to deliver low latency interactive experiences, like you mentioned. How friendly is LL-HLS towards metadata or even subtitles?

Pieter-Jan: From a client perspective, well, it's similar to what I mentioned earlier. It's going to take a while, I think, before we really see it implemented across the board properly, but if you look at it, it's actually not that complex. The same metadata and the same subtitle schemas that you had for normal HLS are still there, you still have ID3 metadata, you still have date range, you even have program date time, which is kind of mandatory now for low latency HLS, which can actually increase, well, the ease of use for adding metadata to your low latency HLS streams. Simply put, it gives you an absolute timestamp, which of course is very convenient if you want to synchronize external metadata with your video.
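The absolute timestamp Pieter-Jan refers to is carried by the `EXT-X-PROGRAM-DATE-TIME` tag. A small sketch of how it can be used to line up external metadata with the video, assuming the tag value applies to the start of a segment and using hypothetical helper names:

```python
from datetime import datetime, timedelta


def parse_program_date_time(value: str) -> datetime:
    """Parse an EXT-X-PROGRAM-DATE-TIME value such as 2020-10-01T12:00:00.000Z."""
    return datetime.fromisoformat(value.replace("Z", "+00:00"))


def wall_clock_at(program_date_time: str, offset_seconds: float) -> datetime:
    """Wall-clock time of a position `offset_seconds` into the tagged segment."""
    return parse_program_date_time(program_date_time) + timedelta(seconds=offset_seconds)


def media_offset_for_event(program_date_time: str, event_time: datetime) -> float:
    """Seconds from the start of the tagged segment at which an external event
    (a quiz question, a score update) should be shown."""
    return (event_time - parse_program_date_time(program_date_time)).total_seconds()
```

An interactive overlay that only knows wall-clock event times can use `media_offset_for_event` to schedule itself against the player's timeline, without any metadata embedded in the media itself.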

On the other hand, I am still a little bit sceptical about it at this point in time. The number of implementations to insert metadata and even to insert subtitles is still fairly low. Also, even though the standards still apply, and you can still insert metadata and subtitles in the streams the same way as with normal HLS, there is a practical issue. We've seen it especially with low latency DASH, which basically had a similar issue.

What will you do if you have a subtitle if you have a certain caption you want to display? If you're going low latency, then you will have very, very short segments or very short parts in the case of low latency HLS. But if you have a part of, for example, one second such as Apple recommends it, any subtitle under normal circumstances will be on the screen or should be on the screen for a longer amount of time so people can actually read it. So, the question you get then is, should you duplicate it and send out the same caption multiple times, saying that it should be shown from four seconds to five seconds, and then again, from five seconds to six seconds? Or will you distribute it once and say that it will be shown from four seconds to six seconds, but then potentially one part later, you have to reverse on that because, well, your presenter threw in a big, fat slur in there, and all of a sudden you should have shown some other captions on the screen.

And that's, I think, going to be a big challenge still. I've talked with a lot of people on this. Nobody seems to have a consensus on what is the best approach to use. The purists will say that if you repeat it, then you get too much overhead and data. Other people will claim that if you only send it once, but you tune into the stream one second later, you won't have a caption. All arguments are valid, of course. It's going to be a matter of getting a consensus within the industry, I think, on how we should best do it. And of course, we from the player side will try to implement them all, but it's going to be interesting what it will be in the end. But for interactivity as well, the metadata, the subtitles, it's going to be an interesting question, I think.
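The two caption strategies in this discussion can be sketched in a few lines. The first function repeats a cue once per part it overlaps (the "duplicate it" option); the second shows how a player could stitch repeated cues back together. The `Cue` type and both function names are assumptions for illustration, not any packager's or player's actual API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cue:
    start: float  # seconds on the media timeline
    end: float
    text: str


def split_cue_by_parts(cue: Cue, part_duration: float) -> list:
    """Packager side: repeat the cue in every part it overlaps."""
    pieces = []
    index = int(cue.start // part_duration)
    while index * part_duration < cue.end:
        part_start = index * part_duration
        part_end = part_start + part_duration
        pieces.append(Cue(max(cue.start, part_start), min(cue.end, part_end), cue.text))
        index += 1
    return pieces


def merge_adjacent(cues: list) -> list:
    """Player side: merge touching cues with identical text into one cue."""
    merged = []
    for cue in sorted(cues, key=lambda c: c.start):
        if merged and merged[-1].text == cue.text and merged[-1].end >= cue.start:
            merged[-1] = Cue(merged[-1].start, max(merged[-1].end, cue.end), cue.text)
        else:
            merged.append(cue)
    return merged
```

With one-second parts, a cue from four to six seconds becomes two pieces. A viewer who joins at five seconds still receives the second piece, which is the argument for repetition; the cost is the duplicated text in every part, which is the purists' objection.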

Jamie: I think with some of these too, that fragmented approach, it'll be interesting to get people in there and then come up with creative ways to figure out how to solve these problems, right? And then different methods that might rise up. But I think back to the days with advertising, with Flash, where there was no consistency, right? And then you had standards build up. I worry, you said you'll implement them all, but you may not like having to support all those over time. But coming up with consistency, I really would emphasize what you said, which is hopefully that the ecosystem will gravitate towards consistent ways to do these things, or a consistent way. And so that'll be interesting to see what happens. And again, like these others, it'll take time to see how this plays out.

The one other thing I'd touch on too is just with the notion of CMAF, there's the addition of emsg (event message), right? That's certainly a format used with DASH already, and LL-DASH, but you end up with yet another way to look at this. And I think that might matter depending on people wanting backwards compatibility and other reasons for doing it in a combined way. So, it's interesting to see.

Alison: Yeah, for sure. And then, you know, there are lots of business cases for low latency HLS. There's the classic, you know, you think of sporting events. But what other business cases do you think will benefit from the advantages? Are there these interactive quizzes or other forms of interactivity? Is there betting? What are your thoughts? I guess I'll start with Chris.

Chris: Yeah, cool. So I think interactivity is really key. I touched on it before, just because of more and more virtual events, people are really struggling to find compelling experiences with this sort of virtual world that we've been living in. So, with low latency HLS aiming to be two, maybe three seconds behind live, I don't think it's a great case for something like online betting, online auctions, stuff where money is being exchanged. I'm not sure that that's the best use case for it. But either one to many or one to few interactive experiences, I think, could be really great. Also, potentially learning, those kinds of things where an HTTP-based unicast solution works fine, I think there could be some good use cases there.

Pieter-Jan: Yeah, if you really want to go super low latency, like the money kind of cases, it's a fit and it's not a fit. I do agree that having two or three seconds, or well even four or five like you usually see, of latency with low latency HLS, that's a lot. On the other hand, we're coming here from 40, 30 seconds, that kind of ballpark. So, it's definitely a very big improvement. I don't think all use cases will benefit from it just yet. Betting, I do think that they will make use of it simply because the other approaches that they have today either don't exist or they're like WebRTC based or something similar, which doesn't scale that well. So, I think it's definitely a step in the right direction. I also do expect a lot more interactive quizzes, I see a lot of potential as well for interactive television style formats. But well, that's like a chicken and egg kind of problem. I think it would be interesting to see what creative people can come up with. I know I'm not that creative usually, but I can already imagine a number of them. So it would be interesting to see.

But even for those cases where the latency isn't just low enough yet, I do think that they can make a good step in the right direction already. Also, for the more general OTT distribution cases, where I'm looking more at the telco kind of distribution as well, they want to shift away from their classical methods more into the OTT space, but latency was always a problem. I do think that that's going to be pretty big step forward for them as well. I mean, they can still use broadcast style encoders with some low latency HLS. I do think that that's going to be a pretty massive shift as well.

Jamie: Yeah, so totally agree with you, Chris. And just to touch on what you said, Pieter-Jan about creativity, we get this all the time. It's been a historic thing for us is to learn what customers have tried to use our products and services for that we never imagined. And I think it's a great thing to see. And we're seeing that with low latency too. So, to you guys' point of the right numbers and the right use cases, people are going to try anyway, and they're going to push that envelope and they're going to insist within reason that they try to squeeze that out as much as they can.

I think that's one thing we continue to see and I kind of look at it as, I hate to bring it up, but in reality, for all of us, it's been a big change. So pre-COVID, post-COVID, we see a lot of change in terms of growth in market segments that were kind of up and coming, but this kind of just escalated them, right? So, fields like telehealth or medicine. A lot more virtual events, obviously, right? We're all safer at home, and so, we're doing stuff at home. And other pieces like that. I guess I would just say we'll see new ones.

To your points about betting and bidding and auctions and all that, we see people trying, whether it works or not is one thing, right? And you know, some people have even, I hate to say it, to go back in time, had satisfactory auctions on RTMP. So, it's interesting to see what does work. Then they gravitate towards WebRTC, and then they find that the scale, like you said, is not there, and the quality may not be there either, in some cases. And it's super complex still to implement and deploy and so forth. And then, of course, WebRTC doesn't work with our friends at Fastly per se, right? And so that's interesting.

The last thing I would throw out is still latency and synchronizing. You mentioned the interactive TV and second screen, whatever we want to call it, that's what it used to be called at least. Sometimes synchronizing is okay and the latency can be higher. So, these numbers, if LL-HLS is 5-6 seconds, in some cases that is okay if people can find creative ways, through timed metadata or other pieces, to actually synchronize the experience to a greater degree, maybe not down to the frame of video, but if they can get it down to some acceptable level, they'll choose that, and this technology would work. So, it'll be interesting to see what else comes up.

Alison: Yeah. There are all these different protocols to try to reduce latency. LL-HLS seems to be providing a pretty good solution for most of these cases. And then low latency DASH was also doing the same, but from the MPEG-DASH side. Are there any problems left to solve when it comes to these protocols? And are there other changes we should keep an eye out for? Maybe even other low latency protocols which reduce the latency further. Pieter-Jan, if you have a perspective.

Pieter-Jan: I do have some perspectives on that, indeed. I think Jamie already mentioned it, there are still cases where you would need a lower latency, where protocols like WebRTC don't scale that well. But I think every protocol kind of has its place at this point in time. There are still a number of use cases which do require both massive scale and ultra-low latency. There are, of course, protocols that can bring you that. But I'm not going to discuss the one that I have in mind, because it's one that we developed originally. So, I'll not mention that.

But there are other approaches and other problems still as well. One of the bigger problems that I still see is that low latency isn't the complete solution for the end user experience. There are still challenges in ABR, there are still challenges in fast channel change, those kinds of things. There's still a whole spectrum of other challenges that are out there. I don't think that the evolution of the streaming protocols will ever stop. All of those cases that we've already mentioned: SSAI, DRM, subtitles, metadata, they all have areas which can be improved further. And I think that's going to be key in this industry. It's been key in this industry over the past years. It just keeps evolving. And that's something that I do expect will still happen in the future as well. It's going to be interesting to see where that goes too.

Chris: Yeah, in terms of delivery and scaling, this kind of stuff, I think one of the big problems that I saw has already been solved in the revision of the specification, with allowing chunked transfer encoding to be used for delivery of the segment parts using fragmented MP4. So, one of the problems that I was seeing with that is that people would have to have two different encoding and transcoding and packaging workflows in order to be able to support the same media.

So having low-latency DASH and low-latency HLS both able to take advantage of chunked transfer encoding and fragmented MP4, I think, is a huge win for us. That means that we don't have duplicate content on the network. That's a huge win for the packager and the transcoding side because they can have one workflow, at least for doing the video parts, and just have a playlist or a manifest depending on their needs. So that was a big win for me.

One of the challenges or one of the gaps that I see in LL-HLS specifically is the amount of updates to objects on the network. So, for example, if you're not going with chunked transfer encoding, then you're looking at publishing unique segment parts every couple of hundred milliseconds. I don't know that there are many CDNs out there that have ever really thought about doing sub-second caching, let alone actually implementing it. So LL-HLS has caused a lot of the industry to really take a look into their C, or their Go, to see, are we actually honouring these things? Can we honour these things? So that's a little bit of a problem that I see.

But, you know, Apple thought about it a little bit with things like request blocking, and having some parts of the spec requiring request coalescing. At Fastly we call it request collapsing, but basically it's being able to take all the global requests and collapse them down. So, it puts a lot of the workload of managing that stuff on the Edge. And, you know, hopefully we can really take a lot of load off the origins in a bunch of different implementations, with people using different features of the spec in order to help keep it scalable and low latency.
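The request collapsing Chris describes can be illustrated with a minimal sketch. This is not how Fastly or any particular CDN implements it; the `CollapsingCache` class, the `slow_origin` function and the part file name are hypothetical, and the example only shows the idea that many concurrent requests for the same object share one origin fetch.

```python
import asyncio


class CollapsingCache:
    """Toy edge node: concurrent requests for the same key share one origin fetch."""

    def __init__(self, fetch_from_origin):
        self._fetch = fetch_from_origin
        self._in_flight = {}          # key -> asyncio.Task for the pending origin fetch
        self.origin_requests = 0      # how many requests actually reached the origin

    async def get(self, key):
        task = self._in_flight.get(key)
        if task is None:
            # First request for this key: go to the origin and remember the pending task.
            self.origin_requests += 1
            task = asyncio.create_task(self._fetch(key))
            self._in_flight[key] = task
            # Forget the task once it completes (this toy does not cache the response).
            task.add_done_callback(lambda _done: self._in_flight.pop(key, None))
        # Every caller, first or not, waits on the same task.
        return await task


async def slow_origin(key):
    """Hypothetical origin that takes 50 ms to produce a part."""
    await asyncio.sleep(0.05)
    return f"body of {key}"


async def demo():
    cache = CollapsingCache(slow_origin)
    # 100 players ask for the same (made-up) part at the same moment.
    bodies = await asyncio.gather(*(cache.get("seg42.part3.mp4") for _ in range(100)))
    return cache.origin_requests, len(set(bodies))


if __name__ == "__main__":
    print(asyncio.run(demo()))
```

In this sketch the origin sees a single request no matter how many players arrive while the fetch is pending, which is the load reduction on the origin that the discussion refers to.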

Yeah, and as Pieter said, there are other low latency protocols out there. For example, there's HESP, for which we at Fastly just completed a POC with THEO. I haven't personally used it much. And I know that our proof of concept went well with that. So, there are other options out there for people who want options or have specific use cases or don't want to have to refresh their entire stack in order to be able to support the latest cool stuff.

Alison: Great, thanks. Well, I think then we kind of want to know what's next for low latency HLS. What do you all have planned?

Chris: I guess I can go first on that. So, look, again, it's just more and more testing. We've done testing with low latency HLS, but there's stuff like: how do we work with the providers on timed metadata? With our new Compute@Edge product, being able to run more complex code closer to the end users, maybe there are parts of the spec which are better served from the Edge. Maybe Delta updates are good from the Edge. Maybe the blocking playlist reloads are best held at the Edge rather than being sent back to origin.

Potentially, there would be a way for, for lack of a better term, a dumb traditional HLS packager to just keep doing what it's doing and leverage Edge technologies to jam low latency into it. These are all just ideas that we've been playing with. But I think there is some potential for separation of duties with the spec. And we'll just have to see which works, which doesn't, and who best is served by it. So that's what we're working on.

Jamie: So, our immediate focus is on our media server product, Wowza Streaming Engine. And as I said, we've been developing it for a while and had public releases out with it. We just released another beta version that has a bunch of enhancements and bug fixes in there. And by the end of the month, I believe it's next week now, we'll have a patch version out that will have those changes and more as well. And we'll just continue to evolve that to head it towards our next public release of that product, working together with Fastly and THEO on this as well, to make sure the compatibility is there.

We also have a live streaming service that uses Fastly as a CDN, and we're promoting people to use THEOplayer more and more with that service, and we are also working on initiatives to support LL-HLS in that product as well. And that'll come out later in Q4.

Pieter-Jan: Yeah, and from our side, we actually have our first player with the low latency HLS pipeline out there. There's still a lot of things that we're testing for compatibility, expanding our footprint, working on even some new cool algorithms to make it even more efficient, to make it harder, better, faster, stronger. All those kinds of things are on the planning as well. We also have, well, maybe this crazy vision, but based on what Chris already said, I think that he is seeing sort of the same thing on the horizon, where we're seeing that low latency HLS can actually take on a better and bigger footprint compared to just the Apple devices, potentially invade some of MPEG-DASH's territory and start delivering DRM with PlayReady, with Widevine, with all those kinds of things in there as well.

That's one side of it. On the other hand, of course, we all know that Apple loves to update the HLS specification. The next update, if you look at the expiration date of the current spec, is somewhere around November 1st. So, let's hope they don't have too big of an update. But we know a few of the things, of course, already that they will do, like adding the SCORE attribute and those kinds of things. But that's definitely something that we're also keeping our eye on, just to make sure that when something new comes out, we can deliver it and get it on the roadmap as soon as possible. Because it's actually our belief that a lot of customers will soon want to start using it. iOS 14 came out about a week ago, so that's definitely something that we're seeing the markets pick up on as well. Now that low latency HLS is live on Apple devices, people want to start expanding towards the others as well. So yeah, that's definitely something that we're helping them with, to expand to whatever platform they want to go to. And that's something that we'll, of course, keep on doing.

Alison: Great. Well, this has been a great discussion. We'll have our Q&A session. And we will also follow up with some materials after this webinar. But just wanted to say thank you all for being on the panel. Thanks for listening.

From Andrew:

“What is the value of the non-independent segment parts? There is no guarantee that they will start with an I-frame, so a player should never attempt to begin playback from a non-independent part. With a realistic chunk rate of five frames, that's six updates per second per rendition, which is a lot of manifest updates. Is this amount of churn on the manifest really necessary? Couldn't the desired behaviour of allowing the player to determine its own buffer requirements for fastest startup switching be achieved by only listing parts that are independent?”

I feel like this might be best for Pieter to answer.

Pieter-Jan: Yeah, certainly. There's actually something which is very interesting as well about the independent tag in the playlists. The independent tag doesn't even say that the part will start with an I-frame. It will actually say that it has to contain an I-frame. So that's significantly different still from a player perspective. Because even if a part is marked as independent, you can't per se start playback at the beginning of that part. What it does do, actually, is give you some insight into what the structure of the different segments is. That's something you don't have with low latency DASH. But in our implementation of the player, it did prove quite useful. It's indeed the case that you can't always switch at any point in time in the stream, even if you know a part, but what it does give you is that it allows you to switch more often than, for example, on segment boundaries.

We've seen quite a lot of implementations that have GOP-sizes which are smaller than the segment size. Apple also recommends a two-second GOP-size, with a six-second segment size. So that means that every other part you do get an independent part, which is helpful, at least if you also follow the one second per part kind of recommendation from Apple.

So, it is still interesting; that internal GOP structure does make a lot of sense. Do all of the manifest updates make sense? Well, that's a different question. I do think that they make sense in cases where server-side ad insertion or similar capabilities with discontinuities are being used, because then you can switch a lot faster because you know a lot faster when something will happen that will impact playback. But yeah, in let's say traditional cases where you're just playing a linear channel, it doesn't make that much sense if you would ask me. So yeah, I hope that that's a little bit of the answer. Unless somebody has something to add.
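The numbers in this exchange can be checked with a small sketch. It assumes keyframes sit exactly at multiples of the GOP duration from the start of the segment, and it assumes 30 frames per second for the question's "five frames" chunk (the frame rate is not stated in the discussion). The function names are made up for illustration.

```python
from fractions import Fraction


def independent_parts(segment_s, gop_s, part_s):
    """Return one flag per part: True if a keyframe falls inside that part.

    Assumes keyframes at exact multiples of the GOP duration within the segment.
    """
    segment, gop, part = Fraction(segment_s), Fraction(gop_s), Fraction(part_s)
    n_parts = int(segment / part)
    n_gops = -(-segment // gop)  # ceiling division
    keyframe_times = [k * gop for k in range(n_gops)]
    flags = []
    for i in range(n_parts):
        start, end = i * part, (i + 1) * part
        flags.append(any(start <= t < end for t in keyframe_times))
    return flags


def playlist_updates_per_second(frames_per_part, fps):
    """How many new parts (and so playlist updates) appear per second per rendition."""
    return Fraction(fps, frames_per_part)


if __name__ == "__main__":
    # Six-second segment, two-second GOP, one-second parts, as mentioned above.
    print(independent_parts(6, 2, 1))
    # Five frames per part at an assumed 30 fps.
    print(playlist_updates_per_second(5, 30))
```

With a six-second segment, two-second GOP and one-second parts, every other part contains a keyframe, which matches the "every other part" remark. With five-frame parts at the assumed 30 fps, the result is the six updates per second per rendition from the question.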

Alison: All right, I'll move to the next question then:

“Without the PUSH feature, what are the advantages of using H2 instead of HTTP1 for low latency HLS?”

Chris: I can take that one. So yeah, it may seem that H2 is not so important now that they've relaxed the PUSH requirement. However, H2 kind of gives us some more benefits as well. I mean, the first is security, H2 requiring TLS as part of the spec. So, we immediately have an extra security requirement in delivering low latency, which I think is just good for the internet in general.

And secondly, you know, HTTP 1.1 can be expensive in the creation and destruction of TCP connections, and then we have things like keepalive, et cetera. But, you know, being able to do HTTP/2 multiplexing and just being able to ship the data, both the playlists and the segments, metadata, et cetera, all down one TCP connection, I think is a good thing for latency in general. And also, being able to do stuff like TLS for things on the same host over the one TCP connection, again, it just reduces a little bit of overhead in the TCP world. So, I'm still a fan of it needing H2.

Alison: All right, so:

“Does the low latency HLS spec support alternate audio tracks and embedded captions now?”

Pieter-Jan: I can take that one from a player side and potentially from a little bit broader side as well. Yes, absolutely. I mean, low latency HLS does support alternative audio tracks. There's actually no change in how alternative audio, captions, subtitles, DRM, even how most of those things work compared to, well, let's just say - high latency HLS or legacy HLS. The specification didn't have to be updated for those things.

The biggest problem you will have today with all of those use cases is, of course, finding a combination that supports it end to end. Low latency HLS is still fairly new. The first real production use cases or first real production player, which was Apple's player, well, only launched very recently. We have our beta player, or well, at this point, also our production player ready. But it's, of course, a story of getting it end to end, up and running. We've been testing with Wowza, we've been testing with Fastly. All of these are very interesting use cases. But I do expect that the broader industry will still take a while before we can really start deploying it in production as an out-of-the-box kind of solution.

Jamie: Yeah, and from a packaging perspective with Wowza, these features are supported today with HLS, and I don't see them changing with LL-HLS. So, should be good.

Alison: Great, this actually also segues well to the next question:

“What players can we use to play LL-HLS? Also, what browsers support it? We're not able to find a player or browser or a JavaScript player that supports LL-HLS?”

Jamie: Well, from my perspective, I heard only THEO supports it. I'm not sure if that's true. No, I'm kidding. Certainly, THEO supports it. First and foremost, we've been testing with them. And so, from a player perspective, I know others are working on this. We've talked to JW Player, for example. They're working on it with HLS.js too. And then JW and Video.js have plans to do this. There's a question of timing and so forth with each one. I think everybody's, in that waiting game or from a timing perspective, trying to figure out the right and the best time to drop things in for customers.

And as far as browser support, it shouldn't change from what you do with HLS and JavaScript-based or HTML5 and MSE-based players today. It should be the same. But it's a matter of those player applications adding the additional support.

Pieter-Jan: Yeah, and it's probably indeed up to JavaScript-based players. As I mentioned earlier, I don't really expect a lot of native implementations to happen, other than Apple's. It might be that platforms like Roku also implement it, but that's probably going to be a little bit of a stretch. I do expect that, I mean, as Jamie mentioned, more and more players will start to support it. The Apple ecosystem is not an ecosystem you can ignore easily. Once, of course, people start producing LL-HLS streams, I expect they will want to use it on other platforms. That's at least why we implemented LL-HLS in our player at this point, but I expect that others will do this relatively soon as well. It's a matter of timing, because I know that the HLS.js people are working on it today as well.

Alison: Okay, moving on:

“Any insights on the future of low latency streaming and QUIC?”

Chris: I can jump on this one. So, I believe the latest update to the spec did make a mention that they don't see why LL-HLS wouldn't work with QUIC or HTTP/3. One of the issues with being able to do testing of that is that QUIC is still in its final stages of drafts. And the final version of the specification for QUIC or HTTP/3 hasn't quite been ratified yet, which means that browsers all have some form of support, at least in Canary form. Chrome has got a lot of gQUIC stuff going on and they will probably transition to the standard HTTP/3 as time goes on. But you know, most support is only in nightly builds or Canary versions of browsers and phones and whatever. So, I don't think we're really going to see that being merged for a little while. Because LL-HLS may come out with changes too. So, we've got two different standards going at different times at different paces. But we'll see. I'm hopeful.

Alison: Great! Another question:

“How is it possible to mutualise the caching of low latency DASH and low latency HLS video parts on CDN? Is it possible to have the same addresses on the CDN despite the difference of the manifest structure?”

Pieter-Jan: This is something that definitely is possible, we've been talking with a lot of people, and I know that this is more or less the holy grail and probably Chris has some insights on this as well.

The approach that we've seen so far that is the most interesting to take is to actually use chunked transfer encoding, basically for your preload hints, to have all of the parts in one bigger segment and address them with byte ranges. That would be very similar to how you can actually do low latency DASH as well. But maybe Chris has some insights from the CDN side as well.

Chris: Pieter, you are 100% right. So, as I said before, one of the biggest problems that I saw with the spec was having to have two different workflows for low latency DASH and low latency HLS. Being able to address them both with chunked transfer encoding and byte range requests on fragmented MP4s is one of the biggest wins that I've seen, apart from relaxing the H2 push requirement.

And as I said, this is great for CDNs too. We have a lot, but it's a finite amount of disk, and being able to have the same segments to deliver for customers is great for us. I also think it's great for the packagers and the transcoder folks and the customers using them, because then their cloud storage can be halved. There's a lot of positives for being able to merge this stuff together.
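As a rough illustration of the byte-range approach discussed in this answer, the sketch below generates playlist lines in which every part points into the same fragmented MP4 segment. The tag and attribute names (EXT-X-PART with BYTERANGE and INDEPENDENT, EXT-X-PRELOAD-HINT with BYTERANGE-START) follow the LL-HLS specification as best understood here; the `part_tags` helper, the file name and the byte sizes are invented for the example and should be checked against the spec and your packager.

```python
def part_tags(segment_uri, part_sizes, part_duration, independent_flags):
    """Build playlist lines for parts stored back to back in one segment file.

    part_sizes are the byte lengths of the parts published so far (made-up
    values in the demo); the preload hint points at the first byte that has
    not been published yet.
    """
    lines = []
    offset = 0
    for size, independent in zip(part_sizes, independent_flags):
        attrs = [
            f"DURATION={part_duration:.3f}",
            f'URI="{segment_uri}"',
            f'BYTERANGE="{size}@{offset}"',   # length@offset into the shared segment
        ]
        if independent:
            attrs.append("INDEPENDENT=YES")
        lines.append("#EXT-X-PART:" + ",".join(attrs))
        offset += size
    # Hint for the next part: same file, starting where the last part ended.
    lines.append(
        f'#EXT-X-PRELOAD-HINT:TYPE=PART,URI="{segment_uri}",BYTERANGE-START={offset}'
    )
    return lines


if __name__ == "__main__":
    for line in part_tags("seg42.mp4", [1000, 800], 1.0, [True, False]):
        print(line)
```

Because every part URI is the same segment file, a low latency DASH manifest could in principle reference that same object, which is the shared-cache benefit described above.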

Alison: Okay, so another:

“Do we envision CDNs inspecting HLS manifests for preload hint tags and initiating a prefetch from origin before waiting for one of the streaming clients to make the request?”

Chris: So, this is a really interesting question because the preload hint is actually advertising partial segments that don't exist yet. So actually, doing a prefetch of a non-existent part is literally impossible due to time being linear and those kinds of things. But I do see with things like Edge Compute being able to, at least, make the pre-request to the origin and coalesce all of the requests globally. Because the chances are the player is also making a blocking playlist reload at the same time as that request. They get the playlist, they see the preload hint, they go for the preload hint, they're also making the blocking playlist reload. So, what you've got is two requests that are hanging on the origin, which the origin has to hold; 'request blocking' is what they call it in the spec. So, these two requests will go back to the origin. And if the Edge, if the CDN does inspect and does make that request, they would probably also want to pre-empt the blocking playlist reload as well. And then you send both of those at the same time with the next preload hint.

So, I'm not sure that prefetching is the right way to say it. You may shave another couple of milliseconds off it. But I don't think doing a prefetch with the CDN is going to give you a major win.
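The blocking playlist reload mentioned in this answer can be sketched as a URL builder. The `_HLS_msn` and `_HLS_part` query parameters are the ones the LL-HLS specification defines for this purpose, as far as understood here. The `next_blocking_request` helper, the example URL and the fixed parts-per-segment count are assumptions for illustration; a real player would derive the next part from the playlist it just received.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def next_blocking_request(playlist_url, last_msn, last_part, parts_per_segment):
    """Return the playlist URL that asks the server to hold the response
    until the part after (last_msn, last_part) has been published.

    Assumes a fixed number of parts per segment, which is a simplification.
    """
    next_msn, next_part = last_msn, last_part + 1
    if next_part >= parts_per_segment:
        # The last part of the segment was seen: wait for part 0 of the next one.
        next_msn, next_part = last_msn + 1, 0

    scheme, netloc, path, query, fragment = urlsplit(playlist_url)
    # Drop any previous blocking parameters, keep everything else (tokens and so on).
    params = [(k, v) for k, v in parse_qsl(query) if k not in ("_HLS_msn", "_HLS_part")]
    params += [("_HLS_msn", str(next_msn)), ("_HLS_part", str(next_part))]
    return urlunsplit((scheme, netloc, path, urlencode(params), fragment))


if __name__ == "__main__":
    print(next_blocking_request("https://example.com/live/video.m3u8", 42, 2, 6))
    print(next_blocking_request("https://example.com/live/video.m3u8", 42, 5, 6))
```

Players at the same live edge end up requesting the same URL, so a CDN can collapse them into one held request to the origin rather than trying to prefetch a part that does not exist yet.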

Alison: Great, okay. We're going to try to get one or two more questions in. And then we'll follow up with some that we weren't able to answer during this time. I'll start with this one:

“Essentially to summarize which protocols, formats, encoding combinations are supported over the whole chain encoder, Wowza, CDN, THEO?”

Jamie: I'll start. So, from a Wowza perspective, our origin, the Wowza Streaming Engine software, takes in many formats. So, from an encoding perspective and ingest perspective, you can do RTMP, RTSP, WebRTC, it's kind of universal. There's a lot of ways to bring a source in. From there, we will certainly do packaging. We can also do additional media processing in the sense of transcoding. And that includes transrating. And then onto the CDN: we're currently working with Fastly. We have plans to work with Akamai, and any major CDN that our customers need we'll certainly support. And those may be in different ways. There's certainly a consideration for different distribution into the CDN, push, pull, and so forth. And then onto THEO for playback. So it really comes down to how you want to bring it in, lots of ways to bring that source in, and then we'll do the usual things and send it on its way through the CDN and out to THEO for playback.

Pieter-Jan: Yeah, and from our side, for most of the formats, it's basically a pass-through. So, if your device supports H.264, then you're basically using any device in the world, I think. But that will be supported. If you want to do HEVC or AV1 or any other, probably not Apple-recommended, kind of encoding, that's something that we can still do pass-through as well. We did only implement using fragmented MP4 as segments at this point in time. We do have plans to support Transport Stream as well, but interestingly I haven't seen too many cases of people really wanting to do Transport Stream, so that's probably the biggest limitation that we have today. Other than that, it just depends on your device, and we will handle whatever needs to be handled.

Alison: Great. Let's get one more:

“Do you think Apple's low latency HLS will mean they stop working on CMAF low latency or Apple will continue supporting CMAF and low latency HLS?”

Pieter-Jan: I see there are no immediate takers, so I'll have a go. I don't think anybody can look into Apple's head, or at least the many brilliant heads which work at Apple. Doing low latency HLS is definitely one of the things that I think they wanted to do. They probably saw the issue in the market as well. But they're also still supporting CMAF, of course. And low latency HLS is perfectly compatible with CMAF low latency. So, I don't really see any urgent need for them to stop working on anything CMAF related. I would actually expect that they will continue to support CMAF as a whole as well. And we'll have to see what the future brings on that, or at least that's my insight on it or my assumption on it.

Chris: Yeah, I agree with Pieter. Apple spent a lot of time getting feedback from the industry on this new extension to the specification. Big round tables, big feedback sessions. And the two things, in the sessions that I was part of, were that people were really anti the H2 push, and we saw that being relaxed and replaced with preload hints. And also, the question of: why can't we use the CMAF chunked transfer encoding rather than having to do these partial segments? And Apple have moved in that direction, too, in providing the ability to do that. So, I don't think that, well, CMAF in general is not going anywhere. I don't think that CMAF low latency is going anywhere. If people have their low latency DASH workflow, I don't see them just tearing it down and switching to LL-HLS as well. So, I think the future is kind of bright for both of them.

Jamie: Yeah, I agree with both of you. I mean, they've been hinting at with low latency HLS, references to CMAF in different ways, right? And I think they'll continue to do that. The reality is that Apple is Apple, and you don't know until you know sometimes with them and their sense of what they say and when. So, time will tell, but I agree with you, Chris. I don't think people are going to have to rip down what they've done or abandon it. Not going to happen.

Alison: All right, maybe one more question that we received earlier on. Don't want to ignore it. And then a few others that we'll try to send follow-ups on, as well as the recording. So, we have:

“Hi, thanks for the great discussion. I'm wondering what are the implications of using low latency HLS for end-to-end delivery from the origin into the player. As you have mentioned the Edge, is it possible to use one of the real-time protocols like RTMP for video contribution and then repackage the content at the Edge in CMAF, LL-DASH and LL-HLS format, and then deliver it to the viewers? Any recommendations on both architectures?”

Jamie: I mean, I'll just echo what I said earlier with the other question about workflow. We certainly can take, and other services and products can do this too, to take in RTMP, repackage, do other media processing if you need to and go out in the sense of promoting DASH and HLS and CMAF format together. So, all possible. I don't know if Chris, you want to comment on anything?

Chris: Look, we don't really work on the ingest side so much. So, I don't really have much of an opinion about using things like RTMP. I think SRT is maybe the way forward for doing the low latency ingest side of stuff, as opposed to RTMP. But definitely, I would love a world where people are repackaging theirs into CMAF, and so using low latency DASH or low latency HLS, or both, depending on their needs. Because again, being able to collapse that, to halve the content needed to encode and store and package, is a good thing for everybody, I think.

Alison: Okay, well, we are a little over time here. Thank you again everyone who has listened in. For anyone who wasn't able to attend, we will send out a recording. And till the next time!

Speakers

PIETER-JAN SPEELMANS

Founder & CTO at THEO Technologies

CHRIS BUCKLEY

Senior Sales Engineer, Fastly, Inc.

JAMIE SHERRY

Senior Product Manager, Wowza

ALISON KOLODNY

Senior Product Manager of Media Services, Frame.io

FASTLY, INC.

Fastly helps people stay better connected with the things they love. Expectations for online interactions are changing: consumers demand a fast, reliable, and secure internet experience. Fastly helps our customers exceed those expectations by creating great digital experiences quickly, securely, and reliably by processing, serving, and securing our customers’ applications as close to their end-users as possible, at the edge of the internet. This becomes more tangible with edge computing, which aims to move compute power and logic as close to the end-user as possible.

WOWZA MEDIA SYSTEMS

Wowza is the global leader in live streaming solutions. Our full-service platform powers reliable, secure, low-latency video delivery for companies worldwide. With more than a decade of experience working with 35,000+ organizations in industries ranging from media and entertainment to healthcare and surveillance, Wowza provides the performance and flexibility that today’s businesses require. We work with each customer to ensure their success in putting streaming to work for their business. Our promise is simple: If you can dream it, Wowza can stream it.

THEO TECHNOLOGIES

Founded in 2012, THEO is the go-to technology partner for media companies around the world. We aim to make streaming video better than broadcast by providing a portfolio of solutions, enabling for easy delivery of exceptional video experiences across any device or platform. Our multi-award winning THEO Universal Video Player Solution, has been trusted by hundreds of leading payTV and OTT service providers, broadcasters, and publishers worldwide. As the leader of Low Latency video delivery, THEO supports LL-HLS, LL-DASH and has invented High Efficiency Streaming Protocol (HESP) - allowing for sub-second latency streaming using low bandwidth with fast-zapping. Going the extra mile, we also work to standardise metadata delivery through the invention of Enriched Media Streaming Solution (EMSS).
