
Streaming Latency: Protocols and Zapping Time

by Johan Vounckx on March 11, 2021

Previously, we explored the different definitions of latency, when it matters, and what causes it. In this blog we explore how popular streaming protocols fare against each other. We also discuss one other factor that plays a big role in providing the best viewing experience: zapping time.

This is a snippet from our "A Comprehensive Guide to Low Latency" guide which you can download here.

Latencies of different protocols

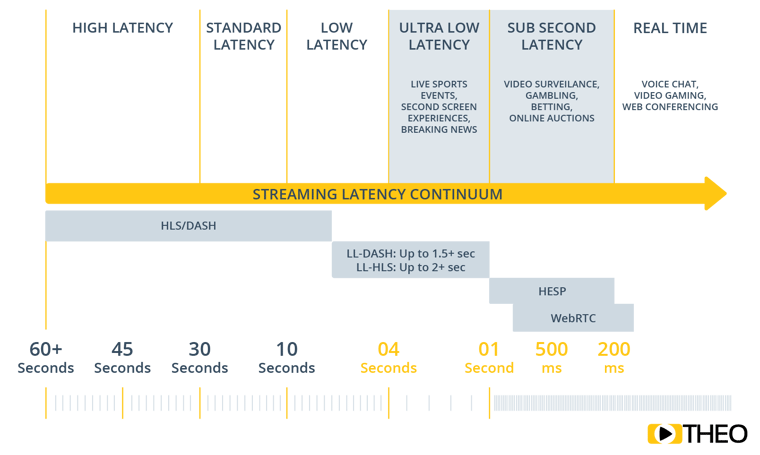

Each streaming protocol has a different glass-to-glass (protocol) latency. Figure 1 gives an impression of the capabilities of the different protocols:

Figure 1 - Streaming Latency Continuum


We see that the traditional DASH and HLS protocols lead to large latencies. These latencies can be reduced by shortening the segments, but the latency remains high because a segment is handled as an atomic piece of information: segments are created, stored and distributed as a whole. LL-DASH and LL-HLS overcome this problem by allowing a segment to be transferred piece-wise. A segment does not need to be completely available before its first chunks or parts can be transferred to the client for playback, which significantly reduces the latency.
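To make the difference between whole-segment and piece-wise delivery concrete, here is a minimal sketch. It assumes a simplified model in which a frame only becomes transferable once the unit that contains it (a full segment, or a chunk/part of one) is complete, and it ignores encoding, network and player buffering. The function name and the example durations are illustrative assumptions, not values from any specification.

```python
def availability_delay_ms(frame_time_ms: int, unit_ms: int) -> int:
    """Time a frame waits until the unit containing it is complete.

    frame_time_ms: capture time of the frame, measured from stream start.
    unit_ms: duration of the atomic delivery unit (segment or chunk).
    """
    # Index of the unit containing the frame, then the time at which it ends.
    unit_end_ms = (frame_time_ms // unit_ms + 1) * unit_ms
    return unit_end_ms - frame_time_ms


# A frame captured 500 ms into the stream (hypothetical durations):
whole_segment = availability_delay_ms(500, 6000)  # 6 s segments
chunked = availability_delay_ms(500, 500)         # 500 ms chunks/parts
print(whole_segment, chunked)
```

In this model the worst-case wait equals the unit duration, which is why shrinking the atomic unit from a segment to a chunk lowers the latency floor even when the segment length stays the same.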

For ultra-low latency, approaches are needed that allow for a continuous flow of images that are transferred as soon as they are available (rather than grouping them in chunks or segments). This can be done using WebRTC and HESP (HESP Webinar & Whitepaper), THEO Technologies’ next generation streaming protocol. HESP uses Chunked Transfer Encoding over HTTP, whereby the images are made available to the player on a per-image basis. That ensures that images are available at the client for playback very shortly after they are generated.

What about zapping time?

Zapping time, the time it takes to start playback or change channels, is also important. For DASH and HLS this is a trade-off with latency, since playback can only start at segment boundaries. That implies that a player needs to choose between waiting for the most recent segment to become available and starting playback of an already available, older segment. If latency is not critical, the second option allows for a very fast startup. If latency is critical, there is a penalty in startup time.
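The trade-off can be sketched with a little arithmetic. The model below is an assumption-laden simplification: segments have a fixed duration, a segment is only usable once complete, and download and decode times are ignored. The function and field names are hypothetical.

```python
def join_options(join_time_ms: int, segment_ms: int):
    """Compare the two startup strategies for a viewer joining a live stream.

    Returns (start_now, wait_for_next), each a dict with the startup wait
    and the resulting distance behind the live edge.
    """
    # How far into the segment currently being produced the viewer joins.
    offset_ms = join_time_ms % segment_ms

    # Option A: play the last complete segment immediately.
    start_now = {
        "startup_wait_ms": 0,
        "latency_ms": segment_ms + offset_ms,
    }
    # Option B: wait for the in-progress segment to complete, then play it.
    wait_for_next = {
        "startup_wait_ms": segment_ms - offset_ms,
        "latency_ms": segment_ms,
    }
    return start_now, wait_for_next


# Viewer joins 14 s into a stream with 6 s segments (hypothetical numbers).
fast_start, low_latency = join_options(14_000, 6_000)
print(fast_start)
print(low_latency)
```

With these numbers, starting immediately costs no wait but leaves the viewer 8 s behind live, while waiting 4 s brings that down to 6 s. Neither option gets below one segment duration in this model.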

WebRTC allows for a shorter zapping time, but is still bound to GOP boundaries.

HESP allows for ultra-low start and channel change times, without compromising on latency. HESP does not rely on segments as the basic unit to start playback. HESP can start playback at any image position. As explained in (reference), HESP uses range requests to tap into the stream of images that is made available for distribution as soon as they are created.

Low latency and fast zapping are fine, but scalability is equally important, especially for video services reaching tens or hundreds of thousands of concurrent viewers. HTTP-based approaches (DASH, LL-DASH, HLS, LL-HLS, HESP) have an edge over WebRTC, since they ensure the highest possible network reach, can tap into a wide range of efficient CDN solutions, and achieve scalability through file servers. WebRTC, on the other hand, relies on active video streaming between server and client and supports far fewer viewers on an edge server than a regular CDN edge cache.

In our next blog post, we will talk about low latency use cases and real-life examples. You can also download the complete version of this topic in our “A Comprehensive Guide to Low Latency” guide here.

Want to talk to us about streaming latency? Contact our THEO experts.
