
Basics of Video Encoding: Everything You Need to Know

by THEOplayer on February 20, 2020

What is video encoding? In simple terms, encoding is the process of compressing raw video content and converting it to a digital format, which in turn makes the video content compatible with different devices and platforms. The main goal of encoding is to compress the content so that it takes up less space. We do this by getting rid of information we don’t need; because the discarded information cannot be recovered, this is referred to as a lossy process. When the content is played back, it is played as an approximation of the original content. Of course, the more information you get rid of, the worse the played-back video will look compared to the original. Video encoding is carried out by codecs, which we will discuss in this post.

Why is Encoding Important?

Video encoding is important because it allows us to transmit video content over the internet more easily. In video streaming, encoding is crucial because compressing the raw video reduces the bandwidth needed to transmit it, while still maintaining a good quality of experience for end viewers. If video content were not compressed, the available bandwidth on the internet would be inadequate to transmit all of it, which would prevent us from deploying widespread, distributed video playback services. The fact that we can stream video on multiple devices in our homes, on the go using mobile, or while video chatting with loved ones across the globe, even with low bandwidth, is owed to video encoding.
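To put a number on why compression matters, the arithmetic below estimates the bitrate of uncompressed 1080p video. It is a sketch under stated assumptions: 8 bits per sample, 30 frames per second and 4:2:0 chroma subsampling (1.5 samples per pixel on average). The 5 Mbit/s comparison figure is an assumed, illustrative streaming bitrate, not a measured one.

```python
def raw_bitrate_bps(width, height, fps, bits_per_sample=8, samples_per_pixel=1.5):
    """Bits per second of uncompressed video.

    samples_per_pixel=1.5 corresponds to 4:2:0 chroma subsampling
    (one luma sample per pixel plus a quarter-resolution Cb and Cr plane).
    """
    return width * height * samples_per_pixel * bits_per_sample * fps

raw = raw_bitrate_bps(1920, 1080, 30)
print(f"{raw / 1e6:.1f} Mbit/s uncompressed")           # 746.5 Mbit/s uncompressed
# Assumed example streaming bitrate of 5 Mbit/s for comparison.
print(f"~{raw / 5e6:.0f}x larger than a 5 Mbit/s stream")  # ~149x larger than a 5 Mbit/s stream
```

Even under these modest assumptions, raw video needs roughly 150 times the bandwidth of a typical compressed stream.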

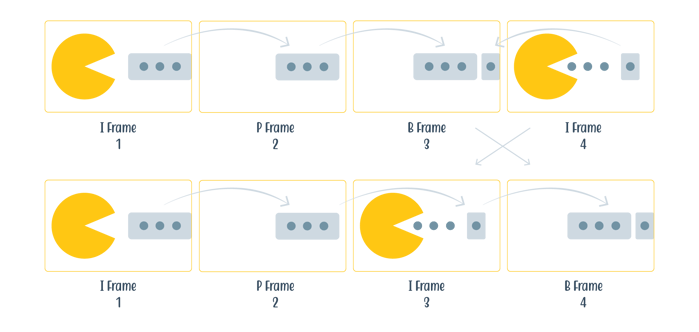

Motion Compensation

In video encoding, motion is very important, because consecutive frames are often very similar. Encoders exploit this with three frame types: I frames (or keyframes), P frames and B frames. An I frame stores the entire image. A P frame (predicted frame) refers back to an earlier frame: when not much has moved or changed since the keyframe, the P frame only needs to describe how blocks of pixels have moved relative to it, plus the small differences that remain. A B frame (bi-directional frame) can refer to both earlier and later frames. I, P and B frames together form groups of pictures (GOPs); in a closed GOP, frames can only refer to other frames in the same group, not to frames outside of it. For frames without a lot of movement, this can save about 90% of the data you would need to store each frame as a regular image (even one compressed losslessly, like a PNG file, which is very big).
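To make "referring to a previous frame" concrete, here is a minimal sketch of block-matching motion estimation, one common way an encoder finds where a block came from. It assumes grayscale frames stored as lists of lists, an exhaustive search window and the sum of absolute differences (SAD) as the matching cost; real encoders use much faster search strategies, sub-pixel precision and rate-distortion costs. The function names are hypothetical.

```python
def sad(cur, ref, bx, by, dx, dy, size):
    """Sum of absolute differences between the block at (bx, by) in the
    current frame and the block displaced by (dx, dy) in the reference frame."""
    total = 0
    for y in range(size):
        for x in range(size):
            total += abs(cur[by + y][bx + x] - ref[by + dy + y][bx + dx + x])
    return total

def find_motion_vector(cur, ref, bx, by, size=4, search=2):
    """Full search for the displacement within +/- search pixels that best
    predicts the block at (bx, by). Assumes the block itself lies inside the frame."""
    h, w = len(ref), len(ref[0])
    best, best_cost = (0, 0), sad(cur, ref, bx, by, 0, 0, size)
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            # Skip candidate blocks that would fall outside the reference frame.
            if not (0 <= bx + dx and bx + dx + size <= w and 0 <= by + dy and by + dy + size <= h):
                continue
            cost = sad(cur, ref, bx, by, dx, dy, size)
            if cost < best_cost:
                best, best_cost = (dx, dy), cost
    return best, best_cost

ref = [[0] * 8 for _ in range(8)]
cur = [[0] * 8 for _ in range(8)]
for y in (2, 3):
    for x in (2, 3):
        ref[y][x] = 200          # bright square in the reference frame
        cur[y + 1][x + 1] = 200  # same square, moved 1 px right and 1 px down
print(find_motion_vector(cur, ref, bx=2, by=2))  # ((-1, -1), 0)
```

Instead of storing the block's 16 pixel values, the encoder can store the vector (-1, -1) plus the residual, which here is zero everywhere.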

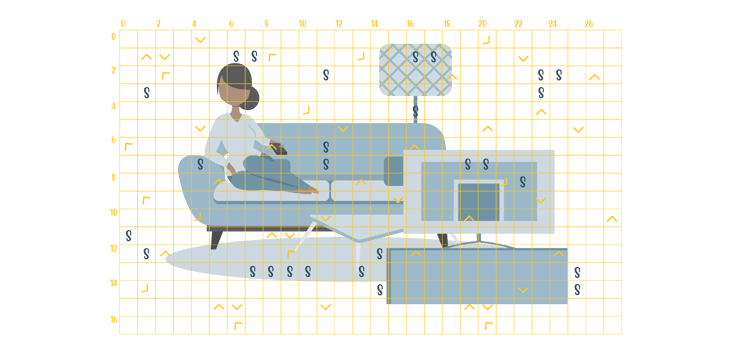

Macroblocks

Within each frame, the image is divided into blocks, called macroblocks in H.264. Each block has its own size, colour and motion information. These blocks are encoded somewhat separately, which makes it possible to parallelize part of the work. H.264 uses fixed macroblocks of 16x16 samples that can be split into partitions as small as 4x4, with residuals transformed in 4x4 or 8x8 blocks; newer codecs allow a wider range of block sizes, including much larger blocks (up to 64x64 in HEVC) and more rectangular shapes. Large blocks are used where there isn't a lot of detail, because coding one large block saves a lot of space compared to coding many small ones.
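The trade-off between large blocks for flat areas and small blocks for detailed areas can be sketched as a recursive quadtree split. This is a simplified illustration, not how any particular codec decides: it assumes a square grayscale image, uses pixel variance as a stand-in for "detail" (real encoders compare rate-distortion costs), and the threshold and function names are hypothetical.

```python
def variance(img, x, y, size):
    """Pixel variance of the size x size block whose top-left corner is (x, y)."""
    vals = [img[y + j][x + i] for j in range(size) for i in range(size)]
    mean = sum(vals) / len(vals)
    return sum((v - mean) ** 2 for v in vals) / len(vals)

def split_blocks(img, x, y, size, min_size=4, threshold=100.0):
    """Recursively split a block into four quadrants while it is detailed
    (variance above threshold) and larger than min_size.
    Returns the leaf blocks as (x, y, size) tuples."""
    if size <= min_size or variance(img, x, y, size) <= threshold:
        return [(x, y, size)]
    half = size // 2
    blocks = []
    for dy in (0, half):
        for dx in (0, half):
            blocks += split_blocks(img, x + dx, y + dy, half, min_size, threshold)
    return blocks

# 16x16 test image: flat grey, except a detailed checkerboard in the top-left 8x8 quadrant.
img = [[50] * 16 for _ in range(16)]
for y in range(8):
    for x in range(8):
        img[y][x] = 255 if (x + y) % 2 else 0
print(split_blocks(img, 0, 0, 16))
# [(0, 0, 4), (4, 0, 4), (0, 4, 4), (4, 4, 4), (8, 0, 8), (0, 8, 8), (8, 8, 8)]
```

The detailed quadrant ends up as four small blocks, while each flat quadrant is coded as one large block.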

Macroblocks consist of multiple components. Some sub-blocks carry the pixel information, with separate blocks for brightness (luma) and colour (chroma). Others carry the motion vectors used for motion compensation relative to a reference frame. Because of this block structure, sharp edges or "blockiness" can become visible at low bitrates, for example in low-bandwidth situations. A common remedy is a filter that smooths out these block edges. It is called an "in-loop" filter because it is part of both the encoding and the decoding process: the encoder applies the same filter as the decoder and uses the filtered frames as references, which keeps the decoded video close to the source material.
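The core idea of a deblocking filter can be shown on a single row of pixels. This is a toy version under simplifying assumptions (one dimension, a fixed block size of 4, and a hypothetical `max_step` threshold); the filters defined in H.264 and HEVC adapt their strength to block types, quantization level and several pixels on each side of the edge. What it shares with them is the principle: small jumps at block boundaries are likely compression artifacts and get smoothed, while large jumps are likely real edges and are left alone.

```python
def deblock_row(row, block_size=4, max_step=20):
    """Smooth small discontinuities at block boundaries in one row of pixels.
    Jumps larger than max_step are treated as real image edges and kept."""
    out = list(row)
    for edge in range(block_size, len(row), block_size):
        p, q = row[edge - 1], row[edge]  # pixels on either side of the boundary
        step = q - p
        if 0 < abs(step) <= max_step:
            delta = int(step / 4)        # move each pixel a quarter of the jump, toward the other
            out[edge - 1] = p + delta
            out[edge] = q - delta
    return out

row = [10, 10, 10, 10, 22, 22, 22, 22, 200, 200, 200, 200]
print(deblock_row(row))
# [10, 10, 10, 13, 19, 22, 22, 22, 200, 200, 200, 200]
```

The 10 to 22 jump at the first boundary is softened, while the 22 to 200 jump, which looks like a real edge, is preserved.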

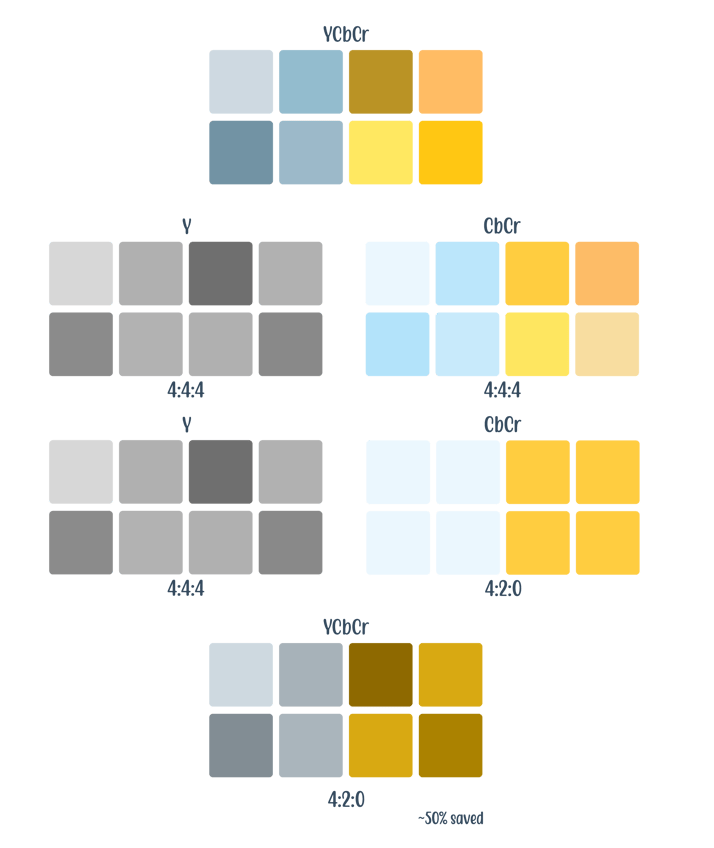

Chroma Subsampling

In most cases, we represent a colour with red, green and blue (RGB) channels. However, the human eye is much more sensitive to changes in brightness than to changes in colour, especially in moving images. Therefore, video uses a different colour space called YCbCr, which separates brightness from colour. This colour space is divided into:

- Y (luminance or luma)

- Cb (blue-difference chroma)

- Cr (red-difference chroma)

- luma = brightness, chroma = color
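Converting a pixel from RGB to YCbCr is a weighted sum of its channels. The sketch below assumes 8-bit values and uses the full-range BT.601 coefficients (the variant used by JPEG/JFIF); broadcast and streaming video usually store a limited range (16 to 235 for luma) and HD content typically uses BT.709 coefficients instead, so treat this as an illustration of the idea rather than the exact conversion any given pipeline uses.

```python
def clamp8(v):
    """Round to the nearest integer and clamp to the 8-bit range 0..255."""
    return max(0, min(255, round(v)))

def rgb_to_ycbcr(r, g, b):
    """Full-range BT.601 RGB -> YCbCr for 8-bit values."""
    y  = 0.299 * r + 0.587 * g + 0.114 * b          # luma: weighted brightness
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b  # blue-difference chroma
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b  # red-difference chroma
    return clamp8(y), clamp8(cb), clamp8(cr)

print(rgb_to_ycbcr(255, 255, 255))  # (255, 128, 128): white carries no colour difference
print(rgb_to_ycbcr(255, 0, 0))      # (76, 85, 255)
```

Grey tones all map to Cb = Cr = 128, so for them only the Y channel carries information.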

In chroma subsampling, we split images into their Y channel and their CbCr channels, and store the CbCr channels at a lower resolution. The scheme is described with a ratio J:a:b, which refers to a region of pixels two rows high and J pixels wide (usually a 4x2 grid):

J: number of pixels in each row of the region (i.e. the width of the grid)

a: number of chroma (CbCr) samples in the first row

b: number of chroma (CbCr) samples that change in the second row, compared to the first row
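Counting the samples in the J-pixel-wide, two-row reference region shows where the savings of each scheme come from. This sketch assumes every pixel keeps its own luma sample and that Cb and Cr are subsampled identically; the percentages describe raw (uncompressed) sample data, and the effect on the final compressed bitrate can differ.

```python
def samples_per_pixel(j, a, b):
    """Average number of stored samples per pixel for a J:a:b scheme,
    counted over a region J pixels wide and 2 rows high."""
    luma = 2 * j            # every pixel in both rows keeps its own Y sample
    chroma = 2 * (a + b)    # Cb and Cr each have a + b samples in the region
    return (luma + chroma) / (2 * j)

full = samples_per_pixel(4, 4, 4)
for scheme in [(4, 4, 4), (4, 2, 2), (4, 2, 0)]:
    spp = samples_per_pixel(*scheme)
    print(scheme, spp, f"{1 - spp / full:.0%} smaller")
# (4, 4, 4) 3.0 0% smaller
# (4, 2, 2) 2.0 33% smaller
# (4, 2, 0) 1.5 50% smaller
```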

In video, 4:4:4 keeps the full colour (chroma) resolution. Reducing it to 4:2:2 saves about a third of the raw sample data, and reducing it further to 4:2:0 saves 50%. In video streaming (think of your Netflix and Hulu TV shows), 4:2:0 is by far the most widely used. In the video editing space, however, higher chroma resolutions such as 4:4:4 (or 4:2:2) are more common.
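One simple way to produce 4:2:0 chroma is to average each 2x2 block of a Cb or Cr plane into a single sample. This is a minimal sketch assuming an integer chroma plane with even width and height; real converters use better filters and a defined chroma sample position ("siting"), so plain averaging is only the most basic option.

```python
def subsample_420(plane):
    """Halve a chroma plane in both directions by averaging each 2x2 block
    (rounded to the nearest integer). Assumes even width and height."""
    h, w = len(plane), len(plane[0])
    return [
        [
            (plane[y][x] + plane[y][x + 1] + plane[y + 1][x] + plane[y + 1][x + 1] + 2) // 4
            for x in range(0, w, 2)
        ]
        for y in range(0, h, 2)
    ]

cb = [
    [100, 102, 200, 200],
    [ 98, 100, 200, 204],
]
print(subsample_420(cb))  # [[100, 201]]
```

Eight chroma samples become two, while the luma plane stays at full resolution.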

Quantization

Encoding saves space not only in the image components of a video, but also in its audio. Audio starts out as a continuous analog signal, which has to be digitised before it can be encoded. Once the audio is digitised, it can be broken down into multiple sinusoids (sine waves), each of which represents an audio frequency. To save space, we can discard frequencies that we do not need, such as those listeners would barely notice.

An image can be treated in a similar way: its pixel values also form a signal that can be broken down into frequency components, where low frequencies describe smooth areas and high frequencies describe fine detail. Just like with audio, we can then reduce or remove some of these frequencies. Removing frequencies does lead to a loss of detail, but a fair amount can be removed without it being noticeable to the end viewer. Reducing the precision of these frequency components, so that many of them become zero, is known as quantization.
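In practice, image and video codecs get the frequency components by applying a transform such as the discrete cosine transform (DCT) to small blocks, and then quantize the resulting coefficients. The sketch below shows this on a single row of 8 pixels. It assumes an orthonormal DCT-II and one uniform step size for all coefficients; real codecs work on 2D blocks with integer approximations of the DCT, use per-frequency quantization matrices, and entropy-code the result afterwards. The function names are illustrative.

```python
import math

def dct(x):
    """Orthonormal DCT-II: turn n samples into n frequency coefficients."""
    n = len(x)
    out = []
    for k in range(n):
        s = sum(x[i] * math.cos(math.pi * (i + 0.5) * k / n) for i in range(n))
        out.append((math.sqrt(1 / n) if k == 0 else math.sqrt(2 / n)) * s)
    return out

def idct(c):
    """Inverse of dct() (orthonormal DCT-III)."""
    n = len(c)
    return [
        sum((math.sqrt(1 / n) if k == 0 else math.sqrt(2 / n)) * c[k]
            * math.cos(math.pi * (i + 0.5) * k / n) for k in range(n))
        for i in range(n)
    ]

def quantize(coeffs, step):
    # Dividing by the step size and rounding is where information is lost:
    # small coefficients (mostly high frequencies) become 0.
    return [round(c / step) for c in coeffs]

def dequantize(levels, step):
    return [q * step for q in levels]

row = [52, 55, 61, 66, 70, 61, 64, 73]   # one row of 8 pixel values
levels = quantize(dct(row), step=10)
print(levels)                            # the highest frequencies quantize to 0
print([round(v) for v in idct(dequantize(levels, step=10))])  # approximation of row
```

A larger step size zeroes more coefficients and saves more space, at the cost of a less accurate reconstruction.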

What are Codecs?

Codecs are essentially standards of video content compression. A codec is made up of two components: an encoder that compresses the content, and a decoder that decompresses the video content and plays back an approximation of the original. An enCOder and a DECoder, hence the name codec.

Audio Codecs

For audio, AAC is seen as the de facto standard in the industry. AAC is supported virtually everywhere and has the largest market share. Other audio codecs include Opus, FLAC and Dolby Digital. Opus excels at voice and is also used by YouTube, seemingly the only large service using it, though YouTube still falls back to AAC. Dolby Digital, also known as AC-3, is still sometimes used for surround sound, as some older surround sound systems don’t play AAC.

Video Codecs

H.264/AVC:

H.264, also called AVC (Advanced Video Coding) or MPEG-4 AVC, was standardised in 2003. The codec was developed by MPEG and ITU-T VCEG in a partnership known as the JVT (Joint Video Team). It is supported virtually everywhere, on any device, while still providing good video quality, and it is seen as the baseline against which newer codecs are compared. Its royalty situation is also relatively straightforward.

H.265/HEVC

H.265, also known as HEVC (High Efficiency Video Coding), is a standard by MPEG and ITU-T VCEG (under a partnership known as JCT-VC). It was first standardised in 2013 and was extended from 2014 through 2016. The goal of H.265 is to reduce the bitrate by 50% compared to H.264 while keeping the same quality.

A Netflix study showed improvements that ranged from 35-53% when comparing it to H.264, and improvements of 17-21% when comparing it to VP9.

Keep in mind that the encoder implementation, the content and other factors matter a lot in these kinds of comparisons.

These improvements were achieved by refining techniques that already existed in H.264. Essentially, H.265 compresses content into smaller files than H.264 can, which in turn decreases the bandwidth needed to play the video. Although this is all great news, H.265 is still far less widely used than H.264. Why? The main issue is the uncertainty around licensing and royalties.

VP9:

VP9, the successor of VP8, was developed by Google, which had acquired VP8's original developer, On2 Technologies. VP9 was finalised in 2013. The codec offers compression comparable to HEVC, but no royalties are required. Where those in the industry run into difficulties with VP9 is that, while it is supported in all major browsers and on Android devices, at the time of writing it is not supported by Apple or any of Apple’s devices, which instead support H.264 and H.265.

AV1:

In 2015, the Alliance for Open Media (AOMedia) was formed. At the time, Google was working on VP10, Mozilla and Xiph were working on Daala, and Cisco was working on Thor. Instead of creating three separate codecs, and frustrated by the limitations that royalties impose, they decided to join forces, and AV1 was created. Their goal was to achieve 30% better compression efficiency than VP9 while, just like VP9, remaining royalty free. All AOMedia members offered up their related patents to contribute to a patent defence programme.

The AV1 specification has been finalised, but work around it is still ongoing. The codec is starting to be adopted by big industry players, and that adoption looks set to continue.

Future of Codecs

The current state of codecs seems relatively simple: AAC for audio and H.264 for video are necessary for broad reach. H.264 serves as a fallback for older devices, VP9 has no iOS support, and AV1 still has a way to go before it can be put into regular production use. So in cases where one codec won’t suffice, what is the solution? A multi-codec approach is a must. For example, Twitch, today’s most popular live video streaming service, currently uses H.264 and VP9, but is hoping to switch to AV1 in the future.
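A multi-codec setup needs a rule for which rendition a given device receives. The sketch below assumes a hypothetical preference order (most efficient first, H.264 last as the universal fallback) and takes the device's decodable codecs as a plain set; in a browser player that information would come from a capability check such as `MediaSource.isTypeSupported()`. The codec labels and function name are illustrative, not part of any specific player API.

```python
# Hypothetical preference order: most efficient codec first, H.264 last as the fallback.
PREFERENCE = ["av1", "hevc", "vp9", "h264"]

def pick_codec(device_supports, available_renditions):
    """Return the first codec in PREFERENCE that the service has encoded
    and the device can decode."""
    for codec in PREFERENCE:
        if codec in available_renditions and codec in device_supports:
            return codec
    raise ValueError("no codec in common between service and device")

ladder = {"h264", "vp9", "av1"}               # renditions the service has encoded
print(pick_codec({"h264", "hevc"}, ladder))   # h264: no VP9 or AV1 support on this device
print(pick_codec({"h264", "vp9"}, ladder))    # vp9
print(pick_codec({"h264", "vp9", "av1"}, ladder))  # av1
```

Keeping H.264 in every encoding ladder is what guarantees that the loop always finds a match on older devices.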

Another example is Netflix, which recently announced that it has started streaming AV1 in its Android app, claiming a 20% improvement in compression efficiency over VP9. However, it is only enabled where bandwidth is much more expensive than the extra CPU power needed for decoding, for example when a viewer is streaming over 4G. Netflix aims to use AV1 on all platforms in the future.

VVC

Versatile Video Coding, or VVC, is a new standard developed by MPEG together with ITU-T VCEG. The goal of VVC is to achieve a 50% bitrate reduction compared to HEVC at the same quality, along with other improvements. In reality, however, most are expecting a 30-40% improvement over HEVC. VVC will provide better support for gaming and screen sharing, 360º video and resolution switching within a video.

EVC

Essential Video Coding, or EVC, is another new MPEG standard, with the goal of achieving the same or similar efficiency as HEVC. EVC is intended to be suitable for real-time encoding and decoding: it targets both offline encoding for VOD streaming and live OTT streaming, and aims to be a “licensing-friendly” codec.

Hybrid Codecs

A hybrid codec is essentially a codec which works on top of another codec. The process usually follows these steps:

- take an input video

- use a proprietary downscaler on the video (e.g. go from 1080p to 480p)

- encode the downscaled video with a regular codec

- decode the video on the player

- upscale the decoded image using a proprietary upscaler

This can often result in a 20-40% bitrate reduction.
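The steps above can be sketched as a pipeline in which the base codec only ever sees the downscaled frame. Every function here is a hypothetical stand-in: real hybrid codecs use proprietary (often learned) scalers and a real encoder such as H.264, whereas this sketch uses block averaging, nearest-neighbour upscaling and simple rounding just to show where each step sits.

```python
def downscale(frame, factor=2):
    """Stand-in for the proprietary downscaler: average factor x factor blocks."""
    h, w = len(frame), len(frame[0])
    return [
        [sum(frame[y + j][x + i] for j in range(factor) for i in range(factor)) / factor ** 2
         for x in range(0, w, factor)]
        for y in range(0, h, factor)
    ]

def base_codec(frame, step=8):
    """Stand-in for encoding and decoding with the underlying codec:
    here just coarse rounding of each sample (lossy)."""
    return [[round(v / step) * step for v in row] for row in frame]

def upscale(frame, factor=2):
    """Stand-in for the proprietary upscaler: nearest-neighbour repetition."""
    return [[v for v in row for _ in range(factor)] for row in frame for _ in range(factor)]

def hybrid_pipeline(frame, factor=2):
    small = downscale(frame, factor)   # downscale before encoding
    decoded = base_codec(small)        # encode + decode at the lower resolution
    return upscale(decoded, factor)    # upscale on the player

frame = [
    [10, 10, 50, 50],
    [10, 10, 50, 50],
    [90, 90, 130, 130],
    [90, 90, 130, 130],
]
print(hybrid_pipeline(frame))
# [[8, 8, 48, 48], [8, 8, 48, 48], [88, 88, 128, 128], [88, 88, 128, 128]]
```

The bitrate saving comes from the base codec processing only a quarter of the samples; the quality of the final image then depends heavily on how good the upscaler is.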

P+ (Previously known as PERSEUS Plus)

P+, created by V-Nova, is actively used today. For example, using this hybrid codec on top of H.264 can give a 30-50% bandwidth reduction. P+ has the advantage of delivering savings on the scale of HEVC without having to redo the whole encoding pipeline, and it also has hardware decoding on iOS and Android. That said, decoding P+ on the web can be challenging, drains the battery, and does not currently work with DRM, although that is expected to change. The technology behind this hybrid codec is currently being standardised by MPEG as LCEVC (Low Complexity Enhancement Video Coding), which should work with DRM once integrated into hardware.

ENHANCEplayer

ENHANCEplayer is a joint, ongoing innovation project between Artomatix and THEO Technologies. The hybrid codec uses Artomatix Enhance AI to upscale the image resolution, remove compression artifacts and remove noise. In essence, this means "super-resolution" generation with a neural network (NN): the NN is trained to enhance the image and add back details that were lost during compression. The goal is to process each frame within 40 ms, which is what a frame rate of 25 fps requires (1000 ms / 25 = 40 ms). The biggest challenges are fidelity, meaning that the enhanced image should match the image before compression, and making it work while DRM is in play.

Do you have more questions about video encoding or want to know more about what’s to come? Get in contact with one of our THEO experts to get personalised information and advice.
