
HTTP Live Streaming (HLS)

by THEOplayer on June 16, 2020

HLS, or HTTP Live Streaming, is an adaptive HTTP-based protocol that Apple created and released in 2009 to solve the problem of efficiently delivering live video and VOD to viewers’ devices, especially Apple devices. One of the main focus points was scaling, a problem faced by protocols such as RTMP (used by Flash) and RTSP. Over the past years, HLS has become one of the most popular streaming protocols, for both compatibility and quality of experience, and it is widely supported on major devices, browsers and platforms. In this blog post we discuss exactly how HLS works, the adaptive bitrate process it uses, the latency it introduces, why the spec is so widely used across the industry, and how we can combat that latency.

How does HLS work?

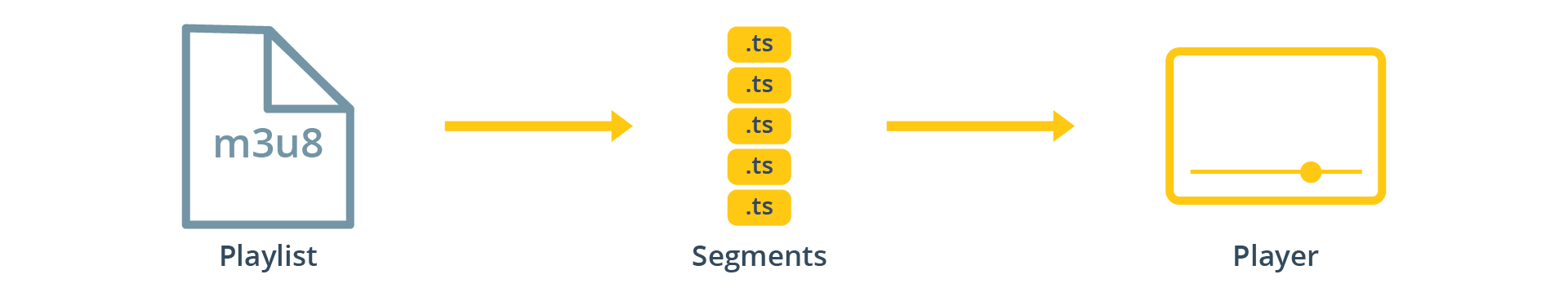

Because persistent connections caused some of the biggest scaling issues for RTMP, HLS removes the need for an open connection and instead uses cacheable files served over HTTP. At a high level, HLS is fairly simple: a video stream is split into small media segments, so instead of sending out one continuous stream, the server creates small files of a set length. The maximum length of such a segment is called the Target Duration. A player then downloads these segments one after the other and plays them in the order given by a playlist.
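To make segments and the Target Duration concrete, here is a minimal Python sketch that writes a VOD media playlist for a list of already-cut segments. The helper names and the segment file names (`segment0.ts`, `segment1.ts` and so on) are hypothetical; the tags themselves (`#EXTM3U`, `#EXT-X-TARGETDURATION`, `#EXTINF`, `#EXT-X-ENDLIST`) come from the HLS specification (RFC 8216), which requires every segment duration, rounded to the nearest integer, to be no greater than the Target Duration.

```python
import math


def target_duration(durations: list[float]) -> int:
    """Smallest valid EXT-X-TARGETDURATION for the given segment durations."""
    # Each EXTINF duration, rounded to the nearest integer, must not exceed
    # the target duration. floor(d + 0.5) rounds half up (Python's round()
    # would use banker's rounding).
    return max(math.floor(d + 0.5) for d in durations)


def media_playlist(durations: list[float], name: str = "segment") -> str:
    """Build a simple VOD media playlist; `name` is a hypothetical file prefix."""
    lines = [
        "#EXTM3U",
        "#EXT-X-VERSION:3",  # version 3 allows decimal EXTINF durations
        f"#EXT-X-TARGETDURATION:{target_duration(durations)}",
        "#EXT-X-MEDIA-SEQUENCE:0",
    ]
    for i, d in enumerate(durations):
        lines.append(f"#EXTINF:{d:.3f},")  # duration of the segment that follows
        lines.append(f"{name}{i}.ts")      # segment URI
    lines.append("#EXT-X-ENDLIST")  # VOD: no more segments will be added
    return "\n".join(lines) + "\n"


print(media_playlist([6.0, 6.0, 5.5]))
```

For a live stream the server would omit `#EXT-X-ENDLIST` and keep appending `#EXTINF` entries as new segments are produced.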

Initially, the container format for HLS was MPEG-2 Transport Stream (MPEG-TS), but in 2016 support was added for fragmented MP4 (fMP4), which today is the preferred format for HTTP-based streaming and is also used by MPEG-DASH and Microsoft Smooth Streaming.
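As a rough illustration of how the two container formats differ on the wire, the following sketch guesses a segment’s container from its first bytes. MPEG-2 TS consists of 188-byte packets that each begin with the sync byte `0x47`, while fMP4 is a sequence of ISO base media file format boxes, each starting with a 4-byte size followed by a 4-byte type such as `styp` or `moof`. The function name and the set of box types checked are assumptions; this is a heuristic, not a full parser.

```python
def sniff_container(data: bytes) -> str:
    """Heuristically classify a media segment as MPEG-2 TS or fMP4."""
    # MPEG-2 TS: fixed 188-byte packets, each starting with sync byte 0x47.
    if len(data) >= 188 and data[0] == 0x47:
        # If a second packet is present, its first byte must also be 0x47.
        if len(data) < 189 or data[188] == 0x47:
            return "mpeg2-ts"
    # fMP4: the first box's type sits in bytes 4..8, after its 32-bit size.
    if len(data) >= 8 and data[4:8] in (b"ftyp", b"styp", b"moof", b"sidx"):
        return "fmp4"
    return "unknown"


ts_segment = bytes([0x47]) + bytes(187) + bytes([0x47]) + bytes(187)
fmp4_segment = b"\x00\x00\x00\x18styp" + bytes(16)
print(sniff_container(ts_segment), sniff_container(fmp4_segment))
```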

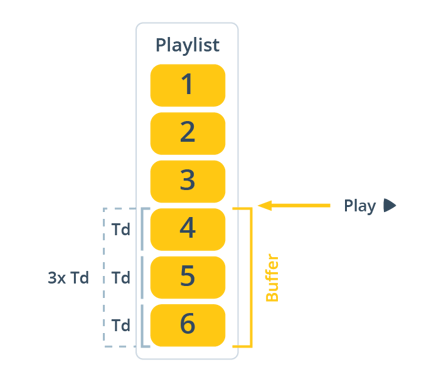

Fig. 1 - Transmission of segments to the player

For players to know which segment to download, HLS uses a Playlist file that lists the segments in order. For live streams, new segments are appended to the end of the Playlist file. Each time the player reloads the Playlist (the protocol dictates it should do so roughly once every Target Duration), it sees the newly listed segments and can download and play them.
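The live-playlist mechanism can be sketched in Python as follows. The sketch assumes a plain-text media playlist and uses `#EXT-X-MEDIA-SEQUENCE` (the sequence number of the first segment listed) to work out which segments appeared since the previous reload. The function names and example playlists are hypothetical, and a real player would also handle tags such as `#EXT-X-ENDLIST` and discontinuities.

```python
def parse_media_playlist(text: str) -> tuple[int, int, list[str]]:
    """Return (target_duration, media_sequence, segment_uris) for a media playlist."""
    target, sequence, uris = 0, 0, []
    for raw in text.splitlines():
        line = raw.strip()
        if line.startswith("#EXT-X-TARGETDURATION:"):
            target = int(line.split(":", 1)[1])
        elif line.startswith("#EXT-X-MEDIA-SEQUENCE:"):
            sequence = int(line.split(":", 1)[1])
        elif line and not line.startswith("#"):
            uris.append(line)  # a non-empty line that is not a tag is a segment URI
    return target, sequence, uris


def new_segments(old_text: str, new_text: str) -> list[str]:
    """Return the segment URIs in new_text that were not listed in old_text."""
    _, old_seq, old_uris = parse_media_playlist(old_text)
    _, new_seq, new_uris = parse_media_playlist(new_text)
    # Segment i in a playlist has sequence number media_sequence + i.
    last_seen = old_seq + len(old_uris) - 1
    first_new = last_seen + 1 - new_seq  # index of the first unseen segment
    return new_uris[max(first_new, 0):]


OLD = """#EXTM3U
#EXT-X-TARGETDURATION:6
#EXT-X-MEDIA-SEQUENCE:10
#EXTINF:6.0,
s10.ts
#EXTINF:6.0,
s11.ts
#EXTINF:6.0,
s12.ts
"""

# About one Target Duration later: s10 has been dropped and s13 appended.
NEW = """#EXTM3U
#EXT-X-TARGETDURATION:6
#EXT-X-MEDIA-SEQUENCE:11
#EXTINF:6.0,
s11.ts
#EXTINF:6.0,
s12.ts
#EXTINF:6.0,
s13.ts
"""

print(new_segments(OLD, NEW))  # ['s13.ts']
```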

HLS achieves scalability by delivering streams through a Content Delivery Network (CDN) or even ordinary web servers. CDNs can absorb sudden spikes in viewers, for example during a live sporting event. This was not the case for streaming protocols like RTMP, for which CDN support is declining quickly.

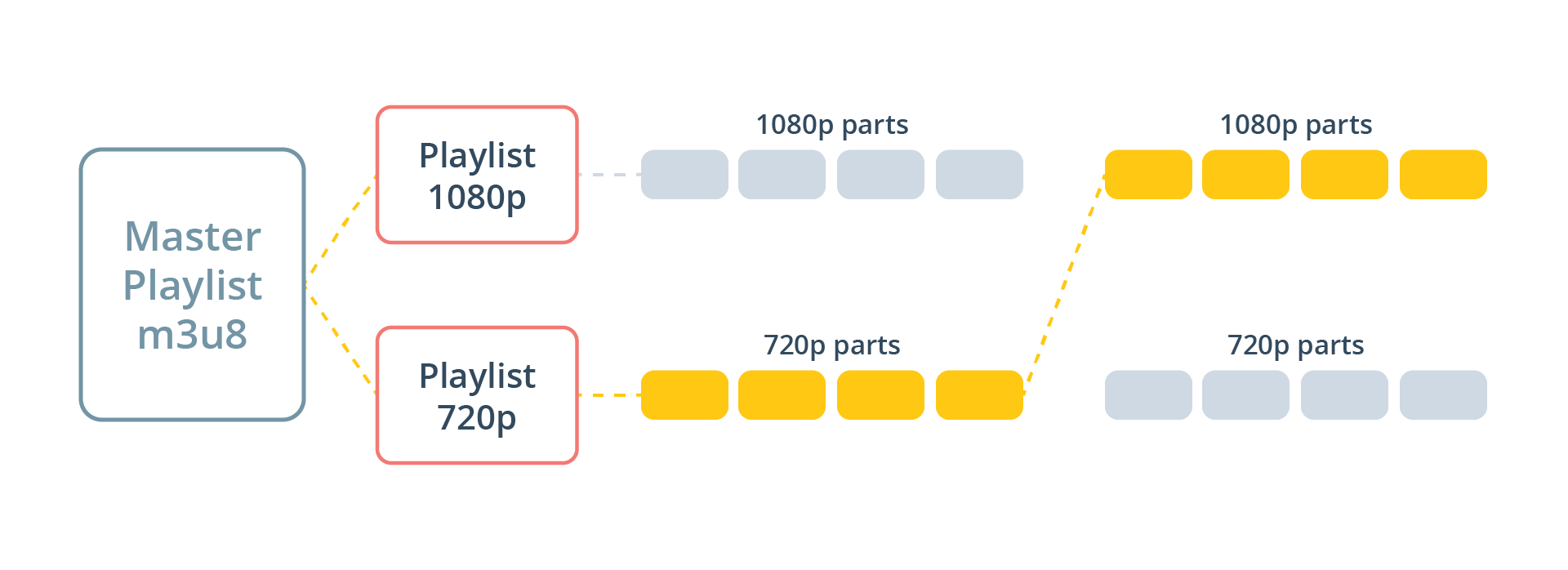

ABR Switching and HLS

HLS supports adaptive bitrate (ABR) switching, which lets the player deliver the best quality the viewer’s internet connection allows and switch bitrates at any time. To enable this, HLS groups Playlists in a Master Playlist that links to several variant streams, so a player can choose the stream whose bitrate and resolution best suit its network and device. Having multiple streams available prevents interruptions and buffering when the player needs to adjust to a change in bandwidth, adapting playback to each viewer’s circumstances.
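A minimal sketch of throughput-based variant selection, assuming the player has already measured its download throughput in bits per second. It reads the `BANDWIDTH` attribute of each `#EXT-X-STREAM-INF` tag in a Master Playlist and picks the highest-bitrate variant that fits within a safety margin of that throughput. The 0.8 safety factor, the function names and the example playlist are assumptions for illustration; real players typically combine throughput with buffer level and other signals.

```python
import re


def parse_master_playlist(text: str) -> list[tuple[int, str]]:
    """Return (bandwidth_bps, uri) for each variant stream in a Master Playlist."""
    variants, pending_bw = [], None
    for raw in text.splitlines():
        line = raw.strip()
        if line.startswith("#EXT-X-STREAM-INF:"):
            # [:,] prefix avoids matching AVERAGE-BANDWIDTH by mistake.
            m = re.search(r"[:,]BANDWIDTH=(\d+)", line)
            pending_bw = int(m.group(1)) if m else None
        elif line and not line.startswith("#") and pending_bw is not None:
            variants.append((pending_bw, line))  # URI line follows its STREAM-INF tag
            pending_bw = None
    return variants


def pick_variant(variants: list[tuple[int, str]], measured_bps: float,
                 safety: float = 0.8) -> str:
    """Choose the highest-bandwidth variant within safety * measured throughput."""
    budget = measured_bps * safety
    fitting = [v for v in variants if v[0] <= budget]
    if fitting:
        return max(fitting, key=lambda v: v[0])[1]
    return min(variants, key=lambda v: v[0])[1]  # nothing fits: lowest bitrate


MASTER = """#EXTM3U
#EXT-X-STREAM-INF:BANDWIDTH=800000,RESOLUTION=640x360
low/index.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=2500000,AVERAGE-BANDWIDTH=2000000,RESOLUTION=1280x720
mid/index.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=5000000,RESOLUTION=1920x1080
high/index.m3u8
"""

variants = parse_master_playlist(MASTER)
print(pick_variant(variants, 4_000_000))  # mid/index.m3u8
```

Re-running `pick_variant` as throughput estimates change is what produces the switching between variants shown in Fig. 2.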

Apple provides General Authoring Requirements and suggested encoding targets for HLS streams in its HLS Authoring Specification for Apple Devices.

Fig. 2 - ABR switching between HLS manifests based on the network and device.

What Causes Latency with HLS?

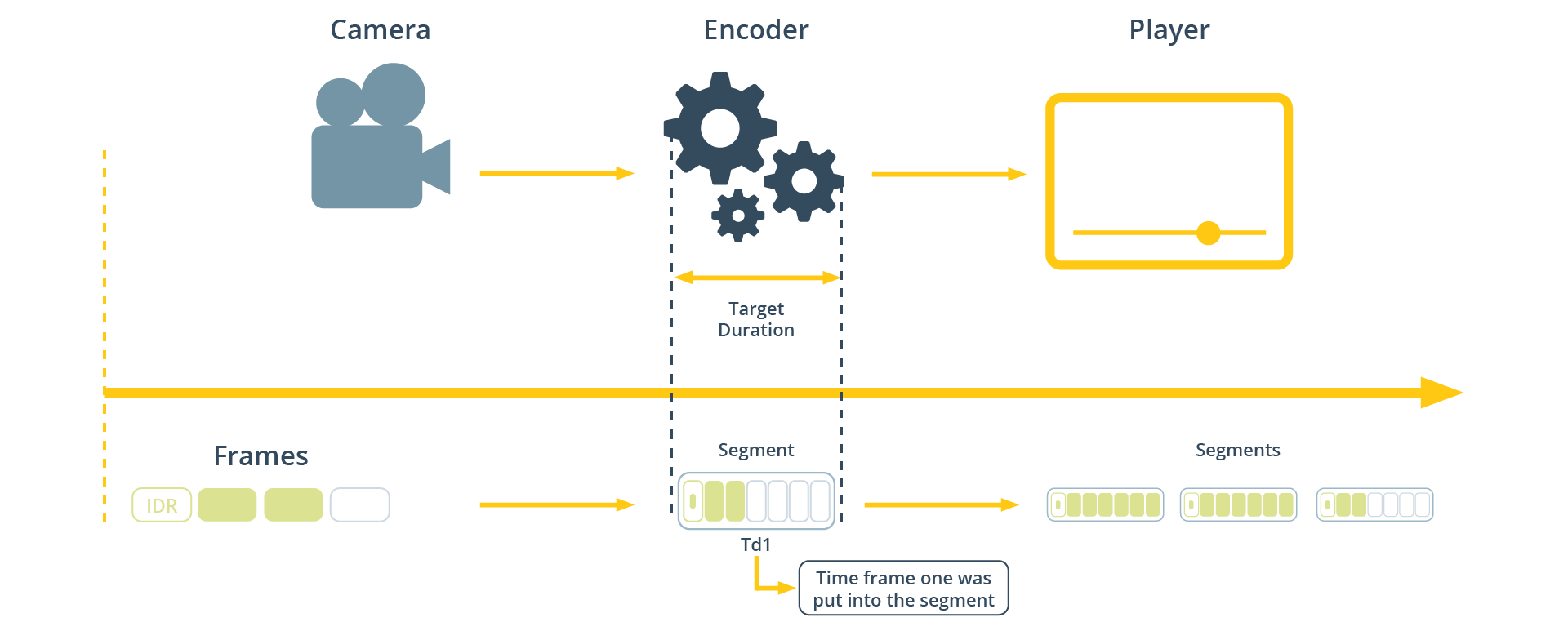

The latency introduced by HLS is tied to the Target Duration. Before a streaming server can list a new segment in a playlist, that segment must be created. The server therefore needs to buffer a segment of ‘Target Duration’ length before publishing it. In the worst case, the first frame a player can download is already ‘Target Duration’ seconds old.

Fig. 3 - Segments generation and Target Duration

Segments usually last between 2 and 6 seconds (until June 2016, the recommended duration was 10 seconds). Most streaming protocols, HLS included, call for a healthy buffer of about three segments, with a fourth segment often being downloaded as well. This buffer provides robustness in case of network or server issues. Because each segment has to be encoded, packaged, listed in the playlist, downloaded and added to the player’s buffer as a whole, this often results in 10 to 30 seconds of latency, and that is before counting any additional encoding, first-mile, distribution and network delays. For more on the full architecture of HLS, see Apple’s HTTP Live Streaming overview.
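The 10 to 30 second range can be reproduced with a back-of-the-envelope model. This sketch assumes, as a simplification, that the server holds back one full segment before listing it (the worst case described above) and that the player keeps three segments buffered plus a fourth in flight before playback starts, which makes latency roughly five Target Durations. The function and its parameters are hypothetical, and encoding, first-mile, distribution and network delays are ignored.

```python
def estimate_latency(target_duration: float, buffered_segments: int = 3,
                     in_flight_segments: int = 1) -> float:
    """Rough latency (seconds) contributed by segmenting and player buffering.

    Simplified model: 1 segment being produced on the server, plus the
    segments the player buffers, plus the segment(s) still downloading.
    """
    segments = 1 + buffered_segments + in_flight_segments
    return segments * target_duration


for td in (2, 6):
    print(f"{td} s segments -> ~{estimate_latency(td):.0f} s latency")
# 2 s segments -> ~10 s latency
# 6 s segments -> ~30 s latency
```

Shrinking either the segment duration or the number of buffered segments is what low-latency approaches build on.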

Why Should You Use HLS?

HLS is a good choice whenever quality of experience for the viewer is the top priority. Because the spec is adopted across so many devices, browsers and platforms, its compatibility makes it the go-to protocol for much of the industry. HLS is widely supported on iOS, Android, Google Chrome, Safari, Microsoft Edge, Linux, Windows and smart TV platforms.

How Can We Combat Latency?

In the HLS spec, smooth, scalable playback is prioritised over low latency. Because HLS delivers the stream as cacheable, segment-sized files over HTTP, it introduces significant latency, and as HLS became more widely adopted, the market began to demand not only scalability but also lower latency. Strategies and improvements were proposed to combat latency, and the industry actively looked for ways to deliver video in real time. In our next blog post, we will discuss how the need for lower latency and near “real-time” video streaming led the industry to Low Latency HLS.

