The media industry is constantly pushing to innovate and improve the viewer experience in order to attract customers and tie them to its services. This is, however, hampered by technical hurdles such as high latency, long video start times and bandwidth constraints. The High Efficiency Streaming Protocol (HESP) makes a massive leap forward possible. The new protocol enables streaming services to be delivered at scale with significantly reduced bandwidth and sub-second latency. These improvements are complemented by a significantly reduced zapping time, pushing viewer experience and engagement to the next level.

A Continuous State of Flux

Media used to be simple: you had a contract with a telco or cable MSO, which ran a TV signal to your house over multicast or broadcast. To innovate, reach retail devices and better engage audiences, service providers adopted HTTP Adaptive Streaming (HAS) protocols with adaptive bitrate (ABR) capabilities. As a result, the media experience on those devices took a step backwards compared to broadcast delivery in terms of latency and zapping times, and a number of technical hurdles around scalability emerged.

With the rise of OTT-only services and cord cutting, all streaming services are battling for the attention of their audiences. The strategy behind most approaches remains the same: deliver an impeccable viewer experience and engage viewers to tie them to the service, preventing them from churning to an army of alternatives.

Current streaming services are, however, struggling with a number of industry challenges. One such challenge became very apparent during the 2018 FIFA World Cup. High latencies for streams delivered using HAS protocols spoiled the experience for viewers: neighbours watching on broadcast services were shouting loudly, and push notifications and tweets were arriving, before the start of an attack was even visible to some viewers.

Another common problem, and a clear area where HAS-based services need to catch up with broadcast, is that of long zapping and buffering times. Whereas broadcast systems are often able to pick up the multicast signal in a fraction of a second and continue playing without a glitch or hiccup, loading times for online unicast streams are significantly higher. Customers of traditional pay-TV service providers increasingly expect to access those services on a wide range of retail devices rather than on operator-provided set-top boxes. These retail devices require service providers to adopt HAS protocols, which results in a significant step back in terms of the video delivery user experience.

Besides these obvious drawbacks for the user experience, existing technologies pose a few other important challenges. In an attempt to attract viewers, some streaming services have started to offer higher-quality video, boasting full HD and 4K streaming at a time when most services are still delivering 720p streams. However, the cost of such business decisions is not to be underestimated. With 1080p frames containing 2.25 times as many pixels as 720p, and 4K (3840×2160) frames 9 times as many, the required bandwidth is soaring to new heights. As a direct result, the cost of delivery is increasing significantly.
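As a quick check of the pixel-count arithmetic behind these bandwidth concerns, the ratios can be computed directly. This is a minimal sketch that assumes the standard 16:9 frame sizes; the function name and the table of resolutions are illustrative and do not come from any HESP tooling. Encoded bitrate does not scale strictly linearly with pixel count, so the ratios indicate the trend rather than exact bandwidth requirements.

```python
# Standard 16:9 frame sizes (width, height) in pixels.
RESOLUTIONS = {
    "720p": (1280, 720),
    "1080p": (1920, 1080),
    "4K UHD": (3840, 2160),
}


def pixel_ratio(name: str, baseline: str = "720p") -> float:
    """Return how many times more pixels `name` has than `baseline`."""
    width, height = RESOLUTIONS[name]
    base_width, base_height = RESOLUTIONS[baseline]
    return (width * height) / (base_width * base_height)


if __name__ == "__main__":
    for name in RESOLUTIONS:
        print(f"{name}: {pixel_ratio(name):.2f}x the pixels of 720p")
```

Under these assumptions, 1080p comes out at 2.25 times and 4K UHD at 9 times the pixel count of 720p.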

This problem is aggravated as streaming services grow their audiences and media consumption increases significantly. According to a Cisco study, online video is expected to account for more than 82% of consumer internet traffic by 2022. The one advantage HAS protocols can leverage is the use of standard HTTP-based CDNs to deliver media at scale. The egress cost for media services on these CDNs will, however, increase as their audience grows and consumption rises. Not growing the audience is often not an option either: a trend is emerging in which larger services can spend bigger budgets on content and technology, reducing churn and attracting new audiences from smaller services.

The Solutions Used Today

Most of the challenges outlined in the previous section originate from the adoption of HTTP adaptive streaming protocols. These protocols work by splitting long streams into short segments. The segments are generated by the packager and listed in a manifest file, after which they can be distributed over standard HTTP CDNs. Each segment starts with a keyframe, allowing playback to start immediately when the segment is loaded. Because keyframes are considerably larger than the frames in between, this results in a substantial increase in the bandwidth required to deliver the video, and the increase grows as segments get shorter.
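The bandwidth cost of starting every segment with a keyframe can be illustrated with a rough model. This is a simplified sketch: the function name, the frame rate and the per-frame sizes are assumptions chosen for illustration, not measurements, and real encoders produce frames of varying size.

```python
def average_bitrate_kbps(
    segment_duration_s: float,
    fps: float = 25.0,                # assumed frame rate
    keyframe_kbit: float = 400.0,     # assumed size of one keyframe
    delta_frame_kbit: float = 40.0,   # assumed size of one non-keyframe
) -> float:
    """Average bitrate when every segment starts with exactly one keyframe."""
    frames = round(segment_duration_s * fps)
    if frames < 1:
        raise ValueError("segment must contain at least one frame")
    # One keyframe per segment, all remaining frames are smaller delta frames.
    total_kbit = keyframe_kbit + (frames - 1) * delta_frame_kbit
    return total_kbit / segment_duration_s


if __name__ == "__main__":
    for duration in (6.0, 2.0, 1.0):
        print(f"{duration:.0f} s segments: {average_bitrate_kbps(duration):.0f} kbps")
```

With these assumed numbers, 6-second segments average 1060 kbps, 2-second segments 1180 kbps and 1-second segments 1360 kbps: shortening segments to start playback sooner is paid for in bandwidth.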

The approach has further downsides. In most cases, to load segments, players need to set up connections to the server, load the manifest, start loading the segment, and push it into the playback buffer. As Internet connections are often unstable, the player requires large buffers to absorb reasonable bandwidth fluctuations and guarantee smooth video playback. This results in increased latency and zapping/start-up time.
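How segment length and buffer size add up to latency can be sketched with a back-of-the-envelope model. The class, the function and the default delays below are hypothetical and serve only as an illustration; actual latency depends on the encoder, packager, CDN and player in use.

```python
from dataclasses import dataclass


@dataclass
class HasPlayerConfig:
    segment_duration_s: float            # length of each media segment
    buffered_segments: int               # segments held in the buffer before playback starts
    encode_package_delay_s: float = 1.0  # assumed encoder and packager processing delay
    cdn_delay_s: float = 0.5             # assumed CDN and network transfer delay


def estimated_live_latency_s(cfg: HasPlayerConfig) -> float:
    """Rough glass-to-glass latency estimate for a segment-based live stream."""
    # A segment can only be published once it is complete, so packaging
    # costs one full segment duration on top of the processing delay.
    publish_delay = cfg.encode_package_delay_s + cfg.segment_duration_s
    # The player waits until its buffer holds the configured number of segments.
    buffer_delay = cfg.buffered_segments * cfg.segment_duration_s
    return publish_delay + cfg.cdn_delay_s + buffer_delay


if __name__ == "__main__":
    for duration in (6.0, 2.0):
        cfg = HasPlayerConfig(segment_duration_s=duration, buffered_segments=3)
        print(f"{duration:.0f} s segments: ~{estimated_live_latency_s(cfg):.1f} s latency")
```

In this model, a player buffering three 6-second segments ends up roughly 25.5 seconds behind live, and even with 2-second segments the estimate is still about 9.5 seconds, which is why buffer depth and segment length dominate the latency of segment-based delivery.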

There are a few solutions for the challenges outlined in the previous section which are being leveraged by recent low-latency proposals:

- An often-seen approach to optimising delivery and reducing latency is shifting towards non-HTTP-based (and less cacheable) protocols like Real-Time Messaging Protocol (RTMP), Web Real-Time Communications (WebRTC) or WebSockets. As these protocols often do not rely on HTTP, they cannot be delivered using traditional CDNs and consequently require a dedicated and costly infrastructure to scale. Quite often these protocols also have other drawbacks compared to standard HAS protocols, such as the inability to adapt dynamically to changes in network connectivity, as the HAS Adaptive Bitrate (ABR) algorithms do.

- Another solution that has seen quite some industry attention is the use of Chunked Transfer Encoding (CTE) over HTTP in conjunction with DASH based on MPEG’s Common Media Application Format (CMAF), often referred to as DASH CMAF-CTE. This is, however, only a partial solution: because the principal mechanism is shortening or lengthening segments and chunks, one has to trade off latency and start time against bandwidth overhead.

- In order to reduce bandwidth usage, we see more and more experiments in the market to deliver content using alternative codecs, like H.265/HEVC, AV1 and VP9. While this looks very promising, the approach still has a few drawbacks. No next-generation codec is yet available across the most used devices. As a result, this approach often requires a secondary encoding to be made, packaged and distributed. The positive news is that for some use cases, a business case can already be made to justify this increased complexity.

In summary, these solutions tend to significantly increase costs for the service provider due to:

- increased bandwidth;

- parallel encoding and caching workflows;

- non-HTTP origins and caches.

The High Efficiency Streaming Protocol

Rather than optimising a single aspect of the problem and accepting a trade-off, as current approaches do, THEO developed a radically new HTTP Adaptive Streaming protocol. It was designed to improve user experience and engagement by:

- significantly reducing latency, allowing for sub-second latency;

- shortening zapping times to about 100 ms, allowing for an experience similar to traditional broadcast;

- bringing down bandwidth costs and optimising viewer bandwidth usage by up to 20%;

- while still being scalable using HTTP CDNs, allowing for virtually endless scaling in a cost-efficient manner.

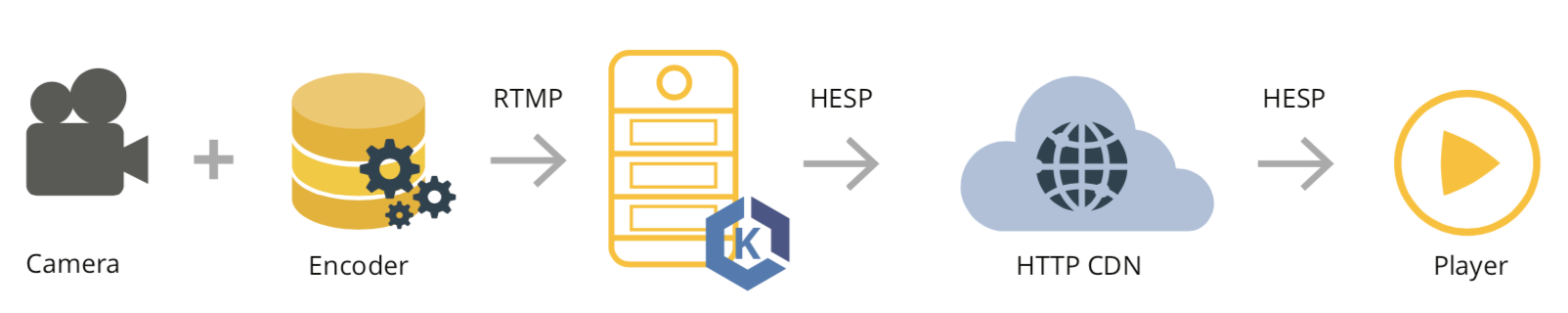

As an additional requirement, the protocol was designed to integrate into existing video delivery pipelines and workflows: it is compatible with existing encoders and third-party CDNs, and only requires changes in the packager and player.