In our series of articles on why low latency matters and how to achieve it, we already discussed chunked CMAF, a very promising technique that is actively being standardised and implemented by the industry at the time of publication. There are, however, alternatives that are often forgotten. One such approach is Low Latency HLS, or LHLS.

What is LHLS?

LHLS is, as the name suggests, an adaptation of Apple’s HTTP Live Streaming (HLS) protocol, which is widely used within the industry. Historically, HLS was designed to solve the scaling problems faced by protocols such as RTMP. The trade-off was latency: HLS streams typically run tens of seconds behind live, and sometimes up to a minute.

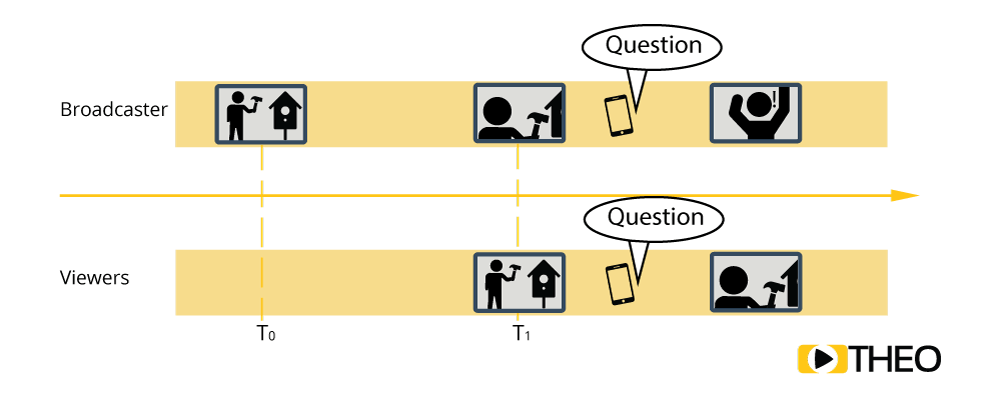

While researchers had long been proposing improvements and presenting strategies to reduce this latency, it was Twitter’s Periscope that first implemented a number of these improvements and bundled them as LHLS. For the Periscope platform, which focuses on live streaming experiences where viewers can interact with broadcasters by commenting and sending hearts, low latency is of the utmost importance to the user experience. High latency would significantly impair the ability to interact. Take, for example, a broadcaster streaming how they are building a birdhouse. When a viewer asks a question and it only reaches the broadcaster 30 seconds after the relevant explanation, the context is lost.

Fig. 1 - Latency example: a question is no longer relevant after 30 seconds

LHLS addresses these issues and aims to provide latency in the two to five second range while keeping some of the advantages of HLS, such as its scalability. Furthermore, the protocol was designed to be compatible with HLS, so that default players across different platforms can fall back to standard HLS behaviour.

How does HLS work (in a nutshell)?

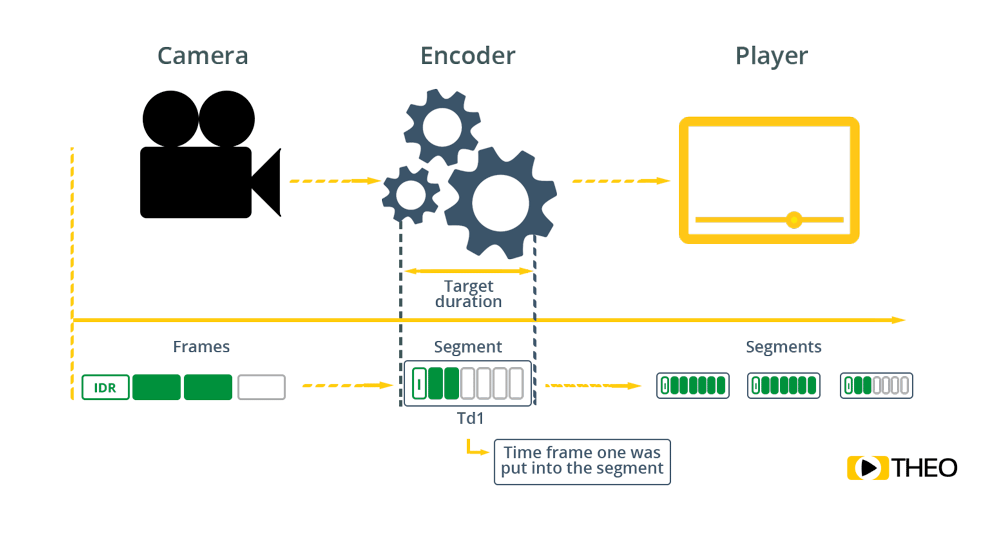

Before explaining how LHLS works, let’s first take a step back and look at how HLS works and where its latency comes from. The basis of HLS is fairly simple: a video stream is split into small media segments, meaning that instead of sending out one continuous file, the server produces small files of a certain length. The maximum length of such a segment in HLS is called the Target Duration. A player then downloads these segments one after the other and simply plays them in the order given by the playlist.

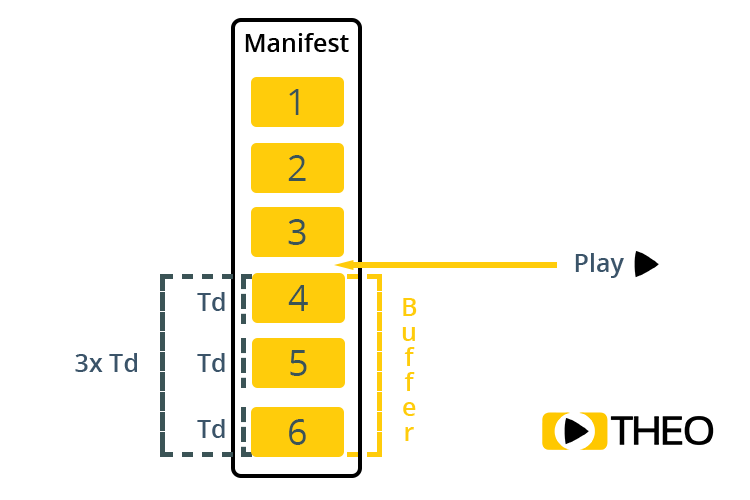

Segments usually have a duration of 2 to 6 seconds. In most streaming protocols, a buffer of about three segments, with a fourth usually being downloaded, is considered optimal for avoiding playback stalls. The reasoning is that each segment needs to be encoded, listed in the manifest, downloaded and added to the buffer as a whole. This often results in latencies of 10 to 30 seconds.

Fig. 2 - Transmission of segments to the player

For players to be able to identify which segment should be downloaded, HLS makes use of a manifest file, which lists the segments in order. For live streams, new segments are added at the end of the manifest file. When a player reloads the manifest file (the protocol dictates it should be reloaded about every Target Duration), it sees the new segments listed and can download and play them. To allow for adaptive bitrate switching, HLS manifests are grouped in a Master Playlist which links to the different streams, allowing a player to choose the stream with the bitrate and resolution best suited to its network and device.

Fig. 3 - ABR switching between HLS manifests based on the network and device.
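To make the manifest mechanics concrete, here is a minimal Python sketch of how a player might read the Target Duration and the ordered segment list from a media playlist. The tags used are standard HLS tags; the segment file names and the `parse_media_playlist` helper are made up for illustration, and a real player handles many more tags than this.

```python
def parse_media_playlist(text):
    """Return (target_duration, [(duration, uri), ...]) from a media playlist."""
    target_duration = None
    segments = []
    pending_duration = None  # duration from the most recent #EXTINF tag
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if line.startswith("#EXT-X-TARGETDURATION:"):
            target_duration = int(line.split(":", 1)[1])
        elif line.startswith("#EXTINF:"):
            # Format is "#EXTINF:<duration>,[<title>]"
            pending_duration = float(line.split(":", 1)[1].split(",", 1)[0])
        elif line and not line.startswith("#") and pending_duration is not None:
            # A non-tag line following #EXTINF is the segment URI
            segments.append((pending_duration, line))
            pending_duration = None
    return target_duration, segments


# Hypothetical live playlist; new segments are appended at the end.
EXAMPLE_PLAYLIST = """#EXTM3U
#EXT-X-VERSION:3
#EXT-X-TARGETDURATION:6
#EXT-X-MEDIA-SEQUENCE:100
#EXTINF:6.0,
segment100.ts
#EXTINF:6.0,
segment101.ts
#EXTINF:5.8,
segment102.ts
"""

target, segs = parse_media_playlist(EXAMPLE_PLAYLIST)
print(target, segs)
```

A live player would call something like this on every manifest reload and download only the segments it has not seen yet.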

What causes latency with HLS?

The latency introduced by HLS is related to the Target Duration. Before a streaming server can list a new segment in a manifest, that segment must be created first. As such, the server needs to buffer a segment of ‘Target Duration’ length before publishing it. In the worst case, the first frame a player can download is already ‘Target Duration’ seconds old!

Fig. 4 - Segments generation and Target Duration

The HLS specification also states that a player should maintain a healthy buffer and start playback three Target Durations from the end of the latest manifest. This is meant to provide robustness in case of network or server issues. The result is another three Target Durations of latency, bringing the total to four Target Durations. Keeping in mind that Apple still recommends a six second Target Duration (this used to be 10 seconds up until mid 2016), the latency introduced by the streaming protocol alone would be about 24s (4 × 6s). This is of course ignoring any encoding, first mile, distribution and network delays.

Fig. 5 - 3 segments need to be buffered according to the HLS specification
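The arithmetic behind these numbers can be written down as a small Python sketch. The function name `hls_protocol_latency` is ours, and the model deliberately covers only the two contributions described above (one Target Duration for segment creation, three for the player buffer); encoding, first mile and network delays are ignored.

```python
def hls_protocol_latency(target_duration_s, buffered_segments=3):
    """Protocol-only latency: segment creation plus the player's buffer offset."""
    segment_creation = target_duration_s  # segment must be complete before it is listed
    player_buffer = buffered_segments * target_duration_s  # playback starts this far from live
    return segment_creation + player_buffer


for target_duration in (10, 6, 2):
    print(target_duration, "s segments ->", hls_protocol_latency(target_duration), "s latency")
```

With the recommended six second Target Duration this gives 24 seconds, and with the older ten second recommendation 40 seconds.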

How does LHLS work?

Now that we know the basics of HLS and the origin of its latency, let’s have a look at how LHLS works and how it resolves these problems. LHLS uses two important approaches to reduce latency:

- Leveraging HTTP/1.1 chunked transfer for segments

- Announcing segments before they are available

Another approach could have been to reduce the segment size, but this has severe limitations. Ideally, each segment starts with an intra-coded frame (an I-frame, or more precisely an IDR frame), which allows a player to start playback of that segment immediately, without having to download an earlier segment first. As I-frames are significantly larger than predicted frames (P-frames), reducing the segment size (and thereby adding more I-frames) would increase the overall bandwidth used.
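A rough Python sketch illustrates the effect. The frame sizes and frame rate below are purely illustrative assumptions (an I-frame ten times the size of a P-frame, 30 frames per second), not measurements, and the model assumes exactly one I-frame at the start of each segment.

```python
def average_bitrate_kbps(segment_s, fps=30, i_frame_kB=100, p_frame_kB=10):
    """Average bitrate of a stream with one I-frame per segment (illustrative model)."""
    frames = round(segment_s * fps)
    total_kB = i_frame_kB + (frames - 1) * p_frame_kB  # one I-frame, the rest P-frames
    return total_kB * 8 / segment_s  # kilobytes -> kilobits, per second


for segment_s in (6, 2, 1, 0.5):
    print(segment_s, "s segments ->", average_bitrate_kbps(segment_s), "kbps")
```

Under these assumptions, going from six second to one second segments raises the average bitrate from 2520 kbps to 3120 kbps for the same picture quality, which is why LHLS keeps segments long and attacks latency elsewhere.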

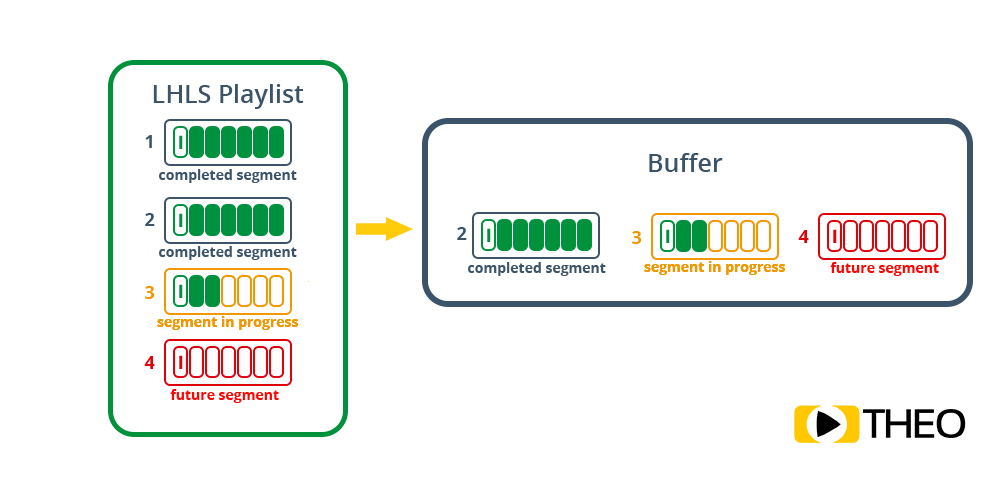

By leveraging HTTP/1.1 chunked transfer, segments can be downloaded while they are still being created. Where video frames are normally buffered and aggregated until multiple seconds of video are available, chunked transfer allows the server to make these frames available as the encoder delivers them. As a result, a player can (given it knows where to find it) start loading a segment while it is being produced. This removes the delay originating in segment creation. A player can request a segment that does not exist yet or is still incomplete, and have it streamed out as soon as new data is available. This is actually highly similar to the chunked CMAF approach. The difference, however, is that HLS traditionally makes use of MPEG Transport Streams, a streaming format which naturally comes in 188-byte packets.

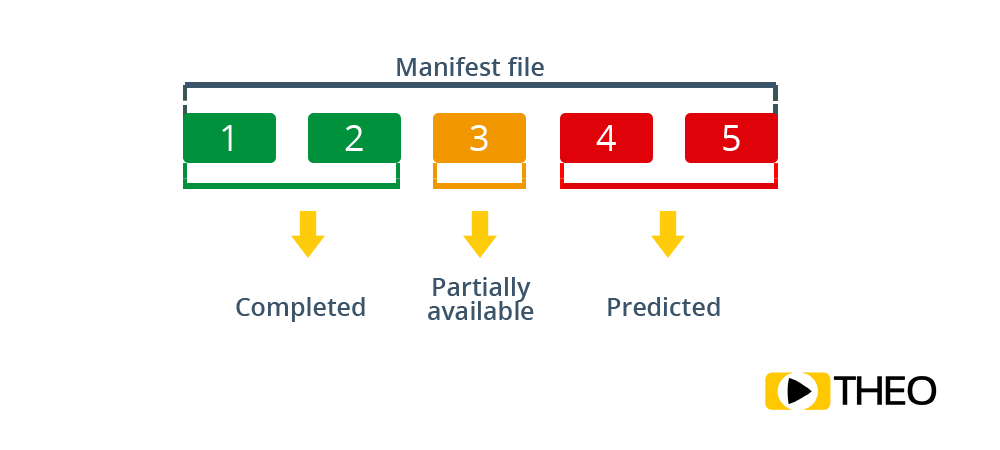

Fig. 6 - Buffer of segments in LHLS playlist
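The chunked transfer mechanism described above can be sketched in a few lines of Python. The chunk framing (hexadecimal length, CRLF, payload, CRLF, and a zero-length chunk to finish) is standard HTTP/1.1; everything else, including the `stream_segment` helper and the dummy packets standing in for encoder output, is a hypothetical illustration rather than how any particular LHLS server is built.

```python
TS_PACKET_SIZE = 188        # MPEG Transport Stream packet size in bytes
LAST_CHUNK = b"0\r\n\r\n"   # zero-length chunk marks the end of the response body


def encode_chunk(data: bytes) -> bytes:
    """Frame one HTTP/1.1 chunk: hex length, CRLF, payload, CRLF."""
    return format(len(data), "x").encode("ascii") + b"\r\n" + data + b"\r\n"


def stream_segment(packets):
    """Yield chunks as packets arrive, so the segment is sent while it is still growing."""
    for packet in packets:
        yield encode_chunk(packet)
    yield LAST_CHUNK  # only sent once the encoder has finished the segment


# Dummy stand-in for encoder output: three packets starting with the TS sync byte 0x47.
packets = [b"\x47" + bytes(TS_PACKET_SIZE - 1) for _ in range(3)]
wire = b"".join(stream_segment(packets))
print(len(wire), "bytes on the wire")
```

A real server would more likely group many packets (for example a whole frame) into one chunk; one packet per chunk is used here only to keep the framing visible.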

The second approach aims to reduce the latency introduced by the buffer offset. One reason this offset exists is that a player needs to load both the manifest and the actual segment before it can fill its buffer. As such, a player should know the location of a segment as early as possible. When the server anticipates segment creation and already lists the location of future segments, a player knows in advance which files need to be loaded. As the player receives the previous segment via chunked transfer, it also knows when the next segment becomes available: right after the previous one has been fully downloaded. Because the early announcements remain valid HLS, players which are not LHLS aware can still play the stream as if it were a normal HLS stream and still get an improvement in latency.

Fig. 7 - Load of segments from a Manifest file
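As a hedged illustration of announcing segments early, the Python sketch below builds a live playlist that lists segments which have not been produced yet. LHLS has no published specification, so the `build_live_playlist` helper, the segment naming scheme and the choice to advertise the Target Duration as the nominal length of future segments are all assumptions made for this example.

```python
def build_live_playlist(first_sequence, completed, announced, target_duration=6):
    """List completed segments plus `announced` future ones the server will stream later."""
    lines = [
        "#EXTM3U",
        "#EXT-X-VERSION:3",
        f"#EXT-X-TARGETDURATION:{target_duration}",
        f"#EXT-X-MEDIA-SEQUENCE:{first_sequence}",
    ]
    for offset in range(completed + announced):
        # Future segments have no known duration yet; the Target Duration is
        # used as a nominal value (an assumption of this sketch).
        lines.append(f"#EXTINF:{target_duration:.1f},")
        lines.append(f"segment{first_sequence + offset}.ts")
    return "\n".join(lines) + "\n"


playlist = build_live_playlist(first_sequence=100, completed=2, announced=2)
print(playlist)
```

A request for one of the announced segments would then be held open by the server and answered with chunked transfer as soon as the encoder starts producing it.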

The result of these two approaches is simple but effective. It removes both the latency introduced by segmenting the video stream and the latency introduced by the manifest. Additionally, the approach scales over standard CDNs, provided they support HTTP/1.1 chunked transfer.

The evolution of LHLS

LHLS is not a protocol that is frozen in a specification. While this brings some issues for commercial vendors, it also leaves room for further experimentation. A good example is the limitation of early LHLS versions with regard to adaptive bitrate switching. As clients receive media data at the rate it is being produced, bandwidth estimates become more difficult to make. These estimates are, however, required to decide whether a player should attempt to switch to a higher (or lower) bitrate. With more advanced algorithms it is still possible to make sound estimates of the available bandwidth, which brings adaptive capabilities back to LHLS.

Another experiment which is proving interesting is leveraging HTTP/2 capabilities. For every segment, a player still needs to open a new connection on a new socket, which means CDNs could get overloaded with many active sockets. HTTP/2, however, brings the capability to multiplex multiple requests over a single socket. As a result, the client and server can keep one socket open and remove the need to continuously set up new ones.

Since the teams at Twitter announced what they had achieved with LHLS in mid 2017, multiple parties within the industry have been attempting to replicate these results. While nobody appears to be attempting to standardise the approach, implementations of LHLS have been popping up more and more. The Streamline project, for example, provides an open source LHLS enabled server and describes how you can set up an end to end pipeline (https://github.com/colleenkhenry/streamline). It provides a solid base for anyone aiming to build their own LHLS pipeline.

On the player side, options are limited as well. With THEOplayer, however, we support chunked transfer with both CMAF and LHLS. If you want to know more about achieving low latency, don’t hesitate to reach out.