
A Comprehensive Guide to Low Latency

by THEO Technologies on February 21, 2021

Latency, low latency and ultra-low latency are becoming increasingly important. New developments such as LL-HLS and CMAF-CTE confirm and support this trend, as do other streaming protocols such as WebRTC and RTMP. With so many technologies and definitions of latency, it can be difficult to see the forest for the trees. So let us start with a few definitions of latency, all equally valid.


WHAT IS LATENCY?

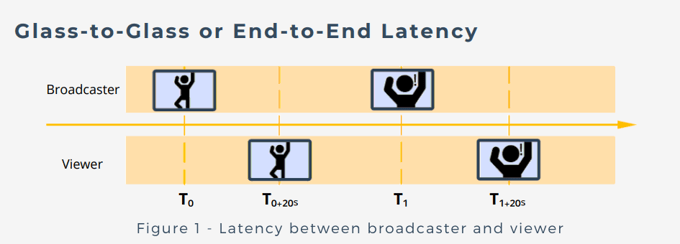

The most straightforward definition of latency is the so-called glass-to-glass or end-to-end latency: the time between the moment an action happens (in front of the first glass, the camera) and the moment a viewer sees that action on their screen (the other glass). This definition is especially useful for the streaming of live and interactive events.

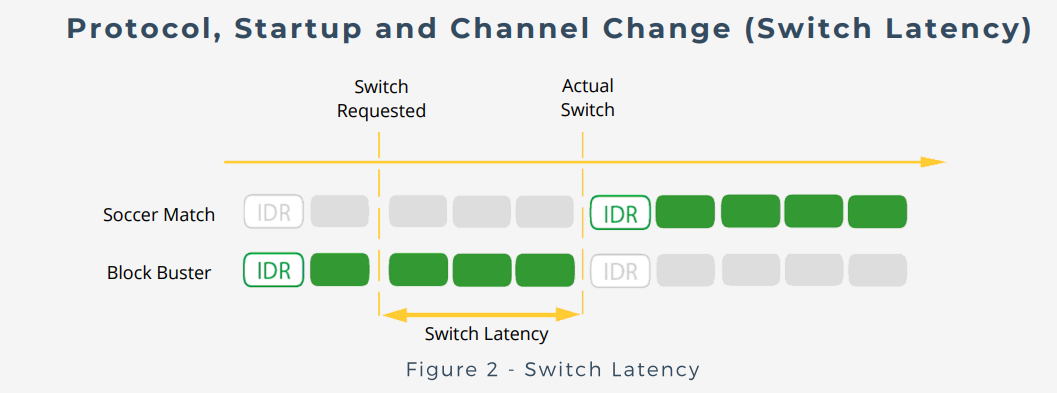

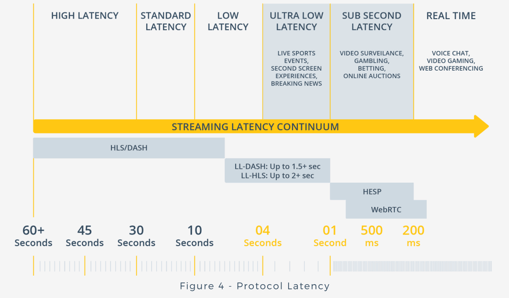

A second definition is the so-called protocol latency: the latency between the output of the encoder and the actual playback. It is relevant for low latency applications that do not want to compromise on encoder quality, since it leaves the encoding time out of the equation.

We also have the startup and channel change latency: the time it takes to start a video or to change channel once the command has been given. This is an important parameter for streaming video applications that want to provide a lean-back TV experience, where viewers are used to changing channels instantly at the push of a button.
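To make these definitions concrete, here is a minimal Python sketch that computes them from wall-clock timestamps. The `FrameTimeline` class, its field names and the example timestamps are hypothetical, and the sketch assumes that all timestamps come from one synchronised clock.

```python
from dataclasses import dataclass


@dataclass
class FrameTimeline:
    """Hypothetical wall-clock timestamps (in seconds) for one video frame."""
    captured: float        # the action happens in front of the camera (first glass)
    encoder_output: float  # the encoder emits the encoded frame
    displayed: float       # the viewer sees the frame on screen (second glass)


def glass_to_glass_latency(t: FrameTimeline) -> float:
    """End-to-end latency: from camera to screen."""
    return t.displayed - t.captured


def protocol_latency(t: FrameTimeline) -> float:
    """Latency from encoder output to actual playback (encoding time excluded)."""
    return t.displayed - t.encoder_output


def startup_latency(command_time: float, first_frame_time: float) -> float:
    """Time from the start or channel change command until the first frame is shown."""
    return first_frame_time - command_time


frame = FrameTimeline(captured=10.0, encoder_output=10.5, displayed=12.0)
print(glass_to_glass_latency(frame))  # 2.0
print(protocol_latency(frame))        # 1.5
```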

NOT EVERY LATENCY IS EQUALLY IMPORTANT FOR EVERY USE CASE.

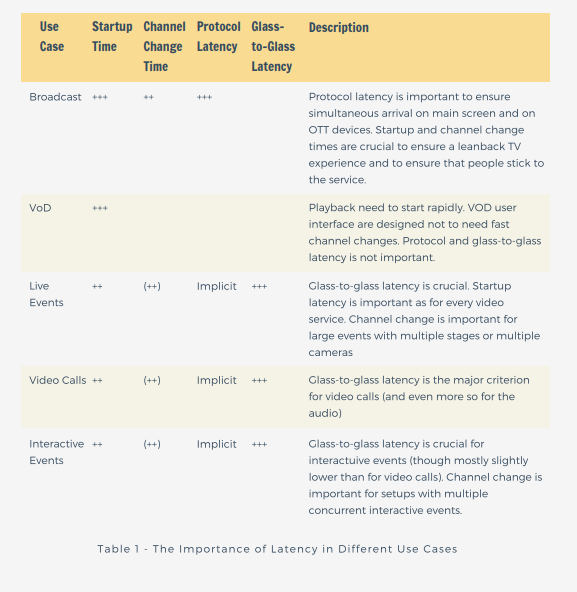

In the table below we indicate which latency really matters for five typical use cases:

WHERE IS LATENCY INTRODUCED?

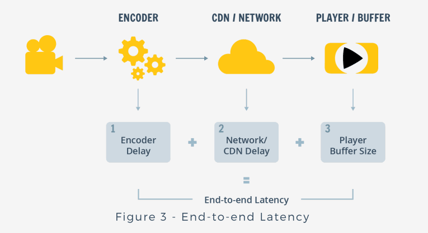

Latency is introduced at many different steps in the video distribution chain.

- Firstly, encoding/transcoding takes time, with a direct impact on the glass-to-glass latency. A use-case-dependent trade-off is needed between latency, quality and bitrate. Typically, quality and a lower bitrate are preferred, except in applications where glass-to-glass latency is crucial.

- Secondly, the distribution network between the source and the playback device adds to the glass-to-glass, protocol, startup and channel change latency alike. CDNs help here: they let the stream benefit from dedicated networks, and they reduce the overall load on the distribution network by caching as much as possible.

- Thirdly, the player buffer adds to the latency. Players use buffers to cope with network variations and to avoid stalls. The right trade-off depends on how important latency is and on the quality of the network. The same holds for startup latency and channel change time: one needs to define the minimum amount of buffered video required before playback actually starts.

- Overall, the streaming protocol has a large impact on all types of latency, because it defines how the video is divided into packets for transfer, and it directly determines the buffer depth. Tuned or dedicated protocols are needed to achieve ultra-low latency and ultra-fast startup.
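As a rough mental model, the contributions listed above can be added up into a latency budget. The sketch below does just that; the stage names and delay values are hypothetical placeholders rather than measurements, and treating the stages as simply additive is a simplification.

```python
def latency_budget(stages: dict[str, float]) -> float:
    """Approximate glass-to-glass latency as the sum of per-stage delays (seconds)."""
    return sum(stages.values())


# Hypothetical per-stage delays in seconds; real values depend on encoder
# settings, the network, the CDN and the player configuration.
stages = {
    "encoding": 0.5,
    "packaging_and_protocol": 1.0,
    "distribution_cdn": 0.25,
    "player_buffer": 1.5,
}

total = latency_budget(stages)
print(f"total: {total:.2f} s")
for name, delay in stages.items():
    # Share of the overall budget taken by each stage
    print(f"{name}: {delay / total:.0%} of the budget")
```

Listing the stages this way makes it easy to see which knob (encoder settings, segment or chunk size, buffer target) is worth tuning first for a given use case.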

The different streaming protocols each have different glass-to-glass and protocol latencies. The table below gives an impression of the capabilities of the different protocols:

We see that the traditional DASH and HLS protocols lead to large latencies. These latencies can be reduced by shortening the segments, but latency remains high because a segment is handled as an atomic piece of information: segments are created, stored and distributed as a whole. LL-DASH and LL-HLS overcome this problem by allowing a segment to be transferred piecewise. A segment does not need to be completely available before its first chunks or parts can be transferred to the client for playback. This significantly reduces the latency.
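The difference between atomic segments and piecewise transfer can be illustrated with a small calculation: how long does a freshly encoded frame wait before the unit that contains it (a whole segment, or a single chunk or part) is complete and can be sent? This sketch assumes back-to-back units of a fixed duration; the 6-second segment and 0.5-second chunk durations are example values only.

```python
import math


def packaging_wait(frame_offset: float, unit_duration: float) -> float:
    """Seconds a frame waits until the unit (segment or chunk) holding it is complete.

    frame_offset is the frame's position in seconds from the start of the stream.
    Units are assumed to be back-to-back with a fixed duration (a simplification).
    """
    unit_end = (math.floor(frame_offset / unit_duration) + 1) * unit_duration
    return unit_end - frame_offset


SEGMENT_S = 6.0  # example segment duration
CHUNK_S = 0.5    # example chunk/part duration

for offset in (0.0, 4.25):
    print(offset, packaging_wait(offset, SEGMENT_S), packaging_wait(offset, CHUNK_S))
```

A frame at the very start of a unit waits the full unit duration, so the worst-case packaging wait drops from the segment duration to the chunk duration.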

For ultra-low latency, approaches are needed that allow a continuous flow of images, each transferred as soon as it is available (rather than grouped into chunks or segments). This can be done using WebRTC and HESP (HESP Webinar & Whitepaper), THEO Technologies’ next-generation streaming protocol. HESP uses Chunked Transfer Encoding over HTTP, whereby images are made available to the player on a per-image basis. This ensures that images are available at the client for playback very shortly after they are generated.
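For readers unfamiliar with chunked transfer encoding, the sketch below shows the generic HTTP/1.1 framing: each chunk is its size in hexadecimal, CRLF, the data, CRLF, and the body ends with a zero-size chunk. Sending exactly one encoded image per chunk is an illustrative assumption; this is not HESP's actual packaging format, and the byte strings merely stand in for encoded images.

```python
from typing import Iterable, Iterator


def chunked_body(frames: Iterable[bytes]) -> Iterator[bytes]:
    """Frame each encoded image as one HTTP/1.1 chunk (Transfer-Encoding: chunked)."""
    for frame in frames:
        if not frame:
            continue  # an empty chunk would signal the end of the body, so skip it
        # chunk = size in hex, CRLF, data, CRLF
        yield f"{len(frame):X}\r\n".encode("ascii") + frame + b"\r\n"
    # last-chunk ("0") followed by an empty trailer section
    yield b"0\r\n\r\n"


frames = [b"frame-1", b"img2"]  # stand-ins for encoded images
print(b"".join(chunked_body(frames)))
```

Because each chunk can be flushed to the network as soon as its image exists, the client does not have to wait for a complete file. HTTP/2 and HTTP/3 do not use this coding; they stream the body in their own DATA frames, which serve the same purpose.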

Of course, latency is only one aspect of these protocols.

The zapping time is also important. For DASH and HLS, it is a trade-off against latency, because playback can only start at segment boundaries. A player therefore has to choose between waiting for the most recent segment to start and starting playback of a segment that is already available. When latency is not critical, the latter allows very fast startup. When latency is critical, waiting for the newest segment adds a penalty to the startup time.
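The trade-off can be expressed in a deliberately simplified model in which playback may only begin at segment boundaries and download and decode times are ignored. A player can start immediately at the last boundary that has passed (fast startup, but behind the live edge) or wait for the next boundary (close to the live edge, but a slower start). The function name and the example values are hypothetical.

```python
def zap_options(now: float, segment_duration: float) -> dict[str, tuple[float, float]]:
    """Return (startup_wait, extra_latency) in seconds for two simplified zap strategies.

    Assumes playback can only begin at segment boundaries every segment_duration
    seconds, and ignores download, availability and decode time.
    """
    into_segment = now % segment_duration  # time elapsed since the last boundary
    return {
        # Start at the last boundary already passed: instant start, but behind live.
        "start_now": (0.0, into_segment),
        # Wait for the next boundary: start at the live edge, but only after waiting.
        "wait_for_next": (segment_duration - into_segment, 0.0),
    }


print(zap_options(now=100.5, segment_duration=2.0))
```

Substituting the GOP duration for the segment duration gives the corresponding bound for starting at the next keyframe, which is the constraint WebRTC is still subject to.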

WebRTC allows a shorter zapping time, but is still bound to GOP (group of pictures) boundaries.

HESP allows ultra-low startup and channel change times without compromising on latency. HESP does not rely on segments as the basic unit to start playback; it can start playback at any image position. As explained in (reference), HESP uses range requests to tap into the stream of images, which are made available for distribution as soon as they are created.
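HTTP range requests are a standard mechanism (defined in RFC 9110) for asking a server for only part of a resource. The sketch below builds an open-ended range request, `bytes=<offset>-`, which asks for everything from a given byte offset onward. The URL and offset are hypothetical, and how HESP maps an image position to a byte offset is not modelled here.

```python
import urllib.request


def open_ended_range_request(url: str, byte_offset: int) -> urllib.request.Request:
    """Build an HTTP request for all bytes from byte_offset onward (open-ended range)."""
    if byte_offset < 0:
        raise ValueError("byte_offset must be non-negative")
    return urllib.request.Request(url, headers={"Range": f"bytes={byte_offset}-"})


# Hypothetical URL and offset, for illustration only.
req = open_ended_range_request("https://cdn.example.com/stream/video.mp4", 1_048_576)
print(req.get_header("Range"))
# urllib.request.urlopen(req) would send it; a server that honours the range
# replies with 206 Partial Content.
```

Because range requests are plain HTTP, they can be served by ordinary CDN edges, which is what keeps such an approach compatible with HTTP-scale distribution.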

Low latency and fast zapping are fine, but scalability is equally important, especially for video services reaching tens or hundreds of thousands of concurrent viewers. HTTP-based approaches (DASH, LL-DASH, HLS, LL-HLS, HESP) have an edge over WebRTC here: they offer the widest possible network reach, can tap into a wide range of efficient CDN solutions, and scale using standard HTTP file servers. WebRTC, on the other hand, relies on active video streaming between server and client, and an edge server supports far fewer WebRTC viewers than a regular CDN edge cache can serve.

LOW LATENCY USE CASES

Video-on-Demand

VoD traditionally focuses on the highest possible quality for the lowest number of bits. Fast startup is the only latency metric that really impacts the user experience. Besides adopting the right streaming video approach, user interfaces are adopting latency hiding techniques to give the viewers the impression of instantaneous startup times. This includes prefetching the video or starting at lower qualities so that the video is transferred faster and starts earlier.
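Starting at a lower quality shortens startup because less data has to arrive before the first segment can play. A back-of-the-envelope sketch, assuming the first segment must be fully downloaded and that throughput is constant; the segment duration, bitrates and throughput are example values.

```python
def first_segment_fetch_time(
    segment_duration_s: float,
    bitrate_bps: float,
    throughput_bps: float,
    rtt_s: float = 0.0,
) -> float:
    """Approximate seconds to fetch the first segment: one round trip plus transfer time."""
    if throughput_bps <= 0:
        raise ValueError("throughput must be positive")
    segment_bits = segment_duration_s * bitrate_bps
    return rtt_s + segment_bits / throughput_bps


# Example: 2-second segments over a constant 16 Mbit/s connection.
for bitrate in (8_000_000, 2_000_000):
    t = first_segment_fetch_time(2.0, bitrate, 16_000_000)
    print(f"{bitrate / 1e6:.0f} Mbit/s rendition: {t:.2f} s")
```

In this model, dropping from an 8 Mbit/s to a 2 Mbit/s rendition cuts the first-segment download time by a factor of four; the player can then switch up once playback has started.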

Broadcast

Broadcast traditionally focuses on quality of experience for a large audience. This calls for longer encoding times to ensure the best possible visual quality of the video for a given bandwidth budget, but also for fast start-up and channel change times and for scalability. Latency is becoming increasingly important as well, driven by the desire to have the playback on online devices occur at the same time as the existing broadcast distribution.

Therefore, the industry is gradually moving to shorter segments and to LL-DASH and LL-HLS. This still does not combine well with fast channel change, though, because a trade-off has to be made between startup and channel change times on the one hand and latency on the other.

Live Event Streaming

Live event streaming depends critically on low glass-to-glass latency, and the traditional HLS and DASH protocols are not satisfactory here. Live event organizers therefore use WebRTC, which works fine for small audiences but is expensive to scale. Consequently, organizers targeting mass audiences turn to LL-DASH and LL-HLS when they can accept the higher latency. HESP offers an answer here: its latency is slightly higher than WebRTC's, but it has the same scaling characteristics as any HTTP-based approach, far outperforming WebRTC in that respect.
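The reasoning in these use cases can be summarised as a small decision helper. The thresholds below (10 seconds and 1 second) are illustrative assumptions chosen for the sketch, not normative limits, and a real choice also weighs cost, device support and quality.

```python
from enum import Enum


class Protocol(Enum):
    DASH_HLS = "DASH / HLS"
    LL_DASH_LL_HLS = "LL-DASH / LL-HLS"
    HESP = "HESP"
    WEBRTC = "WebRTC"


def suggest_protocol(
    target_latency_s: float, mass_audience: bool, bidirectional: bool = False
) -> Protocol:
    """Map latency and audience requirements to a protocol family (illustrative only)."""
    if bidirectional:
        return Protocol.WEBRTC  # two-way video conferencing
    if target_latency_s >= 10.0:  # hypothetical cut-off: latency not critical
        return Protocol.DASH_HLS
    if target_latency_s >= 1.0:  # a few seconds: chunked/partial segment delivery
        return Protocol.LL_DASH_LL_HLS
    # Sub-second: WebRTC for small audiences, HESP when HTTP/CDN scale is needed.
    return Protocol.HESP if mass_audience else Protocol.WEBRTC


print(suggest_protocol(0.5, mass_audience=True).value)
```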

Bi-directional video conferencing uses WebRTC.

REAL-LIFE EXAMPLES

To make this more concrete, we zoom in on a few examples with a focus on latency:

THEO achieves latencies between 1 and 3 seconds with LL-DASH and LL-HLS, depending on the player and stream configuration. In the past few months, THEO has engaged with several customers worldwide, both for PoCs and for real deployments, reaching latencies of around 2 seconds in real-life conditions.
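One common way to estimate live latency on the client side for HLS is to compare the wall clock with the `EXT-X-PROGRAM-DATE-TIME` tag, which maps the first media sample of a segment to an absolute date and time. This minimal sketch assumes that the packager's and player's clocks are synchronised and that the tag reflects capture time; in practice it measures latency relative to whatever time the packager stamped. The playlist line and timestamps are examples.

```python
from datetime import datetime, timezone


def parse_program_date_time(tag_line: str) -> datetime:
    """Parse an '#EXT-X-PROGRAM-DATE-TIME:<ISO 8601 date-time>' playlist line."""
    prefix = "#EXT-X-PROGRAM-DATE-TIME:"
    if not tag_line.startswith(prefix):
        raise ValueError("not an EXT-X-PROGRAM-DATE-TIME tag")
    value = tag_line[len(prefix):].strip()
    if value.endswith("Z"):
        value = value[:-1] + "+00:00"  # older fromisoformat versions reject 'Z'
    return datetime.fromisoformat(value)


def estimate_latency(pdt: datetime, position_in_segment_s: float, now: datetime) -> float:
    """Latency in seconds: wall clock now minus the wall-clock time of the frame shown."""
    frame_time = pdt.timestamp() + position_in_segment_s
    return now.timestamp() - frame_time


pdt = parse_program_date_time("#EXT-X-PROGRAM-DATE-TIME:2021-02-21T10:00:00.000Z")
now = datetime(2021, 2, 21, 10, 0, 3, tzinfo=timezone.utc)
print(estimate_latency(pdt, 1.0, now))  # 2.0
```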

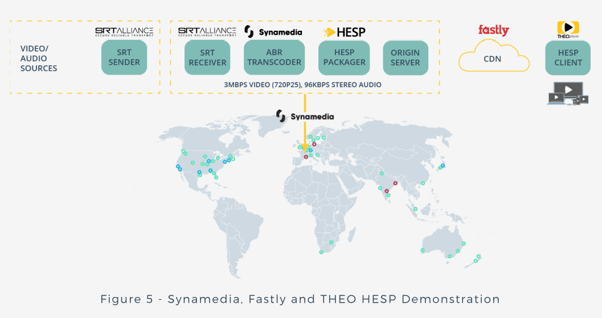

Synamedia, Fastly and THEO set up an end-to-end demonstrator with HESP, reaching a virtually unlimited number of viewers with sub-second protocol latency and zapping times well below 500 ms [MHV 2020].

WebRTC is used for video conferencing tools such as Google Meet.

YouTube Live delivers live content with a delay of several seconds.

Wowza has a hybrid system for live events, using WebRTC for a limited number of participants for whom ultra-low latency is critical and LL-HLS for everyone else. [LL-HLS Webinar with THEO and Wowza]

CONCLUSION

Different applications come with different low latency expectations.

Existing technologies are typically designed to cover a range of latency needs. For stringent latency requirements (down to a few seconds) we need LL-HLS and LL-DASH. Sub-second latency at scale is made possible by HESP, the High Efficiency Streaming Protocol. WebRTC is capable of achieving even lower latencies, but often at the expense of quality of experience, and falls short when it comes to scalability to a large number of viewers.

Any questions left? Contact our THEO experts.
