LL-HLS Series: How Does LL-HLS Work?

by THEOplayer on June 30, 2020

To provide online video at scale, we use HTTP Adaptive Streaming Protocols such as HLS or MPEG-DASH, which are both extremely popular. In this article, we will provide some insights on how Apple’s Low Latency HLS (or LL-HLS) works.

HTTP Live Streaming

First, let’s recap the basics of HTTP Live Streaming (HLS) in one paragraph. It is fairly simple: a video stream is split into small video segments, which are listed in a playlist file. A player is responsible for loading the playlist, loading the relevant segments, and repeating this until the video is fully played. To allow for dynamic quality selection and avoid buffering, different playlists can be listed in a “master playlist”. This allows players to identify the ideal bitrate for the current network conditions and switch from one playlist to another.
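As a rough illustration of the bitrate selection described above, here is a minimal Python sketch. The playlist contents, the URIs, and the helper names `parse_variants` and `pick_variant` are made up for this example, and a real player uses far more refined heuristics than a single bandwidth comparison.

```python
import re

# Hypothetical master playlist; the URIs and bitrates are illustrative only.
MASTER_PLAYLIST = """#EXTM3U
#EXT-X-STREAM-INF:BANDWIDTH=800000,RESOLUTION=640x360
low/playlist.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=2500000,RESOLUTION=1280x720
mid/playlist.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=6000000,RESOLUTION=1920x1080
high/playlist.m3u8
"""


def parse_variants(master_text):
    """Return (bandwidth, uri) pairs found in a master playlist."""
    lines = [line.strip() for line in master_text.splitlines() if line.strip()]
    variants = []
    for i, line in enumerate(lines):
        if line.startswith("#EXT-X-STREAM-INF:"):
            # Anchor on start or comma so AVERAGE-BANDWIDTH is not matched.
            match = re.search(r"(?:^|,)BANDWIDTH=(\d+)", line.split(":", 1)[1])
            if match and i + 1 < len(lines):
                # The URI of the variant is the line following the tag.
                variants.append((int(match.group(1)), lines[i + 1]))
    return variants


def pick_variant(variants, measured_bps):
    """Pick the highest bitrate that fits the measured bandwidth, else the lowest."""
    fitting = [variant for variant in variants if variant[0] <= measured_bps]
    return max(fitting) if fitting else min(variants)


variants = parse_variants(MASTER_PLAYLIST)
print(pick_variant(variants, 3_000_000))
```

With roughly 3 Mbps of measured bandwidth, this sketch settles on the 2.5 Mbps playlist and falls back to the lowest one when nothing fits.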

For more details on how HLS works, check out our blog post here.

How Does LL-HLS Work?

Apple recently extended HLS to enable lower latency. When HLS was developed in 2009, scaling the streaming solution received the highest priority, which caused latency to be sacrificed. As latency has gained importance over the last few years, HLS was extended to LL-HLS. Just as Rome wasn’t built in a day, neither was the specification. It took some weird twists and turns (you can read all about it in our previous blog post), but it was finalized on the 30th of April with an official update of the HLS specification.

Low Latency HLS aims to provide the same scalability as HLS, but with a latency of 2-8 seconds (compared to 24-30 seconds with traditional solutions). In essence, the changes to the protocol are rather simple:

-

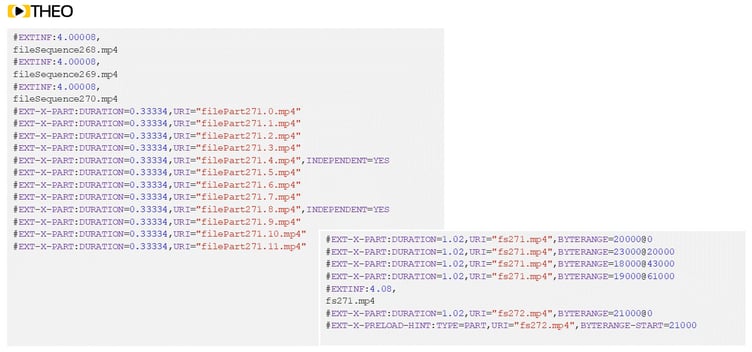

A segment is divided into “parts” which makes them something like “mini segments”. Each part is listed separately in the playlist. In contrast with segments, listed parts can disappear while the segments containing the same data remain available for a longer time.

-

A playlist can contain “Preload Hints” to allow a player to anticipate what data must be downloaded, which reduces overhead.

-

Servers get the option to provide unique identifiers for every version of a playlist, easing cacheability of the playlist and avoiding stale information in caching servers.

These three changes are at the core of the LL-HLS specification, so let’s go into each of these in some more detail (note: there are some other changes, such as “delta playlists”, but these are less crucial for the correct working of LL-HLS and mainly serve as optimizations).

HLS parts are in practice just “smaller segments”. They are signaled with an “EXT-X-PART” tag inside the playlist. As having a lot of segments (or parts) increases the size of the playlist significantly, parts are only listed close to the live edge. Parts also have no requirement to start with an IDR frame, meaning it is OK to have parts which cannot be played individually. This allows servers to publish media information while the segment is still being generated, allowing players to fill up their buffers more efficiently. As a result, buffers on the player side can be significantly smaller compared to normal HLS, which in practice results in reduced latency.
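To make the tag more concrete, the sketch below lists the parts found in a small media playlist. The playlist, its file names, and the helpers `parse_attributes` and `list_parts` are assumptions made for this example; the `DURATION`, `URI` and `INDEPENDENT` attributes are taken from the HLS specification, where `INDEPENDENT=YES` marks a part that starts with an independently decodable frame.

```python
import re

# Hypothetical playlist near the live edge; segment 101 is still being produced.
SAMPLE_PLAYLIST = """#EXTM3U
#EXT-X-TARGETDURATION:4
#EXT-X-PART-INF:PART-TARGET=1.0
#EXT-X-MEDIA-SEQUENCE:100
#EXTINF:4.0,
segment100.mp4
#EXT-X-PART:DURATION=1.0,URI="segment101.part0.mp4",INDEPENDENT=YES
#EXT-X-PART:DURATION=1.0,URI="segment101.part1.mp4"
"""

# Matches KEY=value pairs where the value is either quoted or runs to the next comma.
ATTR_RE = re.compile(r'([A-Z0-9-]+)=("[^"]*"|[^,]*)')


def parse_attributes(attr_text):
    """Turn an HLS attribute list into a dict, dropping surrounding quotes."""
    return {key: value.strip('"') for key, value in ATTR_RE.findall(attr_text)}


def list_parts(playlist_text):
    """Collect the parts announced with EXT-X-PART tags, in playlist order."""
    parts = []
    for line in playlist_text.splitlines():
        # The trailing colon keeps EXT-X-PART-INF out of the result.
        if line.startswith("#EXT-X-PART:"):
            attrs = parse_attributes(line.split(":", 1)[1])
            parts.append({
                "uri": attrs["URI"],
                "duration": float(attrs["DURATION"]),
                "independent": attrs.get("INDEPENDENT") == "YES",
            })
    return parts


for part in list_parts(SAMPLE_PLAYLIST):
    print(part)
```

A player that joins the stream would start decoding at a part flagged as independent and simply append the parts that follow it.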

Parts can be addressed with unique URIs, or with byte-ranges. The use of byte-ranges in the spec allows for sending the segment as one file, out of which the parts can be separately addressed and requested.
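When parts are addressed as byte ranges, a player has to translate the playlist notation into an HTTP `Range` header. The sketch below assumes the `length@offset` notation used by the `BYTERANGE` attribute in the HLS specification, where a missing offset means "continue right after the previous part". The function name `byterange_to_http_range` is made up for this example.

```python
def byterange_to_http_range(byterange, previous_end=0):
    """Convert an HLS byte range ("length@offset" or "length") to an HTTP Range value.

    Returns the header value and the offset just past this range, so the
    next part can omit its offset and continue from there.
    """
    length_text, _, offset_text = byterange.partition("@")
    length = int(length_text)
    offset = int(offset_text) if offset_text else previous_end
    # HTTP ranges are inclusive on both ends, hence the "- 1".
    return f"bytes={offset}-{offset + length - 1}", offset + length


header, next_offset = byterange_to_http_range("2000@4000")
print(header)       # Range for a 2000-byte part starting at byte 4000
print(next_offset)  # Where a following part without an offset would start
```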

Another new tag, called “EXT-X-PRELOAD-HINT”, tells the player which media data will be required next to continue playback. It allows players to anticipate and fire a request to the URI listed in the preload hint to get faster access. Servers should hold (block) requests for hinted data that is not yet available, and respond as soon as the data becomes available. While this approach is not ideal from a server perspective, it makes things a lot simpler on the player side. Servers can still disable blocking requests, but supporting this capability is definitely recommended. All of this adds up to faster delivery of media data to players, reducing latency.
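On the player side, acting on a preload hint mostly comes down to reading the tag and preparing a request ahead of time. The following sketch only builds the request, it does not send it. The attribute names `TYPE`, `URI`, `BYTERANGE-START` and `BYTERANGE-LENGTH` come from the HLS specification, while the playlist snippets, file names and the function `preload_request` are hypothetical.

```python
import re

ATTR_RE = re.compile(r'([A-Z0-9-]+)=("[^"]*"|[^,]*)')


def parse_attributes(attr_text):
    """Turn an HLS attribute list into a dict, dropping surrounding quotes."""
    return {key: value.strip('"') for key, value in ATTR_RE.findall(attr_text)}


def preload_request(playlist_text):
    """Return (uri, headers) for the first hinted part, or None without a hint."""
    for line in playlist_text.splitlines():
        if not line.startswith("#EXT-X-PRELOAD-HINT:"):
            continue
        attrs = parse_attributes(line.split(":", 1)[1])
        if attrs.get("TYPE") != "PART":
            continue  # Other hint types are ignored in this sketch.
        headers = {}
        if "BYTERANGE-START" in attrs:
            start = int(attrs["BYTERANGE-START"])
            if "BYTERANGE-LENGTH" in attrs:
                end = start + int(attrs["BYTERANGE-LENGTH"]) - 1
                headers["Range"] = f"bytes={start}-{end}"
            else:
                # Length not known yet: ask for everything from the start offset.
                headers["Range"] = f"bytes={start}-"
        return attrs["URI"], headers
    return None


# Hypothetical hints: one for a standalone part file, one inside a larger segment.
HINT_AS_FILE = '#EXTM3U\n#EXT-X-PRELOAD-HINT:TYPE=PART,URI="segment101.part2.mp4"\n'
HINT_AS_RANGE = '#EXTM3U\n#EXT-X-PRELOAD-HINT:TYPE=PART,URI="segment101.mp4",BYTERANGE-START=3500\n'

print(preload_request(HINT_AS_FILE))
print(preload_request(HINT_AS_RANGE))
```

The request prepared this way is the one a server would hold open until the hinted data exists.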

The third important update to the HLS specification is the ability to give every version of a media playlist a unique name through query parameters passed in the playlist URI. Using simple parameters, a player can request a playlist that contains a specific segment or part. Players are recommended to request every playlist using these unique URIs. As a result, a server can quickly see what the player needs and provide an updated playlist if available. This capability is extended in such a way that the server can enable blocking requests on these playlists. Through this mechanism, a server can provide the updated playlist as soon as it becomes available. Players detecting this capability can switch strategies to identify which media data will be needed in the future, decreasing the need for large buffers and, once again, reducing latency.
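In the HLS specification these query parameters are named `_HLS_msn` (the media sequence number of the segment the player is waiting for) and `_HLS_part` (the index of the part within that segment). A minimal sketch of building such a playlist URL could look as follows; the function name `blocking_reload_url` and the example URLs are assumptions made for illustration.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def blocking_reload_url(playlist_url, next_msn, next_part=None):
    """Build a playlist URL asking for the version that contains a given segment/part."""
    scheme, netloc, path, query, fragment = urlsplit(playlist_url)
    # Keep unrelated parameters (for example a token) and drop stale directives.
    params = [(key, value) for key, value in parse_qsl(query)
              if key not in ("_HLS_msn", "_HLS_part")]
    params.append(("_HLS_msn", str(next_msn)))
    if next_part is not None:
        params.append(("_HLS_part", str(next_part)))
    return urlunsplit((scheme, netloc, path, urlencode(params), fragment))


# Ask for the playlist version that lists part 2 of segment 101.
print(blocking_reload_url("https://example.com/live/video.m3u8", 101, 2))
```

Because every playlist version gets its own URL, a caching server can store each version separately instead of handing out a stale copy.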

As the protocol extension is backwards compatible with HLS, players which are not aware of LL-HLS capabilities will still be able to play the stream, albeit at a higher latency. This is important for devices which rely on old versions of player software (such as smart TVs and other connected devices): they can be served from the same stream, without the need to set up a separate stream next to the one for devices capable of playing in low latency mode.
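Backwards compatibility works because HLS clients are required to ignore tags they do not recognize. The sketch below imitates that behaviour by filtering the LL-HLS tags out of a playlist, which shows what a legacy player effectively sees. The sample playlist, the file names and the function `legacy_view` are hypothetical, and the tag list is limited to a handful of LL-HLS tags rather than being exhaustive.

```python
# LL-HLS tags that an older player does not know and therefore skips.
LL_HLS_TAG_PREFIXES = (
    "#EXT-X-PART:",
    "#EXT-X-PART-INF:",
    "#EXT-X-PRELOAD-HINT:",
    "#EXT-X-SERVER-CONTROL:",
)

# Hypothetical LL-HLS media playlist.
SAMPLE_PLAYLIST = """#EXTM3U
#EXT-X-TARGETDURATION:4
#EXT-X-SERVER-CONTROL:CAN-BLOCK-RELOAD=YES,PART-HOLD-BACK=3.0
#EXT-X-PART-INF:PART-TARGET=1.0
#EXT-X-MEDIA-SEQUENCE:100
#EXTINF:4.0,
segment100.mp4
#EXT-X-PART:DURATION=1.0,URI="segment101.part0.mp4",INDEPENDENT=YES
#EXT-X-PRELOAD-HINT:TYPE=PART,URI="segment101.part1.mp4"
"""


def legacy_view(playlist_text):
    """Return the playlist as seen by a player that ignores LL-HLS tags."""
    kept = [line for line in playlist_text.splitlines()
            if not line.startswith(LL_HLS_TAG_PREFIXES)]
    return "\n".join(kept)


print(legacy_view(SAMPLE_PLAYLIST))
```

What remains is an ordinary HLS playlist with complete segments only, which is why such a player ends up further behind the live edge.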

LL-HLS vs. LL-DASH

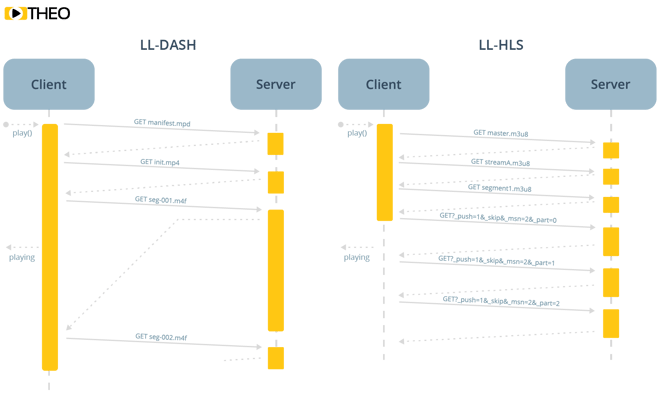

In contrast to LL-HLS, Low Latency DASH (LL-DASH) does not have the notion of parts. It does, however, have a notion of “chunks” or “fragments”. In LL-DASH, segments are split into smaller chunks (often containing a handful of frames), which are then delivered using HTTP chunked transfer encoding (CTE). This means the origin doesn’t have to wait until the segment is completely encoded before the first chunk can be sent to the player. There are some differences with the approach of LL-HLS, but in practice, it is quite similar.

A first difference is that LL-HLS parts are individually addressable, either as tiny files or as byte ranges in the complete segment. LL-DASH has an advantage here, as it does not depend on a manifest update before the player can make sense of a new chunk. LL-HLS, however, offers the flexibility to provide additional data on parts, such as marking where IDR frames can be found and decoding can be started.

Interestingly, it is possible to reuse the same segments (and thus chunks and parts) for both LL-HLS and LL-DASH. In order to achieve this, segments should be created with large enough chunks/parts to support LL-HLS. By addressing the LL-HLS parts as byte-ranges inside a larger segment, the segment can be listed both in an LL-DASH manifest and an LL-HLS playlist. This way, each LL-HLS part can be provided to the client once it is available on the origin. Similarly, the origin for DASH can provide the same data as a chunk to the player over CTE. While both an MPEG-DASH manifest and an HLS playlist would still need to be generated, this approach avoids duplicate storage of the media stream, which can lead to large cost savings.
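A packager that follows this approach could derive the LL-HLS part entries directly from the sizes of the chunks it writes into the shared segment. The sketch below assumes that every chunk becomes exactly one part and that all parts have the same duration. The function name `part_tags`, the file name and the sizes are made up for this example.

```python
def part_tags(segment_uri, chunk_sizes, part_duration):
    """Describe consecutive chunks of one segment file as byte-range EXT-X-PART tags."""
    tags = []
    offset = 0
    for size in chunk_sizes:
        tags.append(
            f'#EXT-X-PART:DURATION={part_duration:.3f},'
            f'URI="{segment_uri}",BYTERANGE="{size}@{offset}"'
        )
        # The next part starts right after the bytes of this one.
        offset += size
    return tags


# Two chunks of 2000 and 1500 bytes written so far into the shared segment file.
for tag in part_tags("segment101.mp4", [2000, 1500], 1.0):
    print(tag)
```

The same `segment101.mp4` can then be referenced from the DASH manifest, so only one copy of the media has to be stored.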

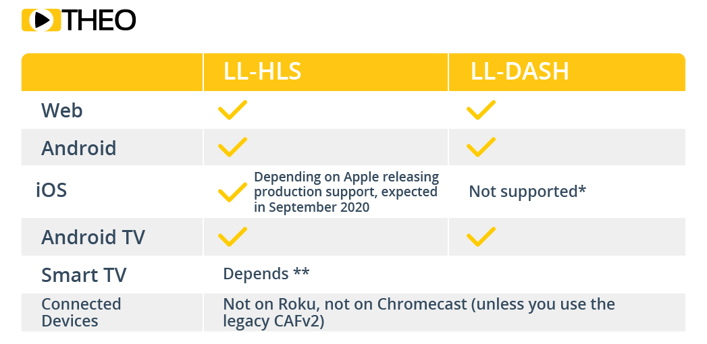

When we look at compatibility, LL-HLS and LL-DASH are mostly supported on the same platforms, but there are some important nuances. In the case of iOS, we don’t expect LL-DASH to be used in production due to the restrictions on the App Store. The trickiest platforms to support are predicted to be smart TVs and some connected devices. On connected devices, especially older ones, being restricted to native or limited players can cause problems for the low latency use case: older versions of the software don’t support it, and software updates on those platforms are rare.

What to Expect with LL-HLS Moving Forward

The LL-HLS preliminary specification has been merged into the HLS standard, which means that it’s safe for vendors to start investing in LL-HLS adoption. We anticipate other vendors will aim to start supporting LL-HLS in production in September, as it’s likely that Apple will announce that LL-HLS will be part of iOS 14 alongside their new iPhone releases. During Apple’s WWDC 2020, which kicked off on June 22nd, Roger Pantos hosted a session on LL-HLS, which you can watch here. In the session, Pantos announced that LL-HLS is coming out of beta and will be available for everyone in iOS 14, tvOS 14, watchOS 7, and macOS later this year, most likely around the iPhone Event in September. You can also read about the updates in a previous blog post. At THEO, we already have beta players up and running. If you’re looking to get started on implementing, you can talk to our Low Latency experts.

What's Next?

Now that you know the specification and how it works, how can you implement LL-HLS today? In our next blog we discuss implementation of LL-HLS. Subscribe to our insights so you don’t miss it.
