LL-HLS Series: Implementing LL-HLS Today

by THEOplayer on July 9, 2020

In previous blogs we covered how the LL-HLS spec has evolved and how it actually works. In this blog we discuss what an end-to-end solution looks like, which use cases the spec suits best, and what THEO recommends for an LL-HLS implementation.

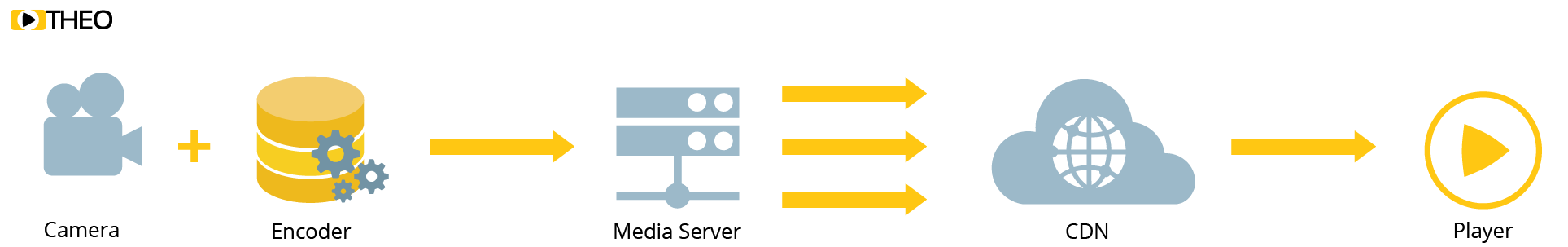

How Does an End-to-End LL-HLS Solution Work?

An LL-HLS pipeline doesn’t differ much from other live streaming E2E solutions:

- An encoder compresses the stream using H.264, H.265 (HEVC) or similar codecs for video and AAC, AC-3 or similar codecs for audio.

- From there, content can optionally be sent to a transcoder (often cloud hosted) to generate a full ABR ladder.

- Those feeds are sent to the packager. The packager is where a big part of the magic happens in the LL-HLS case. It is responsible for preparing the correct playlists with the new tags and attributes, the segments and the LL-HLS parts, and for offering them up to an origin.

- The origin also receives some additional responsibilities from an LL-HLS perspective. There are three major changes:

  - Where HLS did not require query parameters in the past, the new HLS version allows specific query parameters to be sent, which influence the playlist that is returned.

  - The origin also has to support blocking playlist reloads and blocking preload hints, meaning it has to be able to keep a request open for a longer time.

  - Finally, the origin is required to support HTTP/2.

- Once content is available on an origin, a player can request the relevant media information from a content delivery network (CDN), which in turn will request it from the origin. Note that for LL-HLS to work as intended, the CDN and player must also support HTTP/2.

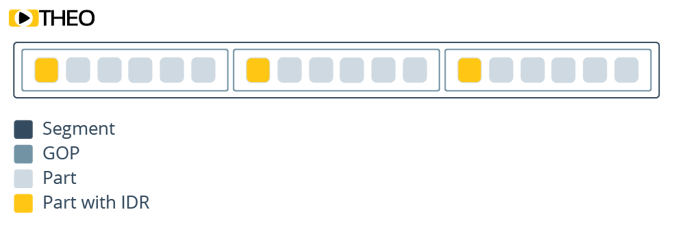
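To make the query parameters and blocking playlist reloads more concrete, here is a minimal sketch of how a client could build a blocking reload request. The directive names `_HLS_msn` and `_HLS_part` come from Apple's LL-HLS specification rather than from this article; the function name and the example URL are hypothetical and only serve as illustration.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def blocking_reload_url(playlist_url, next_msn, next_part=None):
    """Return playlist_url with LL-HLS delivery directives appended.

    _HLS_msn asks the origin to hold the response until the playlist
    contains the given Media Sequence Number; _HLS_part narrows the
    request to a specific part of that segment.
    """
    scheme, netloc, path, query, fragment = urlsplit(playlist_url)
    # Keep unrelated parameters (for example auth tokens), but drop
    # directives left over from a previous reload.
    params = [(k, v) for k, v in parse_qsl(query) if not k.startswith("_HLS_")]
    params.append(("_HLS_msn", str(next_msn)))
    if next_part is not None:
        params.append(("_HLS_part", str(next_part)))
    return urlunsplit((scheme, netloc, path, urlencode(params), fragment))


if __name__ == "__main__":
    # Hypothetical playlist URL, used only for illustration.
    print(blocking_reload_url("https://example.com/live/video.m3u8", 273, 2))
```

The origin is expected to keep such a request open until the requested segment or part has been added to the playlist, which is why it has to tolerate long-lived requests.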

The player will, of course, need to support LL-HLS, including its new playlists, blocking requests, and LL-HLS parts. Simplifying dramatically: for LL-HLS, parts are the new segments, and a player should buffer three parts (including a relevant IDR frame) before playback can start. From there, the player remains responsible for everything it does from a normal HLS perspective: refreshing the playlists, observing network capabilities and environments (and shifting to the correct variant stream when changes are detected), and providing accessibility features such as subtitles and alternative audio tracks. While all of these aspects have to be updated from an algorithmic perspective, a player that implements this well should require no change from an integration standpoint.

When Should You Use LL-HLS?

In situations where latency plays an important role in how viewers consume your content, LL-HLS can solve your latency problems. It is the best match especially when streaming to Apple devices. Take an interactive scenario where you are following a live online lecture and need to ask the teacher a question. If the glass-to-glass latency is high, your question may arrive many seconds later, when the teacher could have moved on to the next topic, resulting in a loss of context. Another example is streaming a big game and hearing your neighbor cheer for a goal you haven’t seen on your screen yet, which spoils the experience. It’s also important to take second-screen viewing into account: viewers who read updates from the game on their mobile phone while streaming the game on their TV can have the experience spoiled in the same way.

The biggest implementation challenges we see with LL-HLS are in use cases like Server-Side Ad Insertion (SSAI), Digital Rights Management (DRM) and subtitles. If your use case requires subtitles, DRM or SSAI, it is crucial to test it properly. As LL-HLS was finalized only recently, most vendors are not tackling these cases yet. Because they represent some of the most important capabilities for making content accessible, protecting it and monetizing it, it is crucial that you get this right. We will go into more detail about SSAI, subtitles, DRM and ABR and their implementation with LL-HLS in our next blog.

Another issue we still see is compatibility between server and player. There have been many versions of Low Latency HLS (you can read about the evolution of the spec in a previous blog), and the server and client must support the same version: the final one, not the older HTTP/2 PUSH-based version or the intermediate draft version. Because player and packaging vendors may have started their implementations at different points in time, they might not cover all subtleties of the specification. For example, the latest version makes blocking playlist reload optional, whereas it was mandatory in the intermediate draft version.

The Impact of Segment Size, GOP Size and Part Size

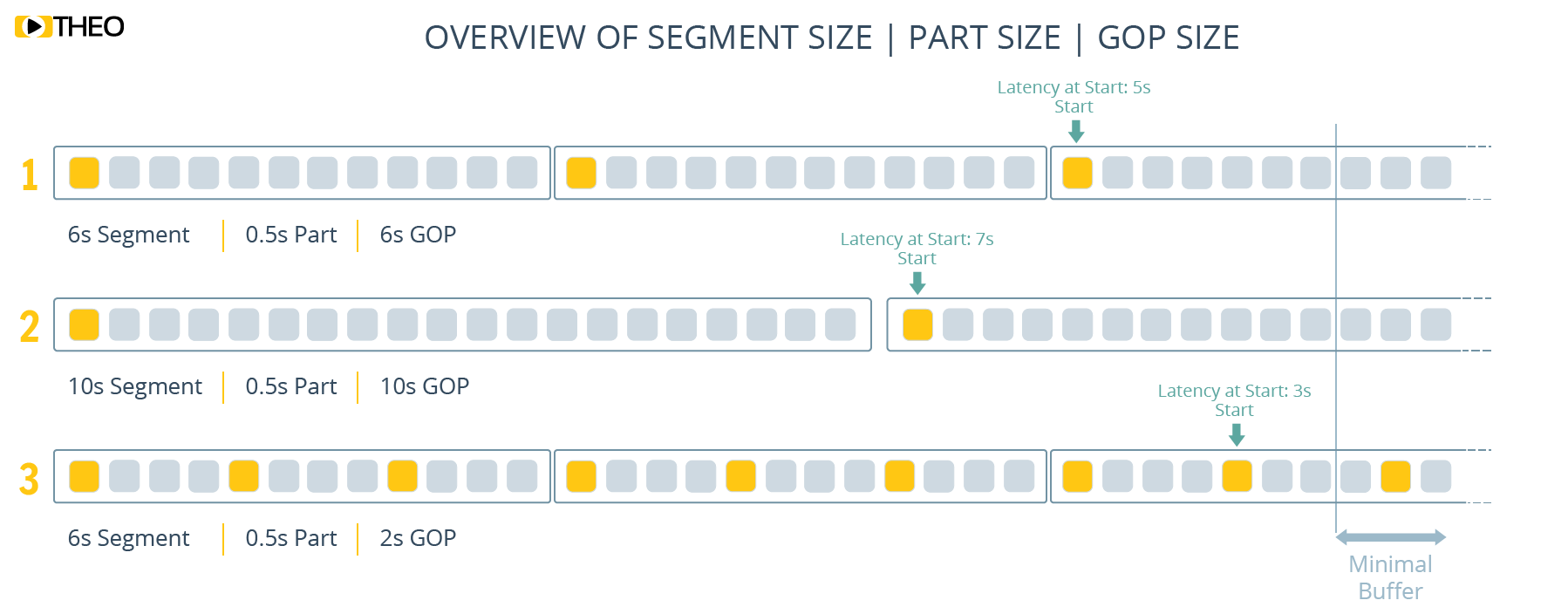

There are different parameters to keep in mind when implementing LL-HLS. In terms of latency, the three most important would be segment size, GOP size, and part size. Let’s discuss each one in a bit more detail:

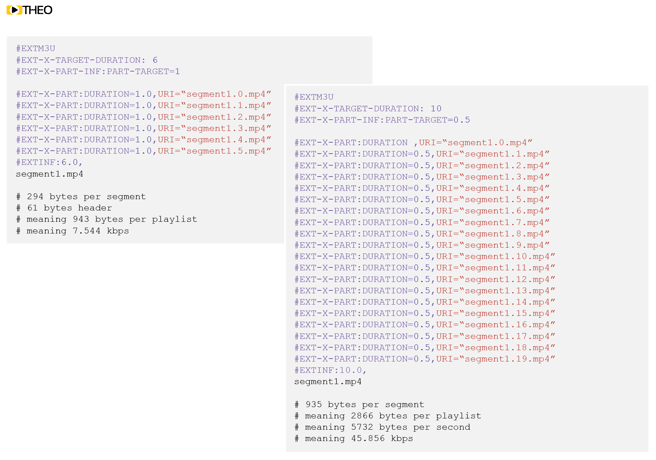

Segment Size

For LL-HLS, Apple’s recommendation for segment size still seems to be 6 seconds, the same as with traditional HLS. It is important to know that segment size will not impact latency when working with LL-HLS. With traditional HLS, protocol latency could be calculated as 4 times the segment size, but this calculation is no longer valid in the low latency scenario.

Segment size does impact the number of parts which you will need to list in your playlist. As a result, it impacts the size of the playlist (and how much data must be loaded in parallel with the media data). Long segments can therefore significantly increase the size of the playlist, causing overhead on the network and impacting streaming quality.
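As a rough illustration of the two calculations above, the following sketch computes how many parts a single segment contributes to the playlist and the old "4 times the segment size" latency estimate for traditional HLS. The function names are hypothetical, durations are given in milliseconds to keep the arithmetic exact, and how many segments actually carry part entries in a playlist depends on the packager.

```python
def parts_per_segment(segment_ms: int, part_ms: int) -> int:
    """Number of part entries one segment adds to the playlist."""
    # Ceiling division: a final, shorter part still needs its own entry.
    return -(-segment_ms // part_ms)


def traditional_hls_latency_ms(segment_ms: int) -> int:
    """Rule-of-thumb protocol latency for traditional HLS (4 segments)."""
    return 4 * segment_ms


if __name__ == "__main__":
    for part_ms in (400, 1000):
        count = parts_per_segment(6000, part_ms)
        print(f"6 s segment, {part_ms} ms parts: {count} parts per segment")
    print(f"Traditional HLS, 6 s segments: {traditional_hls_latency_ms(6000)} ms")
```

With 6-second segments, moving from 1-second parts to 400 ms parts raises the number of part entries per segment from 6 to 15, which is where the extra playlist size comes from.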

Another small impact of segment size is that it should be equal to or larger than your GOP size, which we will discuss next.

GOP Size

The GOP size, or the size of your Group of Pictures, is one of the main parameters that determine how often a key frame (or IDR frame) will be available. A player requires a key frame to start decoding, so the GOP size impacts the startup of your stream. Having large GOPs with, for example, only one key frame every 6 seconds means that your player can only start playback at a position that occurs once every 6 seconds. This doesn’t mean your startup time will be 6 seconds, but it might require your player to start at a higher latency. In the 6-second example, your average additional latency at the start will be 3 seconds. Alternatively, the player can wait for the next key frame, adding 3 seconds to your startup time on average and 6 seconds in the worst case. (Our recommendation would be to balance this out, and have the player intelligently determine how big a delay in startup time is acceptable in exchange for a lower latency.)
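The 3-second average and 6-second worst case follow from simple arithmetic, sketched below. It assumes that viewers join at a moment that is uniformly distributed within a GOP, which is an assumption of this sketch; the function name is hypothetical.

```python
def keyframe_penalty_seconds(gop_seconds: float) -> tuple[float, float]:
    """Return (average, worst_case) distance to a usable key frame.

    Assumes the join time is uniformly distributed within a GOP, so the
    average distance is half a GOP and the worst case is a full GOP.
    """
    return gop_seconds / 2, gop_seconds


if __name__ == "__main__":
    for gop in (6, 3, 2):
        average, worst = keyframe_penalty_seconds(gop)
        print(f"GOP {gop} s: average {average} s, worst case {worst} s")
```

The player pays this penalty either as extra latency (starting at the previous key frame) or as extra startup time (waiting for the next one).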

Based on this explanation, small GOP sizes seem extremely attractive. However, a lot of key frames makes compression less efficient, which means you will use more bandwidth or streaming quality will go down. This effect becomes large when GOP sizes fall below 2 seconds. THEO’s recommendation is to set your key frame interval to 2 to 3 seconds, as it strikes a good balance between compression efficiency and viewer experience at startup.

Part Size

Part size is the final parameter, and it has a very large impact on viewer experience with LL-HLS. In contrast to segment size, part size does have an impact on latency. Many people think that a smaller part size means a lower latency. This is true for part sizes larger than about 400ms. You must, however, keep in mind that the playlist has to be loaded for every part as well, so reducing part size increases metadata overhead. This also shows the delicate balance with segment size described earlier. In field tests, we see that for part sizes smaller than 400ms latency seems to plateau as the overhead becomes larger. As a result, end-to-end latency usually levels out around 2-3 seconds. For this reason, we recommend part sizes between 400ms and 1s.
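Since the playlist has to be loaded for every part, the playlist request rate scales inversely with part size. The sketch below makes that overhead visible. The function name and the `playlists` parameter (how many media playlists a player follows at once, for example video plus a separate audio rendition) are hypothetical.

```python
def playlist_reloads_per_minute(part_ms: int, playlists: int = 1) -> float:
    """Playlist requests per minute when reloading once per part."""
    return playlists * 60_000 / part_ms


if __name__ == "__main__":
    for part_ms in (1000, 400, 200):
        rate = playlist_reloads_per_minute(part_ms)
        print(f"{part_ms} ms parts: {rate:.0f} playlist reloads per minute")
```

Halving the part size doubles the number of playlist loads, on top of the part requests themselves, which is consistent with the overhead described above for very small parts.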

What About Buffer Size?

When talking about latency, buffer size is usually a large contributor. While the buffer ensures smooth playback, a large buffer increases latency. In LL-HLS, the buffer size largely depends on the part size. The LL-HLS specification tells you to keep a buffer of 3 part durations from the end of the playlist. For example, when you have parts of 400ms, your buffer will target a size of 1.2s. Based on our tests, when your part size is larger, for example around 1 second per part, the buffer size can be slightly decreased without impact on user experience. As a baseline, you should never have a buffer of less than 2 parts. If latency is extremely crucial for the use case, you could reduce the buffer further, but you then risk a bad quality of experience or buffering during playback.
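The buffer rule above can be written down as a small helper. This is a sketch under the stated rule of thumb (target 3 part durations, never fewer than 2 parts), not a description of how any particular player computes its buffer; the function and parameter names are hypothetical and durations are in milliseconds.

```python
def buffer_target_ms(part_ms: int, parts: int = 3, min_parts: int = 2) -> int:
    """Target buffer size as a whole number of part durations."""
    # Never go below the 2-part baseline, even if fewer parts are requested.
    return max(parts, min_parts) * part_ms


if __name__ == "__main__":
    print(buffer_target_ms(400))            # default of 3 parts at 400 ms
    print(buffer_target_ms(1000, parts=2))  # slightly reduced for 1 s parts
```

For 400ms parts the default gives the 1.2s target mentioned above, while 1-second parts with a reduced 2-part buffer give 2 seconds.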

How is THEO Implementing the New Specification?

At THEO, we have been closely following the LL-HLS specification. We first developed a player for the initial draft specification using HTTP/2 Push, then evolved it to follow the intermediate specification, and have now updated it to follow the final version of the specification. This player is under active testing with a wide range of packager vendors and CDNs.

Are you a packager vendor who is interested in testing with us? Contact our LL-HLS experts today.

What's Next?

Low latency is constantly evolving, especially with LL-HLS, where a lot of development is happening on all sides and Apple plans to launch it in their products with iOS 14 (set to launch in September). As the tools are not fully productized and mature yet, it is extremely important to test everything properly moving forward. As mentioned above, THEO currently has a beta player available that supports the most recent LL-HLS update. If you’re interested, don’t hesitate to contact our LL-HLS experts. In our next blog we will dive deeper into the implementation of LL-HLS with subtitles, ABR, SSAI and DRM.

Interested in learning more about implementing a Low Latency solution?

On-Demand Webinar: Apple’s LL-HLS is Finally Here

Hosted by our CTO Pieter-Jan Speelmans

Contact our LL-HLS Experts
